Running Kubernetes at scale means deciding how traffic enters the cluster and how it moves between services, and a high-performance proxy sits at the center of both decisions. This guide covers using Envoy Gateway as your Kubernetes proxy. It walks through the control plane, ingress controller, service proxy, headless services, API gateway, and edge proxy roles, and touches on lighter use cases for Envoy. It also looks at the key features of Envoy Gateway, its place in service mesh architectures, and the role played by environment variables. Whether you’re an application developer or a network administrator, this is a starting point for Kubernetes service management, cloud-native applications, and dynamic service-to-service communication.

Understanding Envoy Gateway as a Kubernetes Proxy

Definition and architecture of Envoy Gateway

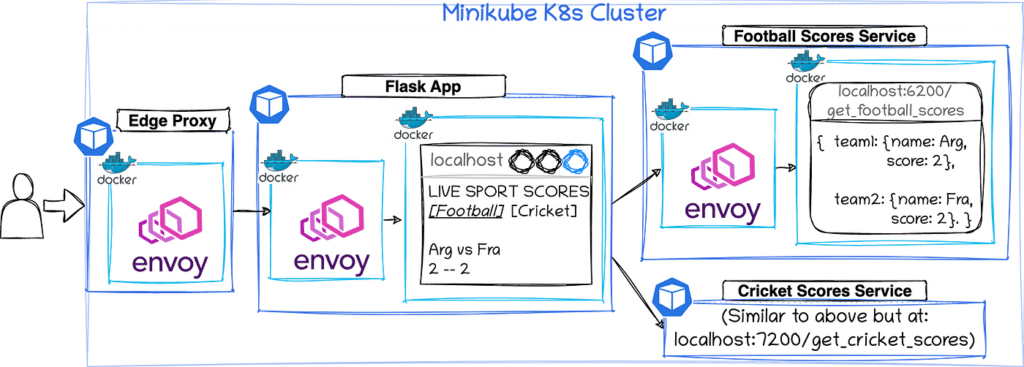

Envoy Proxy is a high-performance distributed proxy and communication bus, written in C++ and designed for large, modern service-oriented architectures. Envoy Gateway is a separate open source project that manages Envoy Proxy as a standalone or Kubernetes-based gateway and is configured through the Kubernetes Gateway API. Originally built to solve common networking problems in microservice architectures, Envoy operates as a service proxy for cloud-native applications. It can run at the edge of the cluster or as a sidecar that sits alongside each service and acts as an envoy for its incoming and outgoing network traffic. This positioning lets it handle load balancing and circuit breaking and, most importantly, take the complexities of distributed systems off your applications. Key features include L3/L4 and L7 filter chains and first-class HTTP/2 and gRPC proxying with advanced load balancing.

Envoy in the Kubernetes ecosystem

Within the Kubernetes ecosystem, Envoy has found its niche as an edge proxy, a service proxy, and an ingress/egress proxy. It keeps service-to-service communication efficient while supporting high availability and reliability. Kubernetes service discovery integrates well with Envoy, which helps it manage complex microservice interactions. Envoy Gateway also uses the Kubernetes Gateway API for dynamic routing configuration.

Envoy as a high-performance proxy

Envoy stands out as a high-performance proxy because of its extensible, interface-driven design, which allows for a rich set of extensions and filters. It speaks HTTP/2 and gRPC to both clients and back-end servers, and it can handle a large number of concurrent connections at high throughput. Its architecture is built for horizontal scalability: you add capacity by running more proxy instances.

Key Concepts to Know

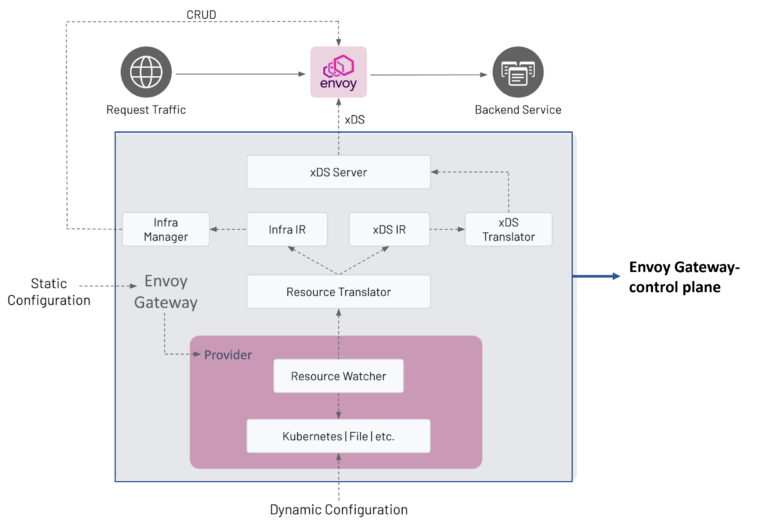

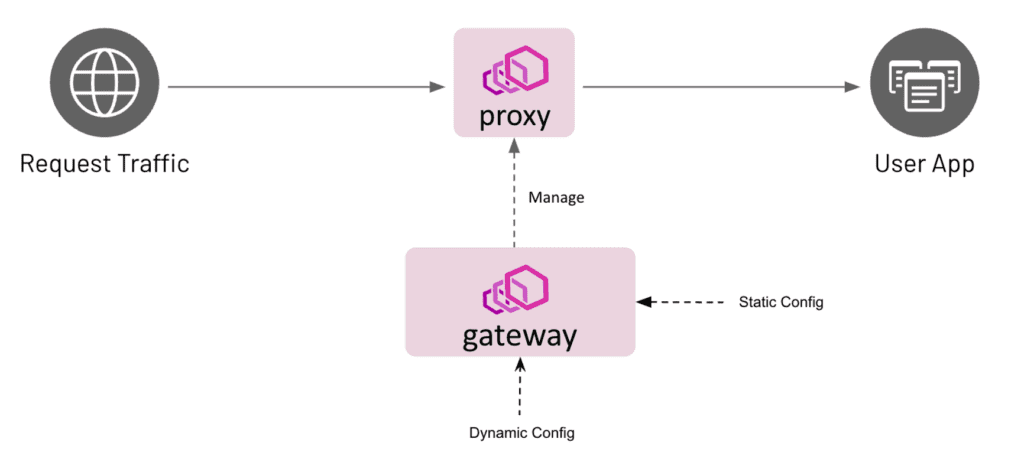

Control plane and data plane

The control plane and data plane are fundamental to understanding how Envoy Gateway works in a Kubernetes cluster. The control plane maintains and distributes network configuration, whereas the data plane (made up of Envoy proxies) handles the actual network traffic according to the configuration it receives. This split is a defining feature of service mesh architectures and enables efficient, reliable networking in cloud-native applications.
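
The split between the two planes can be sketched as a toy model. This is only an illustration, assuming a hypothetical `ControlPlane` that pushes versioned route tables to hypothetical `Proxy` objects; it is not how Envoy Gateway is implemented, and the class and service names are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Proxy:
    """Data plane: holds the last config it received and routes with it."""
    name: str
    version: int = 0
    routes: dict = field(default_factory=dict)

    def apply(self, version: int, routes: dict) -> None:
        # Ignore stale pushes so an old snapshot never overwrites a newer one.
        if version > self.version:
            self.version, self.routes = version, dict(routes)

    def route(self, path: str) -> str:
        # Longest matching prefix wins; unknown paths get no backend.
        matches = [p for p in self.routes if path.startswith(p)]
        return self.routes[max(matches, key=len)] if matches else "no-route"


@dataclass
class ControlPlane:
    """Control plane: owns the desired state and pushes it to every proxy."""
    proxies: list = field(default_factory=list)
    version: int = 0

    def publish(self, routes: dict) -> None:
        self.version += 1
        for proxy in self.proxies:
            proxy.apply(self.version, routes)


edge, sidecar = Proxy("edge"), Proxy("sidecar")
plane = ControlPlane([edge, sidecar])
plane.publish({"/": "web", "/api": "api-v1"})
plane.publish({"/": "web", "/api": "api-v2"})
print(edge.route("/api/users"), sidecar.version)
```

The proxies never decide what the routes should be; they only apply the newest version they are given, which is the essence of the control plane/data plane separation.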

Service mesh architectures

Service mesh architectures have seen widespread adoption in modern distributed system environments. They provide a dedicated layer for handling inter-service communication responsibilities such as service discovery, load balancing, traffic management, and security. The common features of service mesh architecture include a decentralized design, high scalability, and extensibility, all of which find a fitting match with Envoy.

API Gateway vs. Service Mesh

In a microservice architecture, an API gateway provides the entry point for traffic coming from outside the cluster, while a service mesh, typically built on a proxy such as Envoy, goes a step further and manages internal service-to-service communication. The two overlap in places, but a service mesh does not eliminate the need for an API gateway, and both can coexist in the same architecture.

Headless services and Kubernetes service discovery

A headless service in Kubernetes is a Service with no cluster IP. Instead of a single virtual IP that load-balances across pods, a DNS lookup for the service returns the IP addresses of the individual pods, so clients can address each pod directly. This is particularly useful for stateful applications or for building a service mesh. Because the proxy sees every endpoint, Envoy can apply its own load balancing and health checking, which makes it a good fit for managing this traffic.
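
As a minimal sketch of what "headless" means in practice, the snippet below builds a Service manifest in Python and prints it as JSON. The service name, label, and port are hypothetical; the only part that makes the Service headless is `clusterIP` set to `"None"`.

```python
import json


def headless_service(name: str, app_label: str, port: int) -> dict:
    """Build a Service manifest; clusterIP "None" is what makes it headless."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "clusterIP": "None",  # no virtual IP: DNS returns the pod IPs
            "selector": {"app": app_label},
            "ports": [{"name": "http", "port": port, "targetPort": port}],
        },
    }


# "orders-headless" and the "orders" label are made-up example values.
manifest = headless_service("orders-headless", "orders", 8080)
print(json.dumps(manifest, indent=2))
```

Since kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied to a test cluster.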

Setting Up Envoy in a Kubernetes Cluster

Prerequisites for Kubernetes and Envoy

Before setting up Envoy in your Kubernetes cluster, you need a functioning Kubernetes cluster, a running Docker daemon, and Helm installed. Familiarity with Kubernetes networking, Docker images, and Helm charts will help when deploying the Envoy proxy.

Deploying Envoy using Docker images

Docker images are the easiest way to deploy Envoy in a Kubernetes cluster. The Envoy image can be pulled from Docker Hub (the project publishes it as envoyproxy/envoy), and pods can then be created in the cluster using a Deployment or DaemonSet.

Using helm charts for deployment

Helm charts provide a more curated way of deploying applications. They help manage Kubernetes applications by defining, installing, and upgrading them through the use of templates. You can install Envoy in your Kubernetes cluster using Helm to simplify the deployment process.

Verifying the installation

Upon successful installation of Envoy in your Kubernetes cluster, the next step is verification. You can do this by checking the status of the Envoy pods using the kubectl get pods command, and ensuring they are running as expected.
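
For scripted checks, the same verification can be done on the JSON form of the pod list. The sketch below assumes you have captured the output of `kubectl get pods -o json` for the namespace you installed into; the sample data here is hand-written in that shape, and the pod names are invented.

```python
import json


def unready_pods(pod_list_json: str) -> list:
    """Return names of pods that are not Running with every container ready."""
    problems = []
    for pod in json.loads(pod_list_json)["items"]:
        status = pod.get("status", {})
        containers = status.get("containerStatuses", [])
        ready = bool(containers) and all(c.get("ready") for c in containers)
        if status.get("phase") != "Running" or not ready:
            problems.append(pod["metadata"]["name"])
    return problems


# Hand-written sample in the shape of `kubectl get pods -o json` output.
sample = json.dumps({"items": [
    {"metadata": {"name": "envoy-a"},
     "status": {"phase": "Running", "containerStatuses": [{"ready": True}]}},
    {"metadata": {"name": "envoy-b"},
     "status": {"phase": "Pending"}},
]})
print(unready_pods(sample))
```

An empty list means every pod in the input is running and ready.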

Configuring Envoy as an Ingress Controller

Introduction to Kubernetes Ingress

Kubernetes Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. The routing is carried out by an ingress controller, which can be built on Envoy.

Setting up Envoy as an ingress controller

Setting up Envoy as an ingress controller in the Kubernetes cluster involves deploying it as a Kubernetes service and ensuring all necessary configurations are in place for directing incoming traffic to the relevant services.

Defining Envoy configuration for ingress routing

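In Envoy’s own terms, ingress routing is defined by listeners (where traffic enters), routes (how requests are matched), clusters (the upstream services), and filters (processing applied along the way). You rarely write these by hand in Kubernetes. Instead, a controller watches Kubernetes Ingress or Gateway API resources and translates them into Envoy configuration that determines how incoming traffic is routed to services.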
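
With Envoy Gateway, routing rules are usually expressed as Gateway API resources such as HTTPRoute. The following sketch builds one in Python and prints it as JSON. It assumes a Gateway named `edge-gateway` already exists in the same namespace; that name, the route name, and the `orders` Service are hypothetical.

```python
import json


def http_route(name: str, gateway: str, prefix: str, service: str, port: int) -> dict:
    """Build a Gateway API HTTPRoute that sends a path prefix to one Service."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": name},
        "spec": {
            "parentRefs": [{"name": gateway}],  # the Gateway this route attaches to
            "rules": [{
                "matches": [{"path": {"type": "PathPrefix", "value": prefix}}],
                "backendRefs": [{"name": service, "port": port}],
            }],
        },
    }


# All names below are example values, not defaults of any tool.
route = http_route("orders-route", "edge-gateway", "/orders", "orders", 8080)
print(json.dumps(route, indent=2))
```

The controller, not the author of the route, is responsible for turning this declaration into listeners, routes, and clusters inside the proxy.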

Applying configurations using kubectl apply -f command

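This involves writing a manifest file (YAML or JSON) that holds the configuration details. To apply the changes to the cluster, run the “kubectl apply -f” command followed by the path to that file. Note that the kubeconfig file is something different: it stores the cluster address and credentials kubectl uses to connect, not your routing configuration.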

Using Envoy for Service-to-Service Communication

The role of sidecar proxies

The key role of Envoy within the Kubernetes ecosystem is that of a sidecar proxy. It essentially intercepts the network communication between microservices in a Kubernetes cluster, taking over the tasks of service discovery, load balancing, failure recovery, metrics, and monitoring.

Dynamic configuration for service discovery

As part of the data plane, Envoy is configured dynamically by the control plane (through its xDS discovery APIs) and adapts as the service topology changes, so service discovery stays accurate when pods are added, removed, or rescheduled.

Load balancing and circuit breakers

Because Envoy handles both inbound and outbound traffic, it is the natural place to load balance requests across multiple instances of a service. It also implements circuit breaking, which caps connections and outstanding requests to an upstream so that an overloaded service fails fast instead of dragging down its callers.
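
The two ideas can be combined in a small sketch. Envoy’s circuit breakers are thresholds (for example on concurrent requests) rather than a timed open/closed state machine, so the hypothetical `Upstream` class below models a round-robin balancer with a cap on in-flight requests. It is an illustration only, and the endpoint addresses are invented.

```python
import itertools


class Upstream:
    """Round-robin over endpoints with a cap on in-flight requests."""

    def __init__(self, endpoints, max_requests):
        self._cycle = itertools.cycle(endpoints)
        self.max_requests = max_requests
        self.active = 0
        self.overflow = 0  # requests rejected because the cap was reached

    def acquire(self):
        # Fail fast instead of queueing once the threshold is reached.
        if self.active >= self.max_requests:
            self.overflow += 1
            return None
        self.active += 1
        return next(self._cycle)

    def release(self):
        # Called when a request finishes, freeing one slot.
        self.active = max(0, self.active - 1)


pool = Upstream(["10.0.0.1", "10.0.0.2"], max_requests=2)
picked = [pool.acquire() for _ in range(3)]  # third call exceeds the cap
pool.release()
picked.append(pool.acquire())
print(picked, pool.overflow)
```

Rejected requests are counted rather than queued, which mirrors the fail-fast intent of circuit breaking.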

Securing inter-service communication

Security is a crucial aspect of service-to-service communication, and Envoy supports authentication, authorization, and encryption through TLS. Meshes built on Envoy, such as Istio, also allow gradual adoption of mutual TLS through a permissive mode in which a service accepts both encrypted and plaintext connections while its clients are migrated.

Advanced Traffic Management

Implementing traffic splitting and shadowing

Envoy supports advanced traffic management features such as traffic splitting and traffic shadowing. Traffic splitting divides traffic among several services or versions according to weights or other criteria. Traffic shadowing, on the other hand, mirrors live traffic to a second destination for testing, and the mirrored responses are discarded.
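
Weighted splitting can be illustrated with a few lines of Python. This is a sketch of the idea, not Envoy’s implementation: the `pick_backend` helper and the `checkout-v1`/`checkout-v2` names are hypothetical, and a seeded random generator is used so the run is repeatable.

```python
import random
from collections import Counter


def pick_backend(weights: dict, rng: random.Random) -> str:
    """Choose one backend with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


rng = random.Random(7)  # fixed seed so the run is repeatable
split = {"checkout-v1": 90, "checkout-v2": 10}
counts = Counter(pick_backend(split, rng) for _ in range(10_000))
print(counts)
```

Over many requests the observed share of each backend approaches its weight, which is what makes a 90/10 split useful for trying a new version on a small slice of traffic.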

Rate limiting and traffic shaping

Envoy can enforce rate limits locally in each proxy or by calling an external rate limit service, and limits can be applied to inbound and outbound traffic. Traffic shaping techniques can also be used to prioritize certain traffic flows over others, which helps meet quality of service targets.
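
Envoy’s local rate limiting is configured as a token bucket with `max_tokens`, `tokens_per_fill`, and `fill_interval`. The sketch below models that behavior with a hypothetical `TokenBucket` class; the clock is passed in explicitly so the example is deterministic, and the code is an approximation of the idea rather than Envoy’s own logic.

```python
class TokenBucket:
    """Allow up to max_tokens requests, refilled in steps at a fixed interval."""

    def __init__(self, max_tokens, tokens_per_fill, fill_interval):
        self.max_tokens = max_tokens
        self.tokens_per_fill = tokens_per_fill
        self.fill_interval = fill_interval
        self.tokens = max_tokens
        self.last_fill = 0.0

    def allow(self, now):
        # Add tokens for every whole interval that has elapsed since the last fill.
        intervals = int((now - self.last_fill) // self.fill_interval)
        if intervals > 0:
            refill = intervals * self.tokens_per_fill
            self.tokens = min(self.max_tokens, self.tokens + refill)
            self.last_fill += intervals * self.fill_interval
        if self.tokens == 0:
            return False  # a real proxy would answer with HTTP 429 here
        self.tokens -= 1
        return True


bucket = TokenBucket(max_tokens=2, tokens_per_fill=1, fill_interval=1.0)
decisions = [bucket.allow(t) for t in (0.0, 0.1, 0.2, 1.1, 1.2)]
print(decisions)
```

The bucket size controls how large a burst is tolerated, while the fill rate sets the sustained request rate.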

Using Envoy for blue/green deployments

Envoy has inherent abilities to perform traffic routing that are useful for implementing blue/green deployments. The ease with which it can gradually shift traffic from one version to another facilitates seamless deployment strategies.
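
A gradual shift can be planned as a series of weight pairs. The sketch below generates such a plan; each stage has the shape of a weighted backend list in a Gateway API HTTPRoute, but the `shift_plan` helper and the `shop-blue`/`shop-green` Service names are hypothetical.

```python
def shift_plan(blue: str, green: str, port: int, steps: int) -> list:
    """Return weighted backend pairs that move traffic from blue to green."""
    plan = []
    for i in range(steps + 1):
        green_weight = round(100 * i / steps)
        plan.append([
            {"name": blue, "port": port, "weight": 100 - green_weight},
            {"name": green, "port": port, "weight": green_weight},
        ])
    return plan


for stage in shift_plan("shop-blue", "shop-green", port=8080, steps=4):
    print(stage)
```

A strict blue/green cutover is the special case with a single step, going from 100/0 straight to 0/100; more steps give a canary-style rollout with a chance to watch metrics between stages.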

Handling inbound and outbound network traffic

As a high-performance proxy, the extensive feature set and extensible architecture of Envoy combine to create a robust solution for handling both inbound and outbound network traffic, irrespective of the complexity of microservice interactions.

Monitoring and Observability

Integrating with Prometheus and Grafana

One crucial aspect of using Envoy involves incorporating logging, monitoring, and observability. This can be achieved by integrating it with Prometheus and Grafana. These tools help visualize the metrics emitted by Envoy and provide deep insight into performance and system health.

Logging and tracing with Envoy

Envoy’s observability capabilities include detailed logging and distributed tracing features. They provide valuable contextual information and a comprehensive view of network traffic, dependencies, and latencies among microservices.

Generating metrics from Envoy proxies

Envoy proxies provide rich metrics that give insights into performance, network behavior, and issues. These metrics are crucial for performance tuning and identifying and fixing issues.
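
Envoy’s admin interface can expose its statistics in the Prometheus text format, which is simple to parse. The sketch below reads that format and computes a 5xx ratio for one cluster. The sample text is illustrative rather than captured from a real proxy, and the metric and label names used (`envoy_cluster_upstream_rq_xx`, `envoy_response_code_class`, `envoy_cluster_name`) are assumptions that should be checked against your own proxy’s output.

```python
import re

LINE = re.compile(r'^(?P<name>[A-Za-z_:][\w:]*)(?:\{(?P<labels>.*)\})?\s+(?P<value>\S+)$')
LABEL = re.compile(r'(\w+)="([^"]*)"')


def parse_metrics(text: str) -> list:
    """Parse Prometheus text exposition lines into (name, labels, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank lines and HELP/TYPE comments
            continue
        m = LINE.match(line)
        if m:
            labels = dict(LABEL.findall(m.group("labels") or ""))
            samples.append((m.group("name"), labels, float(m.group("value"))))
    return samples


def error_ratio(samples: list, cluster: str) -> float:
    """Share of upstream responses for one cluster that were 5xx."""
    by_class = {}
    for name, labels, value in samples:
        if name == "envoy_cluster_upstream_rq_xx" and labels.get("envoy_cluster_name") == cluster:
            by_class[labels.get("envoy_response_code_class")] = value
    total = sum(by_class.values())
    return by_class.get("5", 0.0) / total if total else 0.0


# Illustrative sample, not captured from a real proxy.
sample = '''
# TYPE envoy_cluster_upstream_rq_xx counter
envoy_cluster_upstream_rq_xx{envoy_response_code_class="2",envoy_cluster_name="orders"} 950
envoy_cluster_upstream_rq_xx{envoy_response_code_class="5",envoy_cluster_name="orders"} 50
'''
print(error_ratio(parse_metrics(sample), "orders"))
```

In practice Prometheus scrapes and stores these values and Grafana graphs them; a script like this is mainly useful for quick checks or tests.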

Analyzing network traffic patterns

The insight gained from Envoy metrics enables you to analyze network traffic patterns, revealing trends that can drive performance improvements. Analysis can also identify unusual patterns, assisting in resolving issues before they escalate into larger problems.

Customizing and Extending Envoy

Working with Envoy-specific extensions

Envoy can be extended and customized through its rich set of Envoy-specific extensions and filters. These expand its core functionality and leave room for optimization and adaptation to specific use cases.

Building custom filters for advanced use cases

Envoy provides the ability to create custom filters, extending the default filter chain provided by Envoy. This ability lets you customize how the Envoy proxy deals with incoming and outgoing network traffic, thereby catering to advanced use cases.

Modifying Envoy configuration for performance tuning

Modifying Envoy’s configuration allows better performance tuning. You can leverage environment variables for dynamic configurations, ensuring Envoy’s runtime behavior adapts to the needs of your Kubernetes cluster.

Leveraging environment variables for dynamic configurations

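Environment variables let the same Envoy image and configuration template be reused across environments. A common pattern is to render the configuration from a template at container startup, filling in values such as ports, node identifiers, or upstream addresses from variables set on the pod, so that Envoy’s settings match the conditions of the environment it runs in.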
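
One way to do this is to render the bootstrap file from a template before the proxy starts. The sketch below uses Python’s `string.Template`; the variable names (`ADMIN_PORT`, `POD_NAME`, `SERVICE_NAME`) and their values are hypothetical, and the fragment shows only the `admin` and `node` sections of a bootstrap file.

```python
import string

TEMPLATE = string.Template("""\
admin:
  address:
    socket_address: { address: 0.0.0.0, port_value: ${ADMIN_PORT} }
node:
  id: ${POD_NAME}
  cluster: ${SERVICE_NAME}
""")


def render(env: dict) -> str:
    """Fill the template; substitute() raises KeyError if a variable is missing."""
    return TEMPLATE.substitute(env)


# In a container entrypoint you would pass os.environ instead of this dict.
print(render({"ADMIN_PORT": "9901", "POD_NAME": "envoy-0", "SERVICE_NAME": "edge"}))
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing variable stops the startup with an error instead of producing a config with a literal placeholder in it.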

Integrating Envoy with Existing Service Meshes

Envoy’s role in the service mesh landscape

Envoy has played a pioneering role in shaping the service mesh landscape and continues to be used as a foundational component for multiple service meshes, providing core features such as load balancing, service discovery, and more.

Connecting Envoy with Istio or Linkerd

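How Envoy fits depends on the mesh. Istio already uses Envoy as its sidecar data plane, so Envoy’s features are available through Istio’s own configuration. Linkerd ships its own lightweight proxy instead, so Envoy is more likely to sit alongside it at the edge of the cluster as an ingress or API gateway. In both cases you benefit from Envoy’s feature-rich and extensible architecture.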

Leveraging the Kubernetes Gateway API

With the Kubernetes Gateway API, Envoy is configured through declarative resources rather than hand-written proxy configuration. These resources define how connections from outside the cluster are routed to services within the cluster.

Enhancing service meshes with Envoy’s advanced features

Envoy’s advanced features, such as load balancing, circuit breakers, traffic mirroring, and rate limiting, can greatly enhance the capabilities of existing service meshes.

Troubleshooting Common Issues

Debugging Envoy configuration errors

Configuration errors are common when setting up Envoy. Debugging them involves reading the Envoy logs, finding the message that names the rejected field or resource, and collecting the surrounding details about the error condition.
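
Some mistakes can be caught before the proxy ever starts. As one example, the sketch below checks a static Envoy configuration, already loaded into a Python dict, for routes that point at clusters that are never defined. The `undefined_clusters` helper is hypothetical, and the traversal assumes the usual `static_resources` layout with an HTTP connection manager holding an inline `route_config`.

```python
def undefined_clusters(bootstrap: dict) -> set:
    """Return cluster names that routes reference but static_resources never defines."""
    static = bootstrap.get("static_resources", {})
    defined = {c["name"] for c in static.get("clusters", [])}
    referenced = set()
    for listener in static.get("listeners", []):
        for chain in listener.get("filter_chains", []):
            for flt in chain.get("filters", []):
                route_config = flt.get("typed_config", {}).get("route_config", {})
                for vhost in route_config.get("virtual_hosts", []):
                    for route in vhost.get("routes", []):
                        cluster = route.get("route", {}).get("cluster")
                        if cluster:
                            referenced.add(cluster)
    return referenced - defined


# Trimmed-down example config; "api" is routed to but never defined.
bootstrap = {"static_resources": {
    "listeners": [{"filter_chains": [{"filters": [{"typed_config": {"route_config": {
        "virtual_hosts": [{"routes": [
            {"match": {"prefix": "/api"}, "route": {"cluster": "api"}},
            {"match": {"prefix": "/"}, "route": {"cluster": "web"}},
        ]}]}}}]}]}],
    "clusters": [{"name": "web"}],
}}
print(undefined_clusters(bootstrap))
```

Envoy can also validate a file itself without serving traffic when started with `--mode validate`, which is a useful complement to checks like this one.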

Handling container networking issues

Container networking issues also affect Envoy, because it runs as a service proxy inside the Kubernetes networking model and depends on pod-to-pod connectivity. Resolving these issues might involve verifying pod network settings and restarting the affected pod instances.

Resolving Kubernetes service discovery problems

Service discovery problems in Kubernetes are another common issue. Potential solutions might include closely monitoring the state of the Envoy instances and troubleshooting any connectivity issues.

Frequently encountered pitfalls and their solutions

Common pitfalls include misunderstanding how traffic flows through the proxy, performance bottlenecks caused by misconfiguration, and memory leaks. Diagnosing them involves monitoring performance metrics, understanding the Envoy configuration that is actually in effect, and keeping up with newer versions and patches. A strong understanding of Envoy’s operational conventions and best practices helps avoid these pitfalls in the first place.

In summary, Envoy Gateway plays a pivotal role in the Kubernetes environment, both as a high-performance proxy and as an integral part of service mesh architectures for modern, cloud-native, distributed applications. Whether you are an application developer or a system operator, understanding how to use Envoy in your Kubernetes ecosystem will help you manage complex microservice interactions effectively and efficiently. Contact SlickFinch today for help or advice on anything Kubernetes.