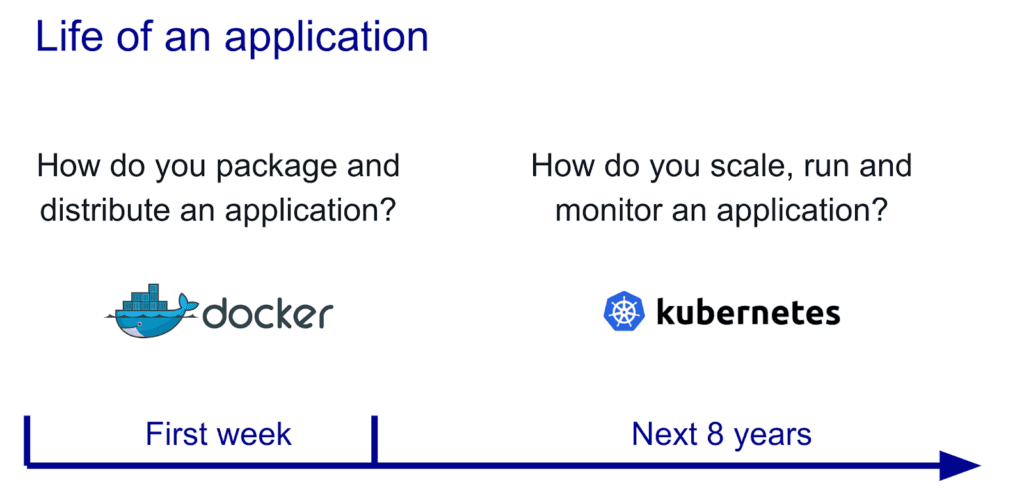

Understanding the subtle but significant differences between Docker and Kubernetes is vital for developers, DevOps teams, and system admins who want to get the most out of their modern applications. A question I’ve often heard over the years, usually from those just learning about container technology, is “which is best, Docker or Kubernetes?”. Those who know the tools will recognize that the question isn’t really valid: Docker and Kubernetes do not do the same thing, and in fact they usually work alongside each other in container-based application solutions. Docker, the dominant platform for building and running containers, is distinct from Kubernetes, a container orchestration tool designed to manage containers created with platforms like Docker.

In this article we’ll look at how Docker’s widespread adoption led to the emergence of container orchestration tools such as Kubernetes. These tools aim to automate and centrally manage containerized applications to provide fault tolerance and scalability. Created by Google, Kubernetes excels at handling large numbers of containers and users while managing service discovery, load balancing, multi-platform deployment, and security. So let’s look at exactly what each one does, their background, their benefits, and how they work together.

Understanding Docker

Overview of Docker

As an IT professional or developer, you have probably heard of Docker, and there’s a reason for that. Docker is a highly popular containerization platform: it helps you package applications into standalone containers. What does this mean? Docker lets you bundle your application and everything it needs (libraries, system tools, code, runtime) into a container, a standard unit that can run on any system that supports Docker, regardless of how the host environment is configured.

How Docker Works

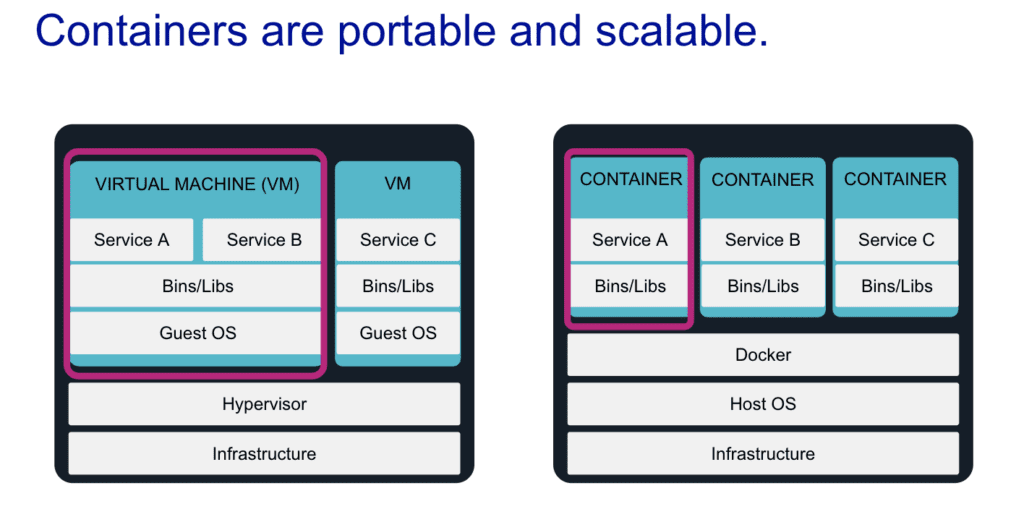

Docker works by bundling applications into containers: lightweight, isolated units that hold everything an application needs to run. This includes system tools, libraries, and the application itself. Unlike virtual machines, containers do not carry their own operating system kernel; they share the kernel of the host. As a result, an application should behave the same way regardless of the environment it is in. Any compatible system equipped with Docker can run the container, which greatly reduces environmental discrepancies.
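
To make the packaging and running steps a little more concrete, here is a minimal Python sketch that only assembles the two Docker CLI commands involved: `docker build` to create an image and `docker run` to start a container from it. The helper names and the image tag `myapp:1.0` are hypothetical, chosen for illustration; the sketch assumes a Dockerfile exists in the build directory and does not execute anything itself.

```python
def build_command(image_tag, context_dir="."):
    """Return the argument list for building an image from the Dockerfile in context_dir."""
    # -t assigns a name:tag to the resulting image.
    return ["docker", "build", "-t", image_tag, context_dir]


def run_command(image_tag, host_port, container_port):
    """Return the argument list for running the image with one published port."""
    # --rm removes the container when it exits; -p maps host_port to container_port.
    return ["docker", "run", "--rm", "-p", f"{host_port}:{container_port}", image_tag]
```

On a machine with Docker installed, each list could be passed to `subprocess.run(cmd, check=True)`. The same two commands would work unchanged on any other host that runs Docker, which is the portability point made above.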

Functions and Features of Docker

Docker offers a number of powerful functions, chief among them the ability to encapsulate a program’s code along with all its dependencies into a single, self-contained entity known as a container. Thanks to this containerization, a Dockerized application is consistent and portable, and its isolation helps with security. Docker containers are isolated from each other and from the host system, which limits the impact a misbehaving container can have on the rest of the environment.

Benefits of Using Docker

Using Docker has many benefits. Firstly, Docker provides a consistent environment for deployment, meaning you can avoid problems such as “it works on my machine”. Secondly, Docker’s containers are isolated from each other, which increases security. Lastly, Docker’s containers are lightweight and efficient when compared to using traditional VMs, as they share the host system’s OS kernel but remain isolated from each other and from the host system.

Common Use Cases of Docker

Docker has many use cases, including simplifying configuration, improving developer productivity, facilitating continuous integration/continuous deployment, and making it easy to implement app isolation. Docker is widely used by site reliability engineers, DevOps teams, developers, testers, and system admins. It’s trusted for its speed, scalability, and developer-friendly nature.

Understanding Kubernetes

Overview of Kubernetes

While Docker and Kubernetes are often mentioned together, Kubernetes is not another containerization platform. It’s a container orchestrator for container platforms like Docker. Developed at Google, Kubernetes has the power to orchestrate and manage containerized applications over a cluster of machines, making the cluster behave like one large machine.

How Kubernetes Works

Kubernetes operates by having the user declare the desired state for the deployed application, after which Kubernetes constantly works to ensure that the current state matches this intended target. Kubernetes achieves this through a simple control loop that checks the status of the deployed application and makes necessary changes if the current state doesn’t match the desired one.
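
The idea of a control loop is easier to see in a few lines of code. The following is a toy sketch, not Kubernetes code: `ToyCluster` and `reconcile` are hypothetical names, and the "cluster" is just an in-memory list of replica names. It shows one pass of the loop, comparing what is running with what was declared and acting on the difference.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """In-memory stand-in for a cluster (an assumption for illustration)."""
    running: list = field(default_factory=list)
    next_id: int = 1

    def start_replica(self):
        name = f"replica-{self.next_id}"
        self.next_id += 1
        self.running.append(name)
        return name

    def stop_replica(self):
        # Stops the most recently started replica.
        return self.running.pop()


def reconcile(cluster, desired_replicas):
    """One pass of the loop: bring the observed state to the desired state."""
    actions = []
    while len(cluster.running) < desired_replicas:
        actions.append(("start", cluster.start_replica()))
    while len(cluster.running) > desired_replicas:
        actions.append(("stop", cluster.stop_replica()))
    return actions
```

Calling `reconcile(cluster, 3)` on an empty cluster starts three replicas. If one of them is then removed from `cluster.running` to simulate a crash, the next call starts exactly one replacement, and a further call does nothing because the two states already match.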

Functions and Features of Kubernetes

Kubernetes has a diverse set of functions and features. It can handle service discovery and manage communication between containers, balance loads, conduct multi-platform deployment, authenticate and ensure security, and work with a massive volume of containers. It provides an efficient method to monitor and regulate clusters of containers over multiple hosts.
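
Service discovery and load balancing can be illustrated with a small sketch. Here a hypothetical `ToyService` keeps a list of container addresses behind one stable name and hands them out in turn. Round-robin is chosen purely for simplicity; this is not a claim about how Kubernetes itself distributes traffic, and the IP addresses are made up.

```python
class ToyService:
    """A stable name in front of a changing set of container addresses."""

    def __init__(self, name):
        self.name = name
        self.endpoints = []
        self._cursor = 0

    def register(self, ip):
        # Called when a new container becomes ready.
        if ip not in self.endpoints:
            self.endpoints.append(ip)

    def deregister(self, ip):
        # Called when a container goes away.
        if ip in self.endpoints:
            self.endpoints.remove(ip)

    def pick(self):
        """Return the next endpoint in round-robin order."""
        if not self.endpoints:
            raise LookupError(f"no endpoints available for {self.name}")
        ip = self.endpoints[self._cursor % len(self.endpoints)]
        self._cursor += 1
        return ip
```

Clients only need to know the service name. Containers can come and go behind it, and callers keep receiving an address that is currently registered.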

Benefits of Using Kubernetes

Using Kubernetes comes with numerous perks. It allows you to easily distribute a large volume of workloads across a large number of containers. The platform is highly flexible and enables you to manage containerized applications according to the desired state defined in your Kubernetes configurations. It also provides capabilities for self-monitoring, self-healing, and automatic rollbacks, which help make applications running on Kubernetes highly available and resilient to failures.

Common Use Cases of Kubernetes

Kubernetes is widely used for deploying distributed systems and microservices, and for managing large numbers of containers. Other common use cases include service discovery and load balancing, automated rollouts and rollbacks, configuration management, and secrets management.

Container Technology and Its Importance

What are Containers?

Containers are a solution to the age-old problem of “it worked on my machine”, which occurs when software works on one machine but not on another due to differences in configuration. Containers encapsulate your application and all its dependencies into one isolated unit which can run smoothly on any system with a containerization platform like Docker.

Role of Containers in Application Development

In application development, containers play a massive role in easing deployment, scaling, and isolation processes. They allow you to package an application along with all its dependencies into a standardized unit that can run on any system with a compatible containerization platform.

Benefits of Using Containers

Containers come with a wide range of benefits. They provide consistency across multiple development and deployment cycles, simplify development and deployment processes, offer an additional layer of security due to their isolated nature, and increase the efficiency of using system resources.

Concept of Container Orchestration

Container orchestration is the automated process of managing, organizing, and controlling large volumes of containers. It’s a crucial process that ensures the efficient operation of containers in a system, handling tasks such as deploying containers, maintaining their redundancy and availability, and adding or removing containers to spread application load evenly across the host infrastructure.
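
As a rough illustration of the scaling part, the sketch below computes how many container replicas to run from the current load. It uses a simple proportional rule, similar in spirit to the one documented for the Kubernetes Horizontal Pod Autoscaler; the function name, the parameters, and the default limits are assumptions made for this example.

```python
import math


def desired_replicas(current_replicas, current_load, target_load,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to load, within fixed bounds.

    current_load and target_load are per-replica averages in the same unit,
    for example CPU utilisation in percent.
    """
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    raw = math.ceil(current_replicas * current_load / target_load)
    # Clamp so a load spike or an idle period cannot push the count out of range.
    return max(min_replicas, min(max_replicas, raw))
```

With four replicas averaging 90% CPU against a 60% target, the rule asks for six replicas; at 30% it asks for two. An orchestrator would then start or remove containers until the running count matches that number.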

Common Container Platforms

Common names in the container ecosystem include Docker, Kubernetes, and Rancher. Docker is used to create and run containers, Kubernetes is a popular orchestration tool used to manage containers created by Docker and other platforms, and Rancher is a platform for managing Kubernetes clusters.

How Docker Contributes to Container Technology

Docker’s Role in Containerization

Docker plays a prominent role in popularizing containerization. Docker containers are lightweight, compact, and quick to start, which is ideal for quick scaling and distributing applications. Its ability to bundle an application, along with necessary libraries and components, into one package contributes massively to the world of container technology.

Unique Selling Points of Docker

Docker’s unique selling points lie in its ease of use, open-source nature, and consistent deployment. Docker enables the applications to be easily distributable and manageable across different platforms, which further contributes to its popularity.

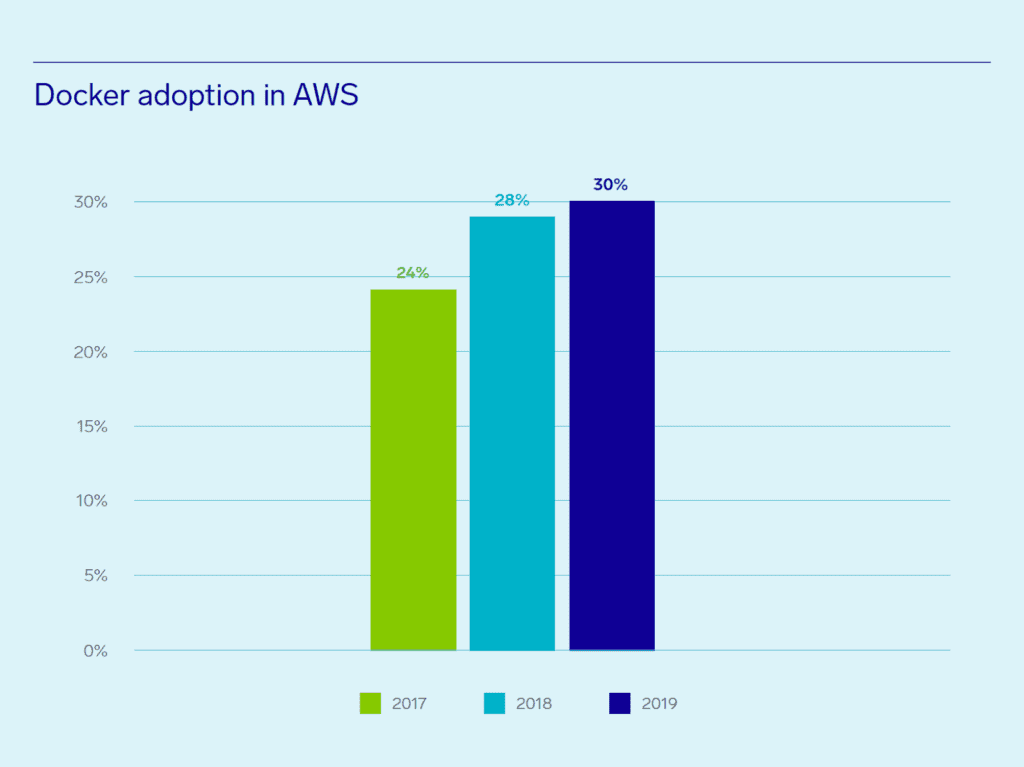

Docker’s Market Dominance

Docker’s market dominance is due to a myriad of reasons. Its open-source nature from its inception has played a major role in its wide adoption. As an open-source solution, the constantly growing Docker community can contribute to the platform, fix bugs, and add new features, making Docker a robust, flexible, and constantly evolving platform.

Docker’s Open-Source Nature

Docker’s open-source nature gives it a unique growth dynamic. The community contributes a vast amount of expertise and a wide range of features, leading to Docker’s rapid development, maintenance, and widespread adoption. It also opens doors for interoperability and flexibility, creating an ecosystem of associated Docker tools.

Introduction and Role of Container Orchestration Tools

What is Container Orchestration?

Container orchestration is the automated process of deploying, scaling, networking, and managing containers. It’s essential to have an orchestration tool when you’re dealing with multiple containers, and that’s where tools like Docker Swarm, Kubernetes, and Mesos step in.

Need for Orchestration Tools

Orchestration tools are needed to efficiently manage and scale the containers. They allow for automated scheduling and deployment of containers, seamless scaling, and handy recovery features. All of these are vital when working with large volumes of containers across multiple platforms.

Different Orchestration Tools

Various orchestration tools exist in the market. Docker Swarm, Kubernetes, and Mesos are among the most popular. Docker Swarm is Docker’s native clustering and scheduling tool, Kubernetes was developed by Google, and Mesos is an Apache Software Foundation project.

How Orchestration Tools Work

Orchestration tools regulate the life-cycle of containers. They keep software versions synchronized across multiple nodes, scale applications based on workload, and can manage stateful services such as databases. They also offer resilience testing and services like load balancing, visibility, and monitoring.

How Kubernetes Contributes to Container Orchestration

Kubernetes’ Role in Orchestration

Among all orchestration tools available, Kubernetes has taken the lead in container orchestration. It offers a comprehensive solution to manage containers’ lifecycle across a fleet of hosts at scale, offering major features like service discovery, load balancing, and secrets management.

Unique Selling Points of Kubernetes

The unique selling points of Kubernetes include its wide-ranging community support, scalability, and portability. The Kubernetes ecosystem has a wide range of third-party tools and plugins due to its open-source nature. Kubernetes can also handle and manage a large number of containers irrespective of the complexity of your apps, making it highly scalable. Moreover, it can run on virtually any public, private, or hybrid cloud, providing unparalleled portability.

Kubernetes’ Market Dominance

Kubernetes’ market dominance can be attributed to Google’s reputation and the tool’s robustness, scalability, and resilience. Its widespread adoption, extensive community support, and regular updates have made it the choice of businesses and organizations for managing containerized applications.

Background of Kubernetes Development at Google

Kubernetes was developed by Google, a company that arguably has the most experience in operating containers at scale. Leveraging years of expertise in running billions of containers a week, Google open-sourced Kubernetes in 2014, and it has been growing rapidly ever since.

Exploring the Kubernetes Architecture

Kubernetes Control Loop

At the heart of Kubernetes lies a simple control loop. Users declare how they want their system to look, and Kubernetes acts to make it happen. The control loop constantly monitors for changes in the current status and works tirelessly to ensure the current state matches the one desired.

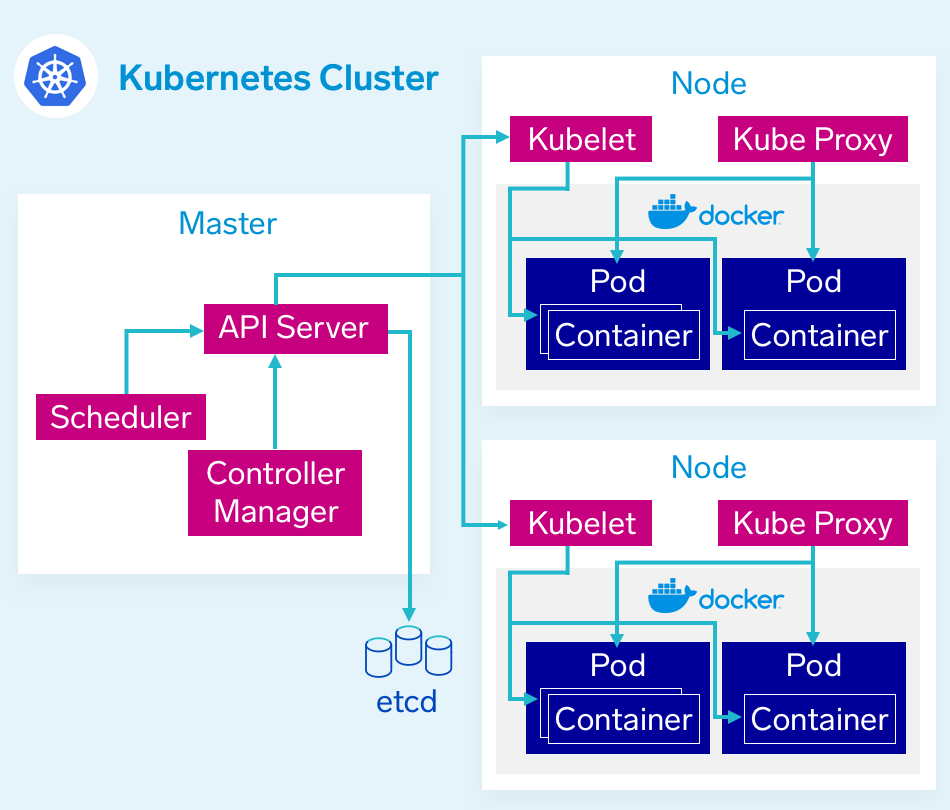

Components of Kubernetes Architecture

Key components in Kubernetes architecture encompass the Control Plane, Nodes, and Pods. The Control Plane maintains the desired state, the Nodes host running applications, and the Pods are the smallest deployment units in the Kubernetes ecosystem.

Understanding the Control Plane

The Control Plane maintains the cluster’s desired state. It includes several components, such as the API Server that accepts and processes commands, the Controller Manager that reconciles the current state with the desired state, the Scheduler that assigns Pods to Nodes, and etcd which stores configuration data.
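
To give a feel for what "assigning Pods to Nodes" involves, here is a deliberately simplified scheduling sketch. It filters out nodes that lack enough free CPU and then picks the node with the most room left. The real Kubernetes scheduler weighs many more criteria; the `schedule` function, the node and Pod names, and the millicore figures are all hypothetical.

```python
def schedule(pods, nodes):
    """Assign each pod to a node with enough free CPU.

    pods:  dict of pod name -> CPU request (millicores)
    nodes: dict of node name -> free CPU (millicores)
    Returns a dict of pod name -> node name, or None if nothing fits.
    """
    free = dict(nodes)  # work on a copy so the caller's data is untouched
    placement = {}
    for pod, request in pods.items():
        # Filter step: keep only nodes that can fit the request.
        candidates = [name for name, cpu in free.items() if cpu >= request]
        if not candidates:
            placement[pod] = None  # stays unscheduled
            continue
        # Score step: prefer the node with the most free CPU.
        best = max(candidates, key=lambda name: free[name])
        free[best] -= request
        placement[pod] = best
    return placement
```

A Pod that fits nowhere is left unassigned rather than squeezed onto an overloaded node, which mirrors how a Pod can remain pending until capacity becomes available.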

Understanding Nodes and Pods

Nodes are worker machines that run applications. Each node runs the kubelet service, which is responsible for maintaining the Pods on that node. Pods are the smallest deployment units in Kubernetes and contain one or more closely related containers. Each Pod has a unique IP address within the cluster, which allows Pods to communicate with one another, while the containers inside a Pod share its resources.

How Kubernetes Handles Deployment and Management

Kubernetes facilitates deployment and management through its architecture. It continually works to keep the current state in line with the desired state declared in Kubernetes configurations. Kubernetes handles rollouts and updates, automates rollbacks, scales applications, and provides self-healing for Pods.
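
The rollout and rollback behaviour described above can be sketched as follows. This toy function replaces replicas one at a time and restores the original versions if a health check fails. It is an illustration of the idea only; `rolling_update` and the `is_healthy` callback are hypothetical and do not correspond to any Kubernetes API.

```python
def rolling_update(replicas, new_version, is_healthy):
    """Replace replicas one by one; restore the original list on failure.

    replicas:   list of version strings currently running
    is_healthy: callable(version, index) -> bool, a stand-in health check
    Returns (resulting_replicas, succeeded).
    """
    original = list(replicas)
    updated = list(replicas)
    for index in range(len(updated)):
        updated[index] = new_version
        if not is_healthy(new_version, index):
            # Roll back: discard the partial update and keep what was running.
            return original, False
    return updated, True
```

Because only one replica changes at a time, the remaining replicas keep serving traffic during the update, and a bad release is caught before it replaces everything.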

Distinct Differences Between Docker and Kubernetes

Comparative Analysis of Docker and Kubernetes

There are fundamental differences between Docker and Kubernetes, even though the two are frequently discussed together. Docker is a platform that containerizes applications, while Kubernetes is an orchestration tool that manages containers. Docker excels at creating and running containers, and Kubernetes excels at coordinating and scheduling them.

How Docker and Kubernetes Work Together

Docker and Kubernetes are not direct competitors, but complementary technologies. Docker creates and packages containers making them lightweight, portable and consistent, whereas Kubernetes manages these containers at a larger scale ensuring high availability and network efficiency.

When to Use Docker vs Kubernetes

While Docker is ideal for creating and running individual containers, Kubernetes shines when it’s time to manage clusters of them. So, you’d use Docker when the scope is limited to individual containers, but you’d need Kubernetes when dealing with many containers that require management, redundancy, and scaling.

Docker Swarm vs Kubernetes

While Docker Swarm is Docker’s native tool for managing Docker containers, Kubernetes is more powerful and functional, especially in production environments. While Swarm may be simpler and easier to use, Kubernetes wins in scalability and multi-cloud capability.

Future Outlook and Recommendations

Current Trends in Docker and Kubernetes

Both Docker and Kubernetes continue to evolve rapidly. A significant trend is the focus on security. The need for secure containerization is paramount, and both technologies are making strides to enhance their security features in response to this demand.

Future Developments in Containerization and Orchestration

The future holds significant advancements in both containerization and orchestration. The emphasis is on creating smart platforms that not only provide containerization but do so in a secure, efficient, and scalable manner. Orchestration tools are evolving to offer more sophisticated management features at larger scales.

Recommendations for Potential Users of Docker and Kubernetes

For both newbies and professionals in the field, the recommendation is to start with Docker, for its simplicity and popularity, and, once familiar with its workings, to incorporate Kubernetes into your development and deployment procedures for improved scalability and management. A good starting point is to set up and run a local Kubernetes cluster. Always keep an eye on evolving trends and advancements. Use proper monitoring services such as Kubecost to get the most out of these technologies. If you need a Kubernetes solution for your application but don’t have the team to implement it, talk to us today about our managed Kubernetes service.