Key Takeaways

Utilizing GPUs efficiently in AI workloads can significantly reduce costs and improve performance.

Google Kubernetes Engine (GKE) offers features like multi-instance GPUs and time-sharing to optimize GPU usage.

Assessing your specific GPU needs is crucial for effective resource management.

Setting up GKE for AI workloads involves choosing between Autopilot and Standard mode and configuring GPU nodes correctly.

Monitoring GPU performance ensures you can make necessary adjustments for optimal utilization.

Optimizing GPU Utilization with Google Kubernetes Engine in AI Workloads

Introduction to GPU Utilization in AI

In artificial intelligence (AI), GPUs (Graphics Processing Units) play a pivotal role. They execute thousands of operations in parallel, which makes them well suited to AI tasks such as training models and running complex computations. However, simply having a GPU isn’t enough; you need to use it efficiently to get the best results.

Why GPU Optimization is Important in AI

Optimizing GPU utilization matters for two main reasons. First, GPUs are expensive: an underutilized GPU is money spent on capacity you don’t use. Second, efficient GPU usage can substantially speed up AI workloads, leading to quicker insights and faster product development.

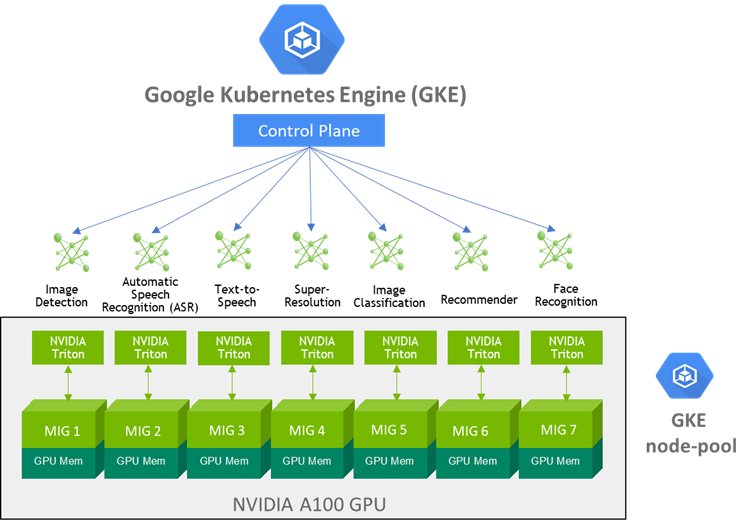

“NVIDIA A100 Multi-Instance GPUs …” from developer.nvidia.com and used with no modifications.

Challenges in GPU Utilization for AI Workloads

High Costs of Underutilized Resources

One of the biggest challenges is the high cost associated with underutilized GPUs. When a GPU is not fully utilized, you’re paying for power and performance that you aren’t using. This inefficiency can quickly add up, especially in large-scale AI projects.

Difficulty in Balancing Workloads

Balancing workloads across multiple GPUs can be tricky. AI tasks often have varying computational requirements, making it challenging to allocate resources effectively. This imbalance can lead to some GPUs being overworked while others remain idle.

Technical Complications and GPU Management

Managing GPUs in a Kubernetes environment adds another layer of complexity. From driver installations to workload scheduling, there are numerous technical aspects that need to be handled correctly to ensure optimal GPU utilization.

Google Kubernetes Engine (GKE) Solutions for GPU Optimization

Google Kubernetes Engine (GKE) offers several features designed to improve GPU utilization for AI workloads. They help keep expensive GPUs busy, which reduces costs and improves performance. Let’s look at the specific solutions GKE provides.

Overview of GKE

GKE is a managed Kubernetes service that allows you to run containerized applications in the cloud. It simplifies the management of Kubernetes clusters, including the deployment, scaling, and operations of applications. GKE also supports GPUs, enabling you to leverage powerful hardware for demanding AI tasks.

Multi-instance GPUs

Multi-instance GPUs (MIG) let you partition a single physical GPU into several smaller, hardware-isolated instances, each with its own dedicated compute, memory, and cache. You can then run several smaller workloads on one GPU, maximizing its utilization. For example, an NVIDIA A100 with 40 GB of memory can be split into up to seven 1g.5gb instances (about 5 GB each), three 2g.10gb instances, or two 3g.20gb instances. MIG is particularly useful for AI workloads that don’t need the full capacity of a GPU.
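To make the packing arithmetic concrete, here is a minimal Python sketch. It assumes an NVIDIA A100 40GB and the maximum instance counts for its MIG profiles (seven 1g.5gb, three 2g.10gb, two 3g.20gb, one 7g.40gb). The helper names and the 4 GB workload are hypothetical, and the sizes are nominal; usable memory per instance is slightly lower.

```python
import math
from typing import Tuple

# A100 40GB MIG profiles: name -> (nominal memory in GB, max instances per GPU).
A100_40GB_MIG = {
    "1g.5gb": (5, 7),
    "2g.10gb": (10, 3),
    "3g.20gb": (20, 2),
    "7g.40gb": (40, 1),
}

def smallest_profile(mem_gb: float) -> str:
    """Smallest profile whose nominal memory covers mem_gb."""
    for name, (profile_mem, _) in sorted(A100_40GB_MIG.items(), key=lambda kv: kv[1][0]):
        if mem_gb <= profile_mem:
            return name
    raise ValueError(f"{mem_gb} GB does not fit on one A100 40GB")

def gpus_needed(replicas: int, mem_gb: float) -> Tuple[str, int]:
    """Physical GPUs needed when each replica gets its own MIG instance."""
    profile = smallest_profile(mem_gb)
    per_gpu = A100_40GB_MIG[profile][1]
    return profile, math.ceil(replicas / per_gpu)

# Ten hypothetical inference replicas, each needing about 4 GB.
print(gpus_needed(replicas=10, mem_gb=4))  # ('1g.5gb', 2)
```

Without MIG, the same ten replicas would each occupy a whole GPU. The sketch uses one profile per GPU, which matches how GKE sets a single partition size per node pool.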

Time-sharing GPUs

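Time-sharing lets multiple containers share a single physical GPU by taking turns: the GPU switches between them in short time slices. In GKE, you set the maximum number of containers that can share each GPU when you create the node pool. Time-sharing does not partition GPU memory, so it suits bursty or mostly idle workloads, such as low-traffic inference or interactive notebooks, rather than workloads that each need the GPU continuously. By letting different tasks use the GPU at different times, you keep it busy more of the time and improve its utilization.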
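A minimal sketch of a Pod that targets a time-sharing node pool, built as a Python dictionary and printed as JSON. It assumes the node labels GKE applies to time-sharing nodes (cloud.google.com/gke-gpu-sharing-strategy and cloud.google.com/gke-max-shared-clients-per-gpu); the pod name, container name, and image are placeholders, and the label names should be checked against current GKE documentation.

```python
import json

def time_shared_gpu_pod(name: str, image: str, clients_per_gpu: int) -> dict:
    """Build a Pod manifest that lands on a GKE time-sharing GPU node.

    Each container still asks for one nvidia.com/gpu; on a time-sharing
    node that unit is one shared slot rather than a whole physical GPU.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Assumed GKE node labels for time-sharing node pools.
            # Label values must be strings, hence str().
            "nodeSelector": {
                "cloud.google.com/gke-gpu-sharing-strategy": "time-sharing",
                "cloud.google.com/gke-max-shared-clients-per-gpu": str(clients_per_gpu),
            },
            "containers": [{
                "name": "inference",
                "image": image,
                "resources": {"limits": {"nvidia.com/gpu": 1}},
            }],
        },
    }

# "your-inference-image" is a placeholder image name.
print(json.dumps(time_shared_gpu_pod("shared-gpu-pod", "your-inference-image", 3), indent=2))
```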

Steps to Optimize GPU Utilization with GKE

Optimizing GPU utilization in GKE involves several steps, from assessing your needs to monitoring performance. By following these steps, you can ensure that your GPUs are used efficiently, reducing costs and improving performance.

Assessing Your GPU Needs

Before you start, it’s crucial to assess your GPU needs. Determine the computational requirements of your AI workloads and estimate the number of GPUs you’ll need. Consider factors like the size of your datasets, the complexity of your models, and the expected workload.
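A rough memory estimate is a useful starting point for this assessment. The sketch below relies on common rules of thumb, not measurements: about 2 bytes per parameter for fp16 inference weights and about 16 bytes per parameter for mixed-precision training with the Adam optimizer, plus an assumed 20% overhead. The 7-billion-parameter model and the function names are hypothetical. Activation memory depends on batch size and sequence length, so profile real workloads before committing to hardware.

```python
import math

# Rough bytes per parameter; rules of thumb that exclude activations.
BYTES_PER_PARAM = {
    "inference_fp16": 2,        # fp16/bf16 weights only
    "inference_fp32": 4,        # fp32 weights only
    "training_adam_mixed": 16,  # fp16 weights and grads, fp32 master weights, two Adam moments
}

def estimate_memory_gb(params: float, mode: str, overhead: float = 1.2) -> float:
    """Estimated GPU memory in GB; `overhead` is an assumed 20% allowance for
    activations, the CUDA context, and framework buffers."""
    return params * BYTES_PER_PARAM[mode] * overhead / 1e9

def gpus_for(params: float, mode: str, gpu_mem_gb: float) -> int:
    """Minimum GPU count, assuming memory can be sharded evenly across GPUs."""
    return math.ceil(estimate_memory_gb(params, mode) / gpu_mem_gb)

# A hypothetical 7-billion-parameter model and 40 GB GPUs.
print(round(estimate_memory_gb(7e9, "inference_fp16"), 1))  # 16.8
print(gpus_for(7e9, "training_adam_mixed", 40))             # 4
```

By this estimate, fp16 inference fits on a single 24 GB or 40 GB GPU, while training needs several GPUs plus a sharding technique such as FSDP or ZeRO.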

Setting Up GKE for AI Workloads

Once you have a clear understanding of your GPU needs, the next step is to set up GKE for your AI workloads. This involves selecting the right mode (Autopilot or Standard) and configuring your GPU nodes. Autopilot mode is fully managed, making it easier to set up and manage, while Standard mode offers more customization options.

To set up GKE for AI workloads, follow these steps:

Create a GKE cluster with GPU nodes.

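Install the NVIDIA GPU drivers, or let GKE install them automatically (Autopilot always does; in Standard mode you can opt in when creating the node pool).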

Configure your Kubernetes pods to use GPUs.
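For a Standard cluster, creating GPU nodes and installing drivers can be combined into a single node-pool command. This Python sketch only assembles the gcloud container node-pools create arguments for an existing cluster; the pool, cluster, and zone names are hypothetical, gpu-driver-version=default asks GKE to install drivers automatically, and gpu-partition-size shows how a MIG partition would be requested. Check the flags against the current gcloud reference before running the printed command. In Autopilot you don’t create node pools; GKE provisions GPU nodes from the pods’ requests.

```python
import shlex
from typing import Dict, List, Optional

def gpu_node_pool_cmd(pool: str, cluster: str, zone: str, machine_type: str,
                      gpu_type: str, gpu_count: int = 1, num_nodes: int = 1,
                      extra_accel: Optional[Dict[str, str]] = None) -> List[str]:
    """Assemble (but do not run) a `gcloud container node-pools create` command."""
    accel = {
        "type": gpu_type,
        "count": str(gpu_count),
        # Ask GKE to install its default NVIDIA driver version on the nodes.
        "gpu-driver-version": "default",
    }
    accel.update(extra_accel or {})  # e.g. MIG or time-sharing options
    accel_flag = ",".join(f"{key}={value}" for key, value in accel.items())
    return [
        "gcloud", "container", "node-pools", "create", pool,
        f"--cluster={cluster}",
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        f"--num-nodes={num_nodes}",
        f"--accelerator={accel_flag}",
    ]

# Hypothetical names; a2-highgpu-1g is the A2 machine type with one A100 40GB GPU.
cmd = gpu_node_pool_cmd("a100-mig-pool", "ai-cluster", "us-central1-a",
                        "a2-highgpu-1g", "nvidia-tesla-a100",
                        extra_accel={"gpu-partition-size": "1g.5gb"})
print(shlex.join(cmd))
```

For a time-sharing pool you would instead pass extra_accel={"gpu-sharing-strategy": "time-sharing", "max-shared-clients-per-gpu": "3"}.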

Deploying GPU Workloads in GKE

Deploying GPU workloads in GKE involves creating Kubernetes pods that request GPU resources. You specify the number of GPUs each container needs under resources.limits, using the nvidia.com/gpu resource name; Kubernetes uses the limit as the request, and the scheduler places the pod only on a node with enough free GPUs.

Here is an example of a Kubernetes pod configuration that requests a GPU:

{
  "apiVersion": "v1",
  "kind": "Pod",
  "metadata": { "name": "gpu-pod" },
  "spec": {
    "containers": [
      {
        "name": "gpu-container",
        "image": "your-ai-workload-image",
        "resources": {
          "limits": { "nvidia.com/gpu": 1 }
        }
      }
    ]
  }
}
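Kubernetes applies a few rules to GPU resources: they go in limits as whole numbers, and if requests also lists nvidia.com/gpu, it must equal the limit because GPUs cannot be overcommitted. The hypothetical Python helper below checks a manifest like the one above against those rules before you apply it; it is a local sanity check, not a replacement for the API server’s validation.

```python
from typing import Any, Dict, List

GPU = "nvidia.com/gpu"

def gpu_resource_errors(pod: Dict[str, Any]) -> List[str]:
    """Return rule violations for nvidia.com/gpu in each container of a Pod manifest."""
    errors = []
    for c in pod["spec"]["containers"]:
        res = c.get("resources", {})
        limit = res.get("limits", {}).get(GPU)
        request = res.get("requests", {}).get(GPU)
        if limit is None:
            if request is not None:
                errors.append(f"{c['name']}: GPU request set without a limit")
            continue  # container does not use GPUs
        if not str(limit).isdigit() or int(limit) < 1:
            errors.append(f"{c['name']}: GPU limit must be a positive whole number, got {limit!r}")
        elif request is not None and (not str(request).isdigit() or int(request) != int(limit)):
            errors.append(f"{c['name']}: GPU request {request!r} must equal limit {limit!r}")
    return errors

# The gpu-pod manifest as a Python dict.
pod = {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": "gpu-pod"},
       "spec": {"containers": [{"name": "gpu-container", "image": "your-ai-workload-image",
                                "resources": {"limits": {GPU: 1}}}]}}
print(gpu_resource_errors(pod))  # []

bad = {"spec": {"containers": [{"name": "c", "resources": {"limits": {GPU: 1}, "requests": {GPU: 2}}}]}}
print(gpu_resource_errors(bad))  # ['c: GPU request 2 must equal limit 1']
```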

Monitoring GPU Performance

Monitoring GPU performance tells you whether your GPUs are actually being used efficiently. NVIDIA Data Center GPU Manager (DCGM) exposes detailed GPU metrics, and GKE can be configured to collect DCGM metrics into Cloud Monitoring. Watching metrics such as GPU utilization, memory usage, and temperature lets you spot idle or overloaded GPUs and adjust accordingly.
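As an illustration, here is a minimal Python sketch that reads the Prometheus text format served by NVIDIA’s dcgm-exporter and groups three metrics by GPU: DCGM_FI_DEV_GPU_UTIL (utilization, %), DCGM_FI_DEV_FB_USED (framebuffer memory used, MiB), and DCGM_FI_DEV_GPU_TEMP (°C). The sample text and its values are made up, and the 20% cut-off for "underutilized" is an arbitrary assumption.

```python
import re
from collections import defaultdict
from typing import Dict

# dcgm-exporter metric name -> short key used below
METRICS = {
    "DCGM_FI_DEV_GPU_UTIL": "util_pct",      # GPU utilization, %
    "DCGM_FI_DEV_FB_USED": "mem_used_mib",   # framebuffer memory used, MiB
    "DCGM_FI_DEV_GPU_TEMP": "temp_c",        # GPU temperature, degrees C
}
SAMPLE_LINE = re.compile(r'^(\w+)\{([^}]*)\}\s+(\S+)$')
LABEL = re.compile(r'(\w+)="([^"]*)"')

def parse_dcgm(text: str) -> Dict[str, Dict[str, float]]:
    """Group selected DCGM metrics by the `gpu` label from Prometheus text output."""
    gpus: Dict[str, Dict[str, float]] = defaultdict(dict)
    for line in text.splitlines():
        m = SAMPLE_LINE.match(line.strip())
        if not m or m.group(1) not in METRICS:
            continue  # skips "# HELP"/"# TYPE" comments and unrelated metrics
        labels = dict(LABEL.findall(m.group(2)))
        gpus[labels.get("gpu", "?")][METRICS[m.group(1)]] = float(m.group(3))
    return dict(gpus)

# Made-up sample in the exporter's text format (labels trimmed).
sample = """\
# HELP DCGM_FI_DEV_GPU_UTIL GPU utilization (in %).
# TYPE DCGM_FI_DEV_GPU_UTIL gauge
DCGM_FI_DEV_GPU_UTIL{gpu="0",modelName="NVIDIA A100-SXM4-40GB"} 12
DCGM_FI_DEV_GPU_UTIL{gpu="1",modelName="NVIDIA A100-SXM4-40GB"} 91
DCGM_FI_DEV_FB_USED{gpu="0",modelName="NVIDIA A100-SXM4-40GB"} 2048
DCGM_FI_DEV_GPU_TEMP{gpu="1",modelName="NVIDIA A100-SXM4-40GB"} 71
"""
stats = parse_dcgm(sample)
underused = [gpu for gpu, m in stats.items() if m.get("util_pct", 100) < 20]
print(stats)
print("underutilized GPUs:", underused)  # underutilized GPUs: ['0']
```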

Setting up alerts on critical metrics, such as sustained low utilization or high temperature, helps you identify and address issues quickly.
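In production you would normally express alerts as Cloud Monitoring alerting policies or Prometheus alert rules. The Python sketch below only shows the underlying logic: alert when a condition holds for several consecutive samples, so a single dip or spike does not trigger a page. The thresholds (under 20% utilization for 6 samples, above 85 °C for 3 samples) and the 5-minute interval are hypothetical.

```python
from typing import List, Sequence

def sustained(values: Sequence[float], threshold: float, window: int, above: bool = False) -> bool:
    """True if each of the last `window` samples is below (or, with above=True, above) threshold."""
    recent = list(values)[-window:]
    if len(recent) < window:
        return False  # not enough history yet
    if above:
        return all(v > threshold for v in recent)
    return all(v < threshold for v in recent)

def gpu_alerts(util_pct: Sequence[float], temp_c: Sequence[float]) -> List[str]:
    alerts = []
    # Hypothetical policy: under 20% utilization for 6 consecutive samples
    # (30 minutes at an assumed 5-minute interval) suggests wasted capacity.
    if sustained(util_pct, 20, window=6):
        alerts.append("low-utilization")
    # Hypothetical policy: above 85 degrees C for 3 consecutive samples.
    if sustained(temp_c, 85, window=3, above=True):
        alerts.append("high-temperature")
    return alerts

print(gpu_alerts([65, 40, 15, 10, 8, 12, 5, 9], [70, 72, 88, 90, 84]))  # ['low-utilization']
```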

Best Practices for Managing GPU Utilization

Managing GPU utilization effectively requires following best practices. These practices ensure that your GPUs are used efficiently, reducing costs and improving performance.

Choosing Right-sized GPU Acceleration

Choosing the right-sized GPU acceleration for your workloads is crucial. Over-provisioning GPUs can lead to underutilization and increased costs, while under-provisioning can result in performance bottlenecks. Therefore, it’s essential to match the GPU resources to your workload requirements.

Here are some tips for choosing the right-sized GPU acceleration:

Analyze the computational requirements of your workloads.

Choose GPUs with the appropriate memory and processing power.

Consider using multi-instance GPUs for smaller workloads.
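One way to apply these tips is to pick the smallest option whose memory covers the workload plus some headroom. This Python sketch assumes nominal memory sizes for a few GKE accelerator types (T4 16 GB, L4 24 GB, A100 40 GB and 80 GB) and for A100 40GB MIG partitions. The 10% headroom is an assumption, and the sketch ignores compute throughput and price, which matter just as much in practice.

```python
# Nominal memory (GB) per option. MIG entries are partitions of an A100 40GB.
OPTIONS = {
    "nvidia-tesla-a100 / 1g.5gb": 5,
    "nvidia-tesla-a100 / 2g.10gb": 10,
    "nvidia-tesla-t4": 16,
    "nvidia-tesla-a100 / 3g.20gb": 20,
    "nvidia-l4": 24,
    "nvidia-tesla-a100": 40,
    "nvidia-a100-80gb": 80,
}

def right_size(need_gb: float, headroom: float = 0.1) -> str:
    """Option with the least memory that still covers need_gb plus headroom."""
    target = need_gb * (1 + headroom)
    fits = [(mem, name) for name, mem in OPTIONS.items() if mem >= target]
    if not fits:
        raise ValueError(f"{target:.1f} GB exceeds one GPU; consider sharding the model")
    return min(fits)[1]

for need in (4, 14, 30):
    print(need, "GB ->", right_size(need))
```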

Implementing GPU Sharing Strategies

GPU sharing strategies help you make the most of your GPU resources. Letting multiple workloads share a single GPU keeps it busy more of the time, which raises its utilization.

Some effective GPU sharing strategies include:

Using multi-instance GPUs to partition a single GPU into multiple instances.

Time-sharing GPUs to allow different workloads to use the GPU at different times.

Leveraging NVIDIA Multi-Process Service (MPS) to run kernels from multiple processes concurrently on a single GPU.
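The choice between these strategies can be summarized as a rule of thumb. The sketch below is a hypothetical decision helper, not a GKE API: it uses MIG when a workload needs isolation and the GPU supports it, MPS when many small kernels from different processes could overlap, and time-sharing for bursty or mostly idle work. Real decisions also depend on latency targets and the GPU models available.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    needs_isolation: bool                 # must be protected from neighbours' memory use and faults
    mig_capable_gpu: bool                 # e.g. A100 or H100
    many_small_concurrent_kernels: bool   # small CUDA jobs that could overlap
    bursty_or_mostly_idle: bool

def pick_sharing_strategy(w: Workload) -> str:
    """Rule-of-thumb mapping from workload traits to a GPU sharing strategy."""
    if w.needs_isolation:
        # Only MIG gives hardware isolation; otherwise keep the GPU to yourself.
        return "multi-instance GPU" if w.mig_capable_gpu else "dedicated GPU"
    if w.many_small_concurrent_kernels:
        return "MPS"            # kernels from several processes run at the same time
    if w.bursty_or_mostly_idle:
        return "time-sharing"   # containers take turns using the whole GPU
    return "dedicated GPU"      # sharing is unlikely to help

notebook = Workload(needs_isolation=False, mig_capable_gpu=False,
                    many_small_concurrent_kernels=False, bursty_or_mostly_idle=True)
print(pick_sharing_strategy(notebook))  # time-sharing
```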

Managing GPU Stack through NVIDIA GPU Operator

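The NVIDIA GPU Operator automates management of the GPU software stack in Kubernetes. It deploys components such as the NVIDIA driver, the NVIDIA Container Toolkit, the Kubernetes device plugin that advertises GPUs to the scheduler, and the DCGM exporter for monitoring. On GKE, it is an alternative to GKE’s built-in driver and device-plugin management.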

To manage your GPU stack using the NVIDIA GPU Operator, follow these steps:

Install the NVIDIA GPU Operator in your GKE cluster.

Configure the operator to manage your GPU resources.

Monitor and manage GPU performance using the operator’s tools.
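A quick way to verify the setup is to confirm that every GPU node advertises nvidia.com/gpu in its allocatable resources, which happens once the device plugin is running. This Python sketch parses the output of kubectl get nodes -o json; the embedded sample is trimmed and the node names are hypothetical.

```python
import json
from typing import Dict

def allocatable_gpus(nodes_json: str) -> Dict[str, int]:
    """Map each node name to its allocatable nvidia.com/gpu count (0 if absent)."""
    counts = {}
    for node in json.loads(nodes_json)["items"]:
        allocatable = node.get("status", {}).get("allocatable", {})
        # Kubernetes reports resource quantities as strings, e.g. "1".
        counts[node["metadata"]["name"]] = int(allocatable.get("nvidia.com/gpu", "0"))
    return counts

# Trimmed, hypothetical output of: kubectl get nodes -o json
# Both nodes are meant to be GPU nodes; gpu-node-2 has not advertised its GPU yet.
sample = json.dumps({"items": [
    {"metadata": {"name": "gpu-node-1"},
     "status": {"allocatable": {"cpu": "7910m", "nvidia.com/gpu": "1"}}},
    {"metadata": {"name": "gpu-node-2"},
     "status": {"allocatable": {"cpu": "7910m"}}},
]})

counts = allocatable_gpus(sample)
missing = [name for name, n in counts.items() if n == 0]
print(counts)  # {'gpu-node-1': 1, 'gpu-node-2': 0}
print("nodes without GPUs advertised:", missing)
```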

Case Studies: Real-life Examples

To better understand how optimizing GPU utilization with Google Kubernetes Engine (GKE) can make a significant impact, let’s look at two real-life case studies. These examples illustrate the practical benefits of using GKE’s advanced features for AI workloads.

Case Study 1: Efficient AI Model Training

A leading tech company was facing challenges with training their AI models efficiently. They had multiple teams working on different models, each requiring substantial GPU resources. However, their existing setup led to underutilized GPUs and high operational costs.

By implementing GKE with multi-instance GPUs, the company was able to partition their GPUs into smaller instances. This allowed multiple teams to run their training jobs concurrently on the same physical GPU, maximizing its utilization.

As a result, the company saw a 30% reduction in GPU-related costs and a 25% increase in training speed. The efficient use of resources also enabled faster iteration cycles, leading to quicker model improvements and a competitive edge in the market.

Case Study 2: Scalable AI Inference

An AI-driven startup specializing in real-time image recognition needed a scalable solution for their inference workloads. Their application required high GPU availability to process incoming data streams, but their existing infrastructure couldn’t scale efficiently.

By leveraging GKE’s time-sharing GPU feature, the startup was able to dynamically allocate GPU resources based on real-time demand. This approach ensured that GPUs were always in use, even during fluctuating workload periods.

The implementation led to a 40% improvement in resource utilization and a 20% decrease in latency. The startup was able to handle increased traffic without compromising on performance, enabling them to scale their services and attract more customers.

Conclusion

Optimizing GPU utilization in AI workloads is essential for reducing costs and improving performance. Google Kubernetes Engine (GKE) offers powerful features like multi-instance GPUs and time-sharing to help you achieve this goal. By assessing your GPU needs, setting up GKE correctly, deploying workloads efficiently, and monitoring performance, you can keep your GPUs working close to their full potential.

Following best practices such as choosing the right-sized GPU acceleration, implementing GPU sharing strategies, and managing your GPU stack through the NVIDIA GPU Operator can further enhance your resource utilization. Real-life examples demonstrate the tangible benefits of these approaches, showcasing how companies can achieve significant cost savings and performance gains.

Frequently Asked Questions (FAQ)

Here are some common questions about optimizing GPU utilization with GKE and their answers to help you get started.

How does GKE help in reducing GPU costs?

GKE helps reduce GPU costs by offering features like multi-instance GPUs and time-sharing, which maximize GPU utilization. These features allow you to run multiple workloads on a single GPU, ensuring that its resources are fully used and minimizing waste.

What are the advantages of using multi-instance GPUs?

Multi-instance GPUs allow you to partition a single GPU into smaller instances. This is beneficial for running multiple lightweight AI tasks simultaneously, ensuring that the GPU’s resources are fully utilized. It helps in reducing costs and improving the efficiency of AI workloads.

Multi-instance GPUs also improve resource management and flexibility, because you can allocate just the right amount of GPU capacity to each task.

Can multiple containers share a single GPU in GKE?

Yes, multiple containers can share a single GPU in GKE using time-sharing or multi-instance GPUs. Time-sharing allows different containers to use the GPU at different times, while multi-instance GPUs partition the GPU into smaller instances that can be used concurrently.

Time-sharing GPUs enable efficient use of GPU resources during varying workload demands.

Multi-instance GPUs allow concurrent execution of multiple tasks on a single GPU.

How do I monitor GPU performance in GKE?

You can monitor GPU performance in GKE with tools like NVIDIA Data Center GPU Manager (DCGM), which reports GPU utilization, memory usage, and temperature so you can make adjustments for optimal performance.

Setting up alerts for critical metrics helps you identify and address issues quickly, and regular analysis of GPU performance data leads to continuous improvements in resource utilization.