Key Takeaways

- Kubernetes namespaces provide the foundation for multi-tenancy but require additional security configurations for true workload isolation

- Combining namespaces with RBAC, network policies, and resource quotas creates a robust isolation architecture

- Implementation requires a systematic approach across five key areas: namespace creation, access control, network boundaries, resource limits, and security standards

- Different isolation patterns (soft, hard, and hybrid) can be implemented depending on organizational needs and security requirements

- Advanced techniques like service meshes and policy controllers can further enhance isolation in production environments

Kubernetes has revolutionized how organizations deploy applications, but sharing cluster resources between teams, departments, or customers introduces significant security and operational challenges. Creating truly isolated environments within a single Kubernetes cluster requires more than just basic namespace separation—it demands a comprehensive approach to resource management, access control, and network security.

Multi-tenancy in Kubernetes allows multiple users or workloads to coexist on the same infrastructure while maintaining logical separation. When implemented correctly, it delivers cost savings through efficient resource utilization while preventing noisy neighbor problems, unauthorized access, and potential security breaches. SlickFinch, a leading provider of multi-tenancy architecture solutions for Kubernetes, specializes in solutions that make implementing proper isolation significantly easier for platform teams.

Kubernetes Multi-Tenancy Explained: Why Isolation Matters

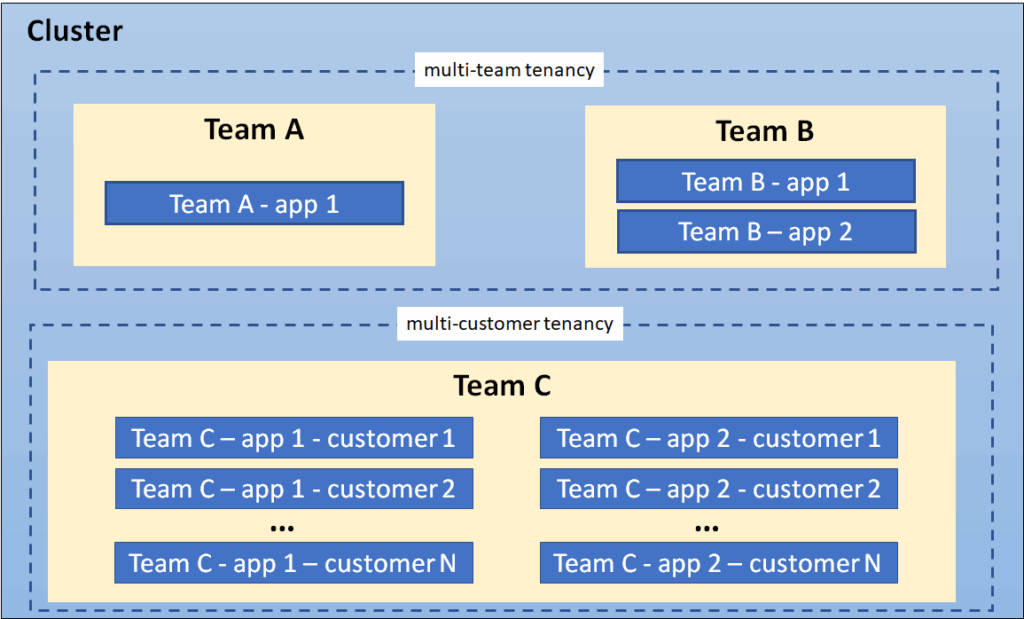

Multi-tenancy in Kubernetes refers to the practice of hosting multiple separate user groups (tenants) on a single cluster. These tenants could be development teams, applications, customers, or entire environments like staging and production. The principle sounds straightforward, but the implementation details determine whether you achieve true isolation or merely superficial separation.

Without proper isolation, tenants can negatively impact each other in various ways. A memory-hungry application in one namespace might starve critical workloads in another namespace of resources. Overly permissive network policies could allow unauthorized communication between tenant applications. Administrators with excessive privileges might accidentally modify resources they shouldn’t access. All these scenarios highlight why comprehensive isolation strategies are essential for production Kubernetes deployments.

The business benefits of multi-tenancy are compelling: reduced infrastructure costs, simplified management, more efficient resource utilization, and standardized deployment practices. However, these advantages only materialize when isolation is implemented correctly—which is precisely what this guide addresses.

Building Blocks of Namespace Isolation in Kubernetes

Creating truly isolated tenant environments in Kubernetes requires combining several native features. No single mechanism provides complete isolation, but together they form a robust architecture for multi-tenant workloads.

Namespaces: The Foundation of Multi-Tenancy

Namespaces serve as the fundamental building block for multi-tenancy in Kubernetes. They provide a scope for names, allowing you to organize cluster resources into non-overlapping groups. Importantly, namespaces give you a mechanism for dividing cluster resources between multiple users through resource quotas. While namespaces offer basic separation, they don’t provide network isolation, security boundaries, or access control by default—these must be configured separately.

RBAC: Controlling Who Can Access What

Role-Based Access Control (RBAC) is essential for limiting what users can do within specific namespaces. Through Roles, ClusterRoles, RoleBindings, and ClusterRoleBindings, RBAC allows you to define precise permissions at both namespace and cluster levels. For proper isolation, each tenant should have carefully scoped permissions that follow the principle of least privilege—access only to what’s needed and nothing more.

A well-designed RBAC strategy prevents tenants from viewing or modifying other tenants’ resources while allowing cluster administrators to maintain oversight across all namespaces. This separation of concerns is critical for both security and operational stability in multi-tenant environments.
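Instead of maintaining a custom Role per tenant, the built-in edit ClusterRole can be bound with a namespaced RoleBinding. Because a RoleBinding only grants permissions inside its own namespace, the cluster-wide role is effectively scoped to a single tenant. This sketch reuses the tenant-a namespace and tenant-a-team group used elsewhere in this guide; whether your authenticator actually asserts that group name is an assumption.

```yaml
# RoleBinding that reuses the built-in "edit" ClusterRole.
# Because this is a RoleBinding (not a ClusterRoleBinding),
# the permissions apply only inside the tenant-a namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: tenant-a-edit
  namespace: tenant-a
subjects:
  - kind: Group
    name: tenant-a-team          # assumption: group name asserted by your authenticator
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: edit                     # built-in aggregated role shipped with Kubernetes
  apiGroup: rbac.authorization.k8s.io
```

Per the Kubernetes RBAC documentation, edit does not allow viewing or modifying Roles or RoleBindings and, like admin, grants no write access to ResourceQuotas. It does, however, allow reading Secrets and running pods as any ServiceAccount in the namespace, which is worth weighing against a narrower custom Role.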

Network Policies: Creating Tenant Boundaries

By default, all pods in a Kubernetes cluster can communicate with each other, regardless of namespace boundaries. This default behavior undermines isolation efforts, as malicious or compromised workloads could potentially access services in other namespaces. Network Policies act as a virtual firewall, allowing you to control traffic flow between namespaces, pods, and external networks. They are only enforced if the cluster’s network (CNI) plugin supports them; with a plugin that lacks NetworkPolicy support, the objects are accepted by the API server but have no effect.

For proper tenant isolation, each namespace should have restrictive Network Policies that explicitly define allowed communications and deny everything else. This zero-trust approach ensures that even if a workload is compromised, the blast radius remains contained within its namespace boundary.
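A minimal sketch of the namespace boundary described above, assuming a CNI plugin that enforces NetworkPolicy: once any policy selects a pod for ingress, that pod accepts only traffic that some policy allows, so this single policy limits ingress for every pod in tenant-a to pods in the same namespace.

```yaml
# Allow ingress to every pod in tenant-a only from pods in tenant-a.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace
  namespace: tenant-a
spec:
  podSelector: {}            # select all pods in tenant-a
  policyTypes:
    - Ingress
  ingress:
    - from:
        # A podSelector without a namespaceSelector matches pods in the
        # policy's own namespace, i.e. tenant-a only.
        - podSelector: {}
```

Keep in mind that this also blocks ingress controllers or monitoring agents running in other namespaces; those need their own, explicitly scoped allow rules.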

Resource Quotas: Preventing Noisy Neighbors

Resource contention is one of the most common challenges in multi-tenant environments. Without proper limits, one tenant’s workloads could consume excessive CPU, memory, or storage, degrading the performance of other applications. ResourceQuotas allow administrators to set hard limits on resource consumption within a namespace, while LimitRanges establish defaults and constraints for individual containers.

Effective resource management ensures fair distribution of cluster resources and prevents performance degradation from “noisy neighbors.” It also provides predictable scaling behavior, which is essential for production workloads with specific performance requirements.
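A LimitRange that supplies per-container defaults and a ceiling might look like the following; the values are illustrative assumptions, not recommendations. Defaults matter in practice: when a ResourceQuota constrains CPU or memory requests or limits, the API server rejects pods that do not declare those values, and a LimitRange default fills them in.

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: tenant-defaults
  namespace: tenant-a
spec:
  limits:
    - type: Container
      defaultRequest:        # used when a container omits resources.requests
        cpu: 250m
        memory: 256Mi
      default:               # used when a container omits resources.limits
        cpu: 500m
        memory: 512Mi
      max:                   # no single container may set limits above this
        cpu: "2"
        memory: 4Gi
```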

Step-by-Step Implementation Guide for Namespace Isolation

Implementing proper namespace isolation isn’t simply a matter of creating namespaces—it requires a systematic approach across multiple Kubernetes subsystems. This section provides a practical implementation guide that platform administrators can follow to establish true multi-tenancy.

1. Create Dedicated Namespaces for Each Tenant

The first step is creating a dedicated namespace for each tenant. While this seems straightforward, it’s important to establish consistent naming conventions and labeling strategies that scale with your organization. A structured approach might include department/team identifiers, environment types, or other organizational metadata as part of the namespace name.

Namespace creation should be automated through Infrastructure as Code (IaC) tools like Terraform or through Kubernetes operators to ensure consistency and auditability. This automation should also apply appropriate labels and annotations that will be used by monitoring tools and policy engines.
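A declarative version of the namespace created by the kubectl commands below, suitable for committing to Git and applying from an IaC or GitOps pipeline, is sketched here. The annotation key is a hypothetical example of organizational metadata.

```yaml
# Declarative equivalent of "kubectl create namespace" plus "kubectl label".
apiVersion: v1
kind: Namespace
metadata:
  name: tenant-a
  labels:
    tenant: team-alpha           # read by policy engines and dashboards
    environment: development
  annotations:
    example.com/owner: "team-alpha"   # hypothetical annotation key
```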

```shell
kubectl create namespace tenant-a
kubectl label namespace tenant-a tenant=team-alpha environment=development
```

2. Configure RBAC Permissions

Each namespace requires carefully crafted RBAC configurations that grant tenant users only the permissions they need. Start by creating a Role (namespace-scoped) or ClusterRole (cluster-wide) that defines the allowed actions. Then create RoleBindings to associate these permissions with specific users or groups.

For most multi-tenant scenarios, you’ll want to restrict users to their assigned namespaces and prevent access to cluster-level resources. Service accounts used by applications should follow the same principle of least privilege, with permissions limited to the specific API operations they require. Applying zero-trust principles more broadly can further strengthen the protection of your resources.

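```yaml
# Role granting broad access to workload resources inside tenant-a only.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: tenant-a
  name: tenant-full-access
rules:
  # Core API group: resources are listed explicitly instead of "*" so that
  # ResourceQuotas and LimitRanges are excluded; otherwise tenants could
  # raise or delete their own limits.
  - apiGroups: [""]
    resources: ["pods", "pods/log", "services", "configmaps", "secrets", "persistentvolumeclaims"]
    verbs: ["*"]
  # The deprecated "extensions" group is dropped; Deployments and friends live in "apps".
  - apiGroups: ["apps"]
    resources: ["*"]
    verbs: ["*"]
  - apiGroups: ["batch"]
    resources: ["jobs", "cronjobs"]
    verbs: ["*"]
---
# Bind the Role to the tenant's group; the binding is also scoped to tenant-a.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: tenant-a-access
  namespace: tenant-a
subjects:
  - kind: Group
    name: tenant-a-team
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: tenant-full-access
  apiGroup: rbac.authorization.k8s.io
```

3. Implement Network Policies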

Network Policies are essential for preventing unauthorized communication between namespaces. A default deny-all policy should be applied to each namespace, followed by more specific policies that permit only required traffic flows. This zero-trust networking model ensures that even if a workload is compromised, lateral movement between namespaces is restricted.

For proper isolation, configure policies that block ingress from other namespaces while allowing egress to specific services that tenants need to access (such as databases or shared APIs). Remember that Network Policies are additive, so multiple policies affecting the same pods are combined to determine the effective ruleset.
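As a sketch of an explicit egress allowance, the policy below lets every pod in tenant-a reach a shared API on TCP port 8080. The shared-services namespace and the app: shared-api label are hypothetical. If the shared namespace has its own default-deny policy, it also needs a matching ingress rule for tenant-a.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-egress-shared-api
  namespace: tenant-a
spec:
  podSelector: {}
  policyTypes:
    - Egress
  egress:
    - to:
        # namespaceSelector and podSelector in the SAME list item are ANDed:
        # only app=shared-api pods inside the shared-services namespace match.
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: shared-services   # label set automatically on namespaces
          podSelector:
            matchLabels:
              app: shared-api                                # hypothetical label
      ports:
        # The destination pod's port, not the Service port.
        - protocol: TCP
          port: 8080
```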

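```yaml
# Deny all ingress and egress for every pod in tenant-a unless another
# policy explicitly allows it.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all
  namespace: tenant-a
spec:
  podSelector: {}
  policyTypes:
    - Ingress
    - Egress
---
# Re-allow DNS lookups to the cluster DNS service in kube-system.
# DNS uses UDP port 53 and falls back to TCP port 53 for large responses.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns
  namespace: tenant-a
spec:
  podSelector: {}
  policyTypes:
    - Egress
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```

4. Set Up Resource Quotas and Limits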

To prevent resource contention between tenants, apply ResourceQuotas to each namespace. These quotas define the maximum amount of CPU, memory, storage, and object counts that a namespace can consume. LimitRanges complement quotas by enforcing minimum and maximum resource requirements for individual containers and setting default values when not specified.

Resource management becomes increasingly important as your cluster scales. Without proper quotas, a single tenant could consume most or all of a cluster’s resources, effectively causing a denial of service for other tenants. Careful capacity planning and regular review of quota utilization are essential practices for maintaining a healthy multi-tenant environment.
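A namespace can hold more than one ResourceQuota, so service exposure can be capped separately from compute. This sketch, with illustrative values, prevents a tenant from opening NodePorts on every node or provisioning cloud load balancers, and caps the number of Secrets.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: tenant-exposure-quota
  namespace: tenant-a
spec:
  hard:
    services.nodeports: "0"        # no NodePort (or LoadBalancer-allocated node) ports
    services.loadbalancers: "0"    # no cloud load balancers
    count/secrets: "50"            # object-count quota using the count/<resource> syntax
```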

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: tenant-quota
  namespace: tenant-a
spec:
  hard:
    # Aggregate compute across all pods in the namespace
    requests.cpu: "10"
    requests.memory: 20Gi
    limits.cpu: "20"
    limits.memory: 40Gi
    # Object counts
    pods: "50"
    services: "20"
    persistentvolumeclaims: "15"
```

5. Apply Pod Security Standards

Pod Security Standards, enforced by the Pod Security Admission controller that replaced the removed PodSecurityPolicy API, provide another crucial layer of isolation by restricting the security context of pods. These standards help prevent privilege escalation, container breakouts, and host resource access that could compromise isolation boundaries.

For multi-tenant clusters, apply the Baseline or Restricted profile rather than the more permissive Privileged profile. Both block privileged containers, hostPath volumes, and host namespaces such as the host network, all of which could potentially bypass namespace isolation. Restricted additionally requires pods to run as non-root users, disallow privilege escalation, and drop all Linux capabilities.

Implementation can be done through the built-in Pod Security Admission controller (stable since Kubernetes 1.25), or through third-party tools like OPA Gatekeeper or Kyverno for more complex policy enforcement scenarios.

Real-World Isolation Architecture Patterns

Soft Multi-Tenancy: Team-Based Separation

Soft multi-tenancy is typically used within a single organization where different teams share a cluster but require logical separation. This approach assumes a basic level of trust between tenants while still providing isolation for resource management, access control, and organizational purposes. In soft multi-tenancy, security controls focus on preventing accidental interference rather than defending against malicious actions from other tenants.

Hard Multi-Tenancy: Customer Isolation

Hard multi-tenancy implements rigorous security boundaries intended to isolate untrusted tenants from each other, such as in SaaS platforms where each tenant represents a different customer. This approach requires comprehensive isolation across all dimensions: strict network policies, pod security standards, strong RBAC configurations, resource quotas, and regular security audits. Hard multi-tenancy aims to provide security comparable to dedicated clusters while maintaining the economic benefits of shared infrastructure.

Hybrid Approaches for Complex Organizations

Many organizations implement hybrid approaches that combine elements of both soft and hard multi-tenancy. For example, development and testing environments might use soft multi-tenancy between internal teams, while production environments implement harder boundaries. Similarly, core services might run in dedicated namespaces with special privileges, while standard application workloads operate in more restricted tenant namespaces.

The ideal approach depends on your specific security requirements, organizational structure, and operational model. Most successful implementations start with a clear security baseline applied to all namespaces, then apply additional controls based on the sensitivity of the workloads and the trust relationship between tenants.

Advanced Isolation Techniques

While the core Kubernetes features provide a solid foundation for namespace isolation, production environments often require additional layers of security and control. These advanced techniques build upon the basic isolation mechanisms to create more robust multi-tenant architectures.

Pod Security Admission Controllers

The Pod Security Admission controller, which replaced Pod Security Policies in Kubernetes 1.25, provides a built-in mechanism for enforcing security standards at the namespace level. It offers three profiles (Privileged, Baseline, and Restricted) that progressively limit the security context of pods. For multi-tenant environments, configuring namespaces with the Restricted profile prevents tenants from running privileged containers, accessing host resources, or using host namespaces—capabilities that could potentially breach isolation boundaries.

Implementation involves labeling namespaces with the desired enforcement level, mode, and version. This declarative approach makes it easier to ensure consistent security controls across all tenant namespaces and provides clear documentation of your security posture.
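Applied to a tenant namespace, the labels look like this; they can be merged into the tenant’s existing Namespace manifest. The keys follow the documented pod-security.kubernetes.io/<mode> and <mode>-version pattern; choosing Restricted for every mode is an assumption suited to hard multi-tenancy.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: tenant-a
  labels:
    # Reject pods that violate the Restricted profile.
    pod-security.kubernetes.io/enforce: restricted
    # "latest" tracks the cluster version; pin a minor version (e.g. v1.30)
    # to keep the rules stable across upgrades.
    pod-security.kubernetes.io/enforce-version: latest
    # Also warn users at apply time and record violations in audit logs.
    pod-security.kubernetes.io/warn: restricted
    pod-security.kubernetes.io/audit: restricted
```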

OPA Gatekeeper for Policy Enforcement

Open Policy Agent (OPA) Gatekeeper extends Kubernetes’ admission control with customizable policies written in Rego. This powerful policy engine allows administrators to implement organization-specific security requirements that go beyond what’s possible with native Kubernetes controls. In multi-tenant environments, Gatekeeper can enforce namespace isolation by validating resource definitions against policies that prevent cross-namespace references, restrict network access patterns, or enforce security best practices.

The key advantage of Gatekeeper is its ability to implement complex, context-aware policies. For example, you could create policies that enforce stricter controls on production namespaces compared to development ones, or that implement tenant-specific compliance requirements.
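As a sketch of policy-as-code with Gatekeeper, the template and constraint below are adapted from the K8sRequiredLabels example in the Gatekeeper documentation and require every namespace to carry a tenant label, which downstream policies and dashboards can then rely on. As written it applies to every new namespace, including system namespaces created later, so narrow the match block to suit your environment.

```yaml
apiVersion: templates.gatekeeper.sh/v1
kind: ConstraintTemplate
metadata:
  name: k8srequiredlabels
spec:
  crd:
    spec:
      names:
        kind: K8sRequiredLabels
      validation:
        openAPIV3Schema:
          type: object
          properties:
            labels:
              type: array
              items:
                type: string
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8srequiredlabels

        # Report a violation listing every required label the object lacks.
        violation[{"msg": msg, "details": {"missing_labels": missing}}] {
          provided := {label | input.review.object.metadata.labels[label]}
          required := {label | label := input.parameters.labels[_]}
          missing := required - provided
          count(missing) > 0
          msg := sprintf("you must provide labels: %v", [missing])
        }
---
# Constraint: every Namespace must have a "tenant" label.
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: namespaces-must-have-tenant
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Namespace"]
  parameters:
    labels: ["tenant"]
```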

Custom Admission Controllers

For organizations with specific requirements not addressed by existing tools, custom admission controllers provide a way to insert validation logic directly into the Kubernetes API request flow. These controllers can analyze incoming requests to create or modify resources, rejecting those that violate your isolation requirements. While more complex to implement than off-the-shelf solutions, custom controllers offer maximum flexibility for unique multi-tenant scenarios.
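Registering a custom controller is done with a ValidatingWebhookConfiguration. In this sketch, the webhook name, the tenant-guard Service in platform-system, and its /validate path are hypothetical; the validation logic itself lives in that service and is not shown. The namespaceSelector limits the webhook to namespaces that carry a tenant label.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  name: tenant-isolation-guard             # hypothetical
webhooks:
  - name: tenant-guard.example.com         # hypothetical; must be a fully qualified name
    admissionReviewVersions: ["v1"]
    sideEffects: None
    failurePolicy: Fail                    # reject requests if the webhook cannot be reached
    timeoutSeconds: 5
    clientConfig:
      service:
        name: tenant-guard                 # hypothetical Service running the validation logic
        namespace: platform-system
        path: /validate
      # caBundle: <base64-encoded CA that signed the webhook's serving certificate>
    rules:
      - apiGroups: ["", "apps"]
        apiVersions: ["v1"]
        operations: ["CREATE", "UPDATE"]
        resources: ["pods", "services", "deployments"]
        scope: "Namespaced"
    # Only call the webhook for requests in tenant namespaces.
    namespaceSelector:
      matchExpressions:
        - key: tenant
          operator: Exists
```

failurePolicy: Fail keeps the isolation check from being silently skipped, at the cost of blocking tenant deployments while the webhook is unavailable.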

Service Mesh for Deep Network Control

Service meshes like Istio, Linkerd, or Cilium add powerful networking capabilities that enhance namespace isolation. They provide fine-grained traffic management, observability, and security features that operate at the application protocol level rather than just IP and port. For multi-tenant environments, service meshes enable mTLS encryption between services, detailed access control policies, and traffic monitoring that can detect attempts to bypass namespace boundaries.
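With Istio, for example, namespace-scoped mTLS and access control could be sketched as follows, assuming Istio is installed and sidecar injection is enabled for tenant-a. The PeerAuthentication rejects plaintext traffic, and because the AuthorizationPolicy is an ALLOW policy, any request whose mTLS identity is not from tenant-a is denied.

```yaml
# Require mutual TLS for all workloads in tenant-a.
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: tenant-a
spec:
  mtls:
    mode: STRICT
---
# Allow requests only from workloads whose identity belongs to tenant-a;
# everything else is implicitly denied once an ALLOW policy exists.
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: allow-same-namespace
  namespace: tenant-a
spec:
  action: ALLOW
  rules:
    - from:
        - source:
            namespaces: ["tenant-a"]
```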

The observability features of service meshes are particularly valuable for multi-tenant troubleshooting, as they provide insights into cross-namespace communication patterns that might indicate isolation failures. Additionally, the ability to implement circuit breaking and rate limiting helps prevent one tenant’s traffic issues from affecting others.
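Circuit breaking in Istio is configured with a DestinationRule. This sketch reuses a hypothetical shared-api service in a hypothetical shared-services namespace; it caps connections and pending requests and ejects endpoints that keep returning 5xx errors, with illustrative thresholds. These limits are enforced per calling proxy rather than globally. Rate limiting needs separate configuration and is not shown.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: shared-api-circuit-breaker
  namespace: shared-services               # hypothetical namespace
spec:
  host: shared-api.shared-services.svc.cluster.local
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 100                # per calling proxy
      http:
        http1MaxPendingRequests: 50
        maxRequestsPerConnection: 10
    outlierDetection:
      consecutive5xxErrors: 5              # eject an endpoint after 5 consecutive 5xx
      interval: 30s
      baseEjectionTime: 60s
```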

Monitoring and Troubleshooting Multi-Tenant Environments

Effective monitoring is essential for maintaining isolation in multi-tenant Kubernetes environments. Without proper visibility, isolation breaches may go undetected, resource contention issues could escalate, and administrators might struggle to identify the source of problems that affect multiple tenants.

Tenant-Aware Logging

Centralized logging systems should capture namespace information along with other metadata to enable tenant-specific filtering and analysis. This allows administrators to isolate logs from a particular tenant when troubleshooting issues, while giving tenant users access only to their own logs. Tools like Loki, Elasticsearch, or cloud-native logging solutions can be configured to enforce these access boundaries through RBAC integration.

Consider implementing structured logging standards that include tenant and namespace identifiers in log entries, making it easier to correlate events across different components of a tenant’s application stack. This tenant-aware approach to logging supports both isolation requirements and operational efficiency.

Namespace-Level Resource Monitoring

Resource monitoring in multi-tenant clusters needs to provide both cluster-wide visibility for administrators and namespace-specific metrics for tenant users. Prometheus and Grafana can be configured with multi-tenancy in mind, using namespace labels to segregate metrics and dashboard access. Key metrics to monitor include resource utilization against quotas, API server requests by namespace, and network traffic patterns between namespaces.

Alert thresholds should be set to provide early warning of potential isolation issues, such as unusual cross-namespace network activity or approaching resource limits. Proactive monitoring helps maintain the integrity of namespace boundaries before problems affect tenant workloads.
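An alert on quota utilization, assuming the Prometheus Operator and kube-state-metrics are installed, might look like this. The expression divides each quota’s used value by its hard limit, modeled on the KubeQuotaAlmostFull rule from the kubernetes-mixin project; the 90% threshold, the monitoring namespace, and any labels your Prometheus ruleSelector requires are assumptions.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: tenant-quota-alerts
  namespace: monitoring                    # assumption: namespace watched for rules
spec:
  groups:
    - name: tenant-quotas
      rules:
        - alert: TenantQuotaAlmostFull
          # used / hard per namespace, quota, and resource (kube-state-metrics).
          expr: |
            kube_resourcequota{type="used"}
              / ignoring(instance, job, type)
            (kube_resourcequota{type="hard"} > 0)
              > 0.9
          for: 15m
          labels:
            severity: warning
          annotations:
            summary: "Namespace {{ $labels.namespace }} uses over 90% of {{ $labels.resource }} in quota {{ $labels.resourcequota }}"
```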

Common Isolation Failures and Fixes

Even well-designed multi-tenant environments can experience isolation failures. Common issues include overly permissive RBAC configurations, missing network policies, resource contention despite quotas, and security misconfigurations. Regular auditing can help identify these issues before they lead to serious problems.

When troubleshooting isolation failures, start by verifying the basic configuration elements: RBAC roles and bindings, network policies, resource quotas, and pod security standards. Many isolation breaches result from misconfigurations or overlooked settings rather than fundamental design flaws. Tools like kube-bench (which checks clusters against the CIS Kubernetes Benchmark), Pluto (which flags deprecated API versions), or other Kubernetes security scanners can help identify misconfigurations systematically.

Alternative Approaches: When Namespaces Aren’t Enough

Node-Level Isolation with Taints and Tolerations

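For scenarios requiring stronger isolation than namespaces alone can provide, node-level segregation offers an additional boundary layer. A taint on dedicated nodes repels pods that lack a matching toleration; giving tenant pods that toleration, together with a nodeSelector or node affinity that pins them to those nodes, ensures specific workloads run only on dedicated nodes. A toleration on its own merely permits scheduling onto tainted nodes and does not keep pods off shared ones. This approach creates physical separation between tenants, reducing exposure to cross-tenant side-channel attacks and the impact of node-level resource contention.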

Node isolation is particularly valuable for workloads with specialized hardware requirements, compliance needs that mandate physical separation, or performance-critical applications that cannot tolerate resource variability. The trade-off is reduced cluster efficiency, as dedicated nodes may remain underutilized compared to shared ones.
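A tenant workload pinned to dedicated nodes could be sketched as below. The taint and label key tenant=tenant-a and the image are hypothetical; the security context is included so the pod also passes a Restricted Pod Security profile, and the resource values satisfy a compute quota. Nothing forces tenants to keep these scheduling fields, so in practice a policy engine or admission controller should inject or enforce them.

```yaml
# Assumes the dedicated nodes were prepared with (hypothetical key/value):
#   kubectl taint nodes <node> tenant=tenant-a:NoSchedule
#   kubectl label nodes <node> tenant=tenant-a
apiVersion: v1
kind: Pod
metadata:
  name: tenant-a-app
  namespace: tenant-a
spec:
  # The toleration only PERMITS scheduling onto the tainted nodes...
  tolerations:
    - key: tenant
      operator: Equal
      value: tenant-a
      effect: NoSchedule
  # ...while the nodeSelector REQUIRES it, so the pod cannot land elsewhere.
  nodeSelector:
    tenant: tenant-a
  securityContext:
    runAsNonRoot: true
    seccompProfile:
      type: RuntimeDefault
  containers:
    - name: app
      image: registry.example.com/tenant-a/app:1.0   # hypothetical image
      securityContext:
        allowPrivilegeEscalation: false
        capabilities:
          drop: ["ALL"]
      resources:
        requests:
          cpu: 250m
          memory: 256Mi
        limits:
          cpu: 500m
          memory: 512Mi
```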

Multiple Physical Clusters vs. Multi-Tenancy

Despite the advancements in Kubernetes multi-tenancy, some organizations determine that completely separate clusters provide the best isolation for their needs. This approach maximizes tenant separation by eliminating all shared components but increases operational overhead and costs. Dedicated clusters might be appropriate for highly sensitive workloads, customers with specific compliance requirements, or scenarios where different clusters need substantially different configurations.

The decision between multi-tenancy and multiple clusters should consider security requirements, operational complexity, cost factors, and scaling needs. Many organizations implement a hybrid approach, using multi-tenancy for most workloads while maintaining dedicated clusters for special cases.

Securing Your Multi-Tenant Setup for Production

Before deploying a multi-tenant Kubernetes architecture to production, comprehensive security validation is essential. The interconnected nature of Kubernetes means that seemingly minor misconfigurations can create significant security vulnerabilities, especially in shared environments where isolation boundaries are critical.

Security Checklist Before Going Live

A thorough pre-production security assessment should verify all isolation mechanisms. This includes validating RBAC configurations with permission testing, verifying network policy effectiveness through connectivity tests, confirming resource quota enforcement under load, and checking Pod Security Standard compliance. The assessment should also include API server auditing configuration, secrets management practices, and proper encryption of sensitive data.
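Permission testing can be scripted with a SubjectAccessReview, which asks the API server whether a given user and group could perform an action. In this sketch, alice is a hypothetical member of tenant-a-team; submitting it with kubectl create -f sar.yaml -o yaml should return status.allowed: false if tenant boundaries hold. The same check is available interactively as kubectl auth can-i list pods --namespace tenant-b --as alice --as-group tenant-a-team.

```yaml
# Can a member of tenant-a-team list pods in tenant-b? Expected answer: no.
apiVersion: authorization.k8s.io/v1
kind: SubjectAccessReview
spec:
  user: alice                    # hypothetical user
  groups: ["tenant-a-team"]
  resourceAttributes:
    namespace: tenant-b
    verb: list
    resource: pods
```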

Beyond technical controls, review your incident response procedures for multi-tenant scenarios. Clear processes for investigating and containing isolation breaches help minimize their impact when they occur.

Automated Policy Enforcement

Manual configuration of isolation controls is error-prone and difficult to scale. Automated policy enforcement through admission controllers, GitOps workflows, and CI/CD pipelines ensures consistent application of security controls across all tenant namespaces. These automation tools should validate changes against your security baseline before they reach the cluster, preventing misconfigurations that could compromise isolation.

Policy-as-code approaches, using tools like OPA Gatekeeper, Kyverno, or custom admission webhooks, allow you to express isolation requirements as versioned, testable code. This enables systematic validation and provides an audit trail of security control evolution over time.
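As a policy-as-code sketch with Kyverno, the ClusterPolicy below generates a default-deny NetworkPolicy in every namespace whose name matches a tenant-* naming convention (an assumption), and synchronize: true restores it if it is modified or deleted. Pair it with a generated DNS allowance such as the allow-dns policy from step 3. Depending on the Kyverno version, its background controller may need additional RBAC permissions to create NetworkPolicies.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: tenant-default-deny
spec:
  rules:
    - name: generate-default-deny
      match:
        any:
          - resources:
              kinds:
                - Namespace
              names:
                - "tenant-*"                  # only namespaces following the tenant convention
      generate:
        apiVersion: networking.k8s.io/v1
        kind: NetworkPolicy
        name: default-deny-all
        namespace: "{{request.object.metadata.name}}"   # the namespace being created
        synchronize: true                     # revert edits and recreate on deletion
        data:
          spec:
            podSelector: {}
            policyTypes:
              - Ingress
              - Egress
```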

Example Policy-as-Code for Namespace Isolation

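Using OPA Gatekeeper to enforce that pods in tenant namespaces cannot use the host network (this constraint assumes the K8sPSPHostNetworkingPorts ConstraintTemplate from the Gatekeeper policy library is installed):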

```yaml
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sPSPHostNetworkingPorts
metadata:
  name: prevent-host-network
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Pod"]
    namespaces:
      - "tenant-a"
      - "tenant-b"
  parameters:
    hostNetwork: false
```

Consider implementing continuous validation that regularly tests isolation boundaries, rather than relying solely on configuration checks. Tools like Kubernetes Cluster Network Testing (kube-nettest) or network policy validators can probe actual runtime behavior to verify that your isolation mechanisms are functioning as expected.
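A runtime probe can be as simple as a Job that tries to cross the boundary. This sketch assumes a hypothetical Service named web in tenant-a and runs from tenant-b; the Job succeeds only when the connection fails. Because any failure (including a DNS error or a missing Service) counts as blocked, pair it with a positive control that must succeed from inside tenant-a.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: isolation-probe
  namespace: tenant-b
spec:
  backoffLimit: 0
  template:
    spec:
      restartPolicy: Never
      securityContext:
        runAsNonRoot: true
        runAsUser: 65534              # "nobody"; keeps the probe Restricted-compliant
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: probe
          image: busybox:1.36
          command:
            - sh
            - -c
            - |
              # Exit 1 (Job fails) if tenant-a is reachable from tenant-b.
              if wget -q -T 5 -O /dev/null http://web.tenant-a.svc.cluster.local; then
                echo "isolation breach: tenant-a reachable from tenant-b"; exit 1
              else
                echo "connection blocked as expected"
              fi
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              drop: ["ALL"]
          resources:
            limits:                   # requests default to these limits
              cpu: 100m
              memory: 64Mi
```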

Regular Isolation Audits

Isolation is not a one-time configuration but an ongoing requirement that needs regular verification. Schedule periodic audits of your multi-tenant environment, focusing on permission boundaries, network policies, and resource utilization patterns. These audits should look for signs of isolation drift—gradual weakening of security controls through incremental changes or exceptions that accumulate over time.

Auditing tools like Trivy, kube-bench, and kube-hunter can identify misconfigurations and vulnerabilities that might affect isolation. Combine automated scanning with manual reviews, particularly after significant changes to your cluster architecture or tenant onboarding processes.

Namespace Isolation: The Path Forward

Kubernetes continues to evolve its multi-tenancy capabilities with each release. Future improvements will likely focus on simplifying isolation implementation, strengthening security boundaries, and providing better tools for managing multi-tenant environments at scale. Organizations implementing multi-tenancy today should design their architectures to accommodate these improvements while maintaining compatibility with existing security controls.

The most successful multi-tenant Kubernetes deployments combine technical controls with clear governance processes. Document your isolation architecture, tenant onboarding procedures, and security responsibilities. Create a tenant agreement that clearly communicates expectations and limitations of the shared environment. This comprehensive approach ensures that multi-tenancy delivers on its promise of efficiency without compromising security or operational stability.

Frequently Asked Questions

Multi-tenant Kubernetes architectures raise common questions about implementation details, security boundaries, and operational concerns. Here are answers to the most frequently asked questions about namespace isolation:

- How do I determine the right level of isolation for my organization?

- What are the performance implications of multi-tenancy?

- Can I implement different isolation levels for different types of tenants?

- How do shared services work in a multi-tenant architecture?

- What is the overhead of maintaining proper isolation controls?

The appropriate isolation strategy depends on your specific security requirements, organizational structure, and operational model. Start with a clear understanding of the trust relationships between tenants and the sensitivity of the workloads they’ll be running. This assessment will guide your decisions about which isolation mechanisms to implement and how strictly to enforce them.

Remember that isolation is just one aspect of a comprehensive Kubernetes security strategy. It should complement other security practices like vulnerability management and secret protection.

How do I prevent tenants from seeing each other’s resources?

By default, users with namespace-scoped permissions cannot see resources in other namespaces. However, certain resource types (like nodes, persistent volumes, and storage classes) are cluster-scoped and visible across namespace boundaries. To prevent tenants from seeing each other’s resources, implement strict RBAC policies that grant only namespace-specific permissions and avoid giving users broad cluster-level access. For complete visual separation, consider implementing custom UIs or dashboards that filter resources by namespace, or use virtual clusters that provide fully isolated Kubernetes API endpoints.

Can tenants in separate namespaces communicate by default?

Yes, by default all pods in a Kubernetes cluster can communicate with each other across namespace boundaries. This default behavior allows for flexible application architectures but undermines isolation efforts. To prevent inter-namespace communication, you must explicitly implement NetworkPolicies that deny ingress from and egress to other namespaces. A common approach is to apply a default-deny policy to each tenant namespace, then selectively allow only the specific communication paths that are required for application functionality.

What happens if a tenant exceeds their resource quota?

When a namespace reaches its ResourceQuota limits, the Kubernetes API server rejects any request that would push usage past those limits. Existing pods keep running, but new pods cannot be created if they would exceed the quota. With controllers such as Deployments, the Deployment object itself is still accepted; it is the ReplicaSet’s pod creation that fails, so the error surfaces as events on the ReplicaSet rather than as an error from kubectl apply. This behavior prevents a tenant from consuming more than their allocated share of cluster resources but can cause deployment failures if quota limits are reached unexpectedly. Monitoring systems should alert on approaching quota limits so administrators can take proactive action before applications are affected.

Is namespace isolation sufficient for highly sensitive workloads?

For highly sensitive workloads or untrusted tenants, namespace isolation alone may not provide sufficient security guarantees. Kubernetes namespaces were not designed as hard security boundaries, and sophisticated attacks might potentially bypass namespace isolation through kernel vulnerabilities, container escapes, or control plane weaknesses. Organizations with stringent security requirements should consider additional isolation mechanisms such as node-level separation, virtual clusters (like those provided by Loft’s vcluster), or entirely separate physical clusters for their most sensitive workloads.

The security assessment should consider the threat model, data sensitivity, compliance requirements, and the technical sophistication of potential attackers when determining if namespace isolation meets your security needs.

How do I migrate from a single-tenant to multi-tenant Kubernetes architecture?

Migrating to a multi-tenant architecture requires careful planning and incremental implementation. Start by establishing your isolation requirements and designing the target architecture with all necessary security controls. Then create a tenant onboarding process that includes namespace setup, RBAC configuration, network policies, and resource quotas. Begin migration with less critical workloads to validate your approach before moving sensitive applications.

The migration process should include comprehensive testing of isolation boundaries, monitoring configuration, and tenant user experience. Document the new architecture and provide training for both administrators and tenant users on their responsibilities in the shared environment.

With proper implementation of namespace isolation techniques, organizations can achieve both the efficiency benefits of shared infrastructure and the security requirements of separated workloads. The key is understanding that true isolation requires multiple complementary controls working together, regularly validated through testing and auditing.

For organizations looking to implement robust multi-tenancy solutions with minimal operational overhead, SlickFinch provides all the expertise […] powerful alternative to manual namespace configuration while maintaining compatibility with existing Kubernetes workflows and tools.