Key Takeaways

MLOps on Google Cloud Platform (GCP) streamlines machine learning (ML) workflows by integrating development, deployment, and monitoring processes.

Core components of MLOps on GCP include Vertex AI, Cloud Build, and TensorFlow Extended (TFX), Google's open-source library for production ML pipelines, which can run on Vertex AI Pipelines.

MLOps on GCP supports continuous integration and delivery (CI/CD) of ML models, improving efficiency and reliability.

Automating model training and deployment with GCP tools reduces human error and accelerates time-to-market.

Real-world examples demonstrate the effectiveness of MLOps on GCP in achieving seamless ML deployments.

What is MLOps on Google Cloud Platform?

MLOps, or Machine Learning Operations, on Google Cloud Platform (GCP) is a set of practices that combines machine learning, DevOps, and data engineering to automate and streamline the entire ML lifecycle. From data preparation and model training to deployment and monitoring, MLOps on GCP ensures that ML models are efficiently developed, tested, and maintained.

Core Principles of MLOps

The core principles of MLOps revolve around automation, collaboration, and continuous improvement. By automating repetitive tasks, data scientists and ML engineers can focus on innovation. Collaboration between teams ensures that models are robust and reliable. Continuous improvement through monitoring and feedback loops allows for rapid iteration and enhancement of models.

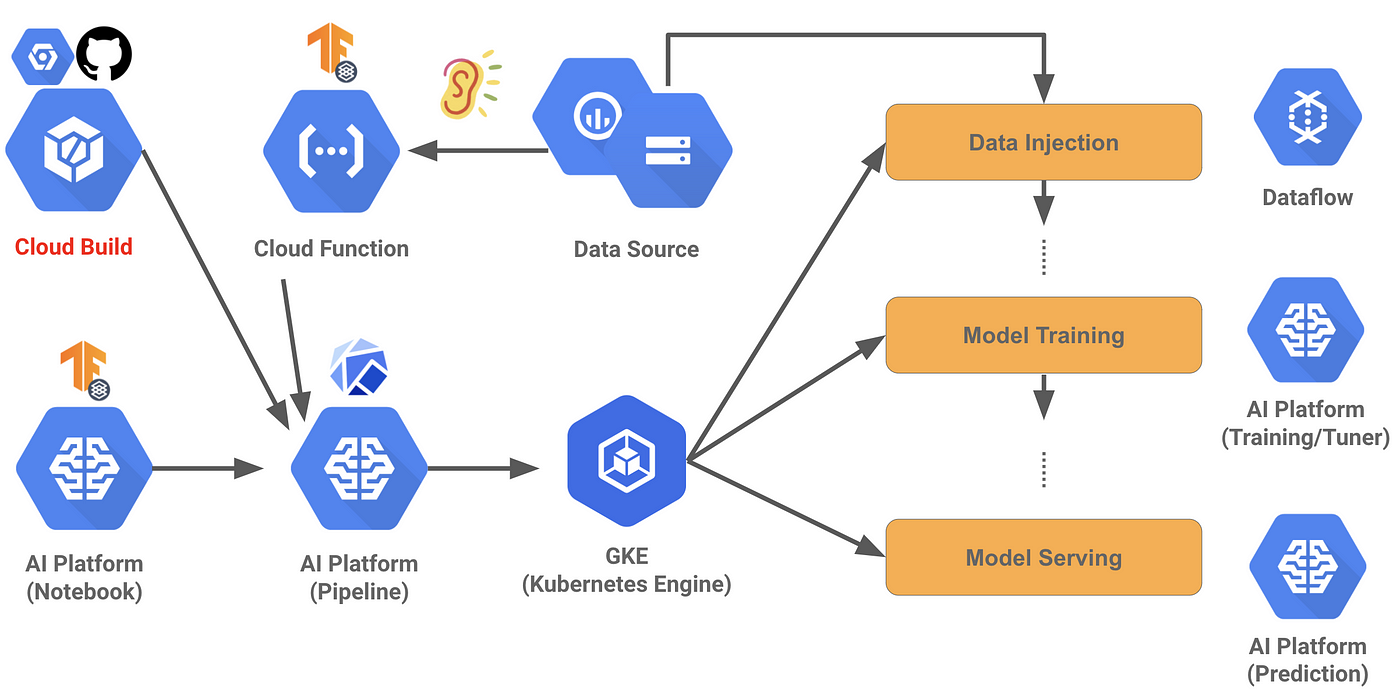

“MLOps: Big Picture in GCP. “Why do we …” from medium.com and used with no modifications.

Understanding MLOps on Google Cloud Platform

Definition and Importance

MLOps is essential for scaling machine learning efforts in any organization. It addresses the complexities of deploying ML models in production, where models need to be continuously updated and maintained. Google Cloud Platform provides a comprehensive suite of tools that support the entire ML lifecycle, making it easier to implement MLOps practices.

Benefits of Using Google Cloud for MLOps

Google Cloud offers several advantages for MLOps, including:

Scalability: GCP’s infrastructure can handle large-scale ML workloads, ensuring that models can be trained and deployed efficiently.

Integration: GCP integrates seamlessly with other Google services, such as BigQuery for data storage and analysis, and Google Kubernetes Engine (GKE) for container orchestration.

Automation: Tools like Vertex AI and Cloud Build automate many aspects of the ML lifecycle, from data preprocessing to model deployment.

Security: GCP provides controls such as Identity and Access Management (IAM), encryption of data at rest by default, and VPC Service Controls, which help protect data and models throughout their lifecycle.

Core Components of MLOps on Google Cloud

Google Cloud offers several key components that are integral to implementing MLOps. These tools and services work together to create a seamless ML pipeline.

Vertex AI

Vertex AI is Google Cloud’s unified AI platform that simplifies the process of building, deploying, and scaling ML models. It provides a range of tools for data preparation, model training, and deployment, all within a single platform.

With Vertex AI, you can:

Train and deploy models using AutoML, prebuilt training containers, or custom code.

Automate hyperparameter tuning to optimize model performance.

Monitor deployed models for training-serving skew and prediction drift, and receive alerts when their inputs change.
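Vertex AI runs hyperparameter tuning as a managed service: you declare a search space and a metric, and it launches trials for you. To show what that loop does conceptually, here is a minimal Python sketch that uses plain random search as a stand-in. Vertex AI offers its own search algorithms, so treat this only as an illustration; `random_search`, `toy_objective`, and the parameter names are hypothetical, and a real objective would train a model and return a validation metric.

```python
import random
from typing import Callable, Dict, Tuple

# Hypothetical search space: parameter name -> (low, high) bounds.
SearchSpace = Dict[str, Tuple[float, float]]


def random_search(
    objective: Callable[[Dict[str, float]], float],
    space: SearchSpace,
    n_trials: int = 20,
    seed: int = 0,
) -> Tuple[Dict[str, float], float]:
    """Sample n_trials configurations uniformly and return the best (highest-scoring) one."""
    rng = random.Random(seed)  # a fixed seed makes the search reproducible
    best_params: Dict[str, float] = {}
    best_score = float("-inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in space.items()}
        score = objective(params)  # in practice: train a model, return a validation metric
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_objective(params: Dict[str, float]) -> float:
    # Hypothetical stand-in for validation accuracy; peaks at learning_rate=0.1, dropout=0.2.
    return -((params["learning_rate"] - 0.1) ** 2) - (params["dropout"] - 0.2) ** 2


if __name__ == "__main__":
    space = {"learning_rate": (0.001, 0.5), "dropout": (0.0, 0.5)}
    params, score = random_search(toy_objective, space, n_trials=50)
    print(params, score)
```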

TensorFlow Extended (TFX)

TensorFlow Extended (TFX) is an end-to-end platform for deploying production ML pipelines. It provides a set of components that help with data validation, transformation, model training, and serving.

Key features of TFX include:

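Data Validation: Components such as StatisticsGen, SchemaGen, and ExampleValidator compute statistics, infer a schema, and flag anomalies, ensuring that input data is consistent and meets quality standards.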

Transform: Preprocesses data to make it suitable for model training.

Trainer: Trains the model using TensorFlow.

Pusher: Pushes a validated model to a serving target, such as TensorFlow Serving or a Vertex AI endpoint, for inference.
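TFX performs data validation with TensorFlow Data Validation (TFDV). The minimal sketch below is not TFDV's API; it only illustrates the idea in plain Python, assuming rows arrive as dictionaries: infer a schema from training data, then flag missing features, type mismatches, and unseen categorical values in new data. `infer_schema` and `validate` are hypothetical names.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def infer_schema(rows: List[Row]) -> Dict[str, dict]:
    """Infer a simple schema: each feature's type, plus the value set for string features."""
    schema: Dict[str, dict] = {}
    for row in rows:
        for name, value in row.items():
            # The first type seen for a feature becomes its expected type.
            entry = schema.setdefault(name, {"type": type(value).__name__, "values": set()})
            if isinstance(value, str):
                entry["values"].add(value)
    return schema


def validate(rows: List[Row], schema: Dict[str, dict]) -> List[str]:
    """Return readable anomalies: missing features, type mismatches, unseen categories."""
    anomalies: List[str] = []
    for i, row in enumerate(rows):
        for name, spec in schema.items():
            if name not in row:
                anomalies.append(f"row {i}: missing feature '{name}'")
                continue
            value = row[name]
            if type(value).__name__ != spec["type"]:
                anomalies.append(
                    f"row {i}: '{name}' expected {spec['type']}, got {type(value).__name__}"
                )
            elif isinstance(value, str) and value not in spec["values"]:
                anomalies.append(f"row {i}: '{name}' has unseen value '{value}'")
    return anomalies
```

For example, with training rows `{"country": "US", "age": 34}` and `{"country": "DE", "age": 51}`, validating `{"country": "FR", "age": 29}` and `{"country": "US"}` reports an unseen value `FR` for `country` in row 0 and a missing `age` feature in row 1.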

Cloud Build

Cloud Build is a continuous integration and continuous delivery (CI/CD) platform on Google Cloud that automates the process of building, testing, and deploying code. It integrates seamlessly with other Google Cloud services, making it an essential tool for MLOps.

With Cloud Build, you can define custom workflows using YAML configuration files. These workflows can include steps for data preprocessing, model training, testing, and deployment. By automating these tasks, Cloud Build ensures that your ML pipelines are consistent and reproducible.
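Cloud Build accepts its configuration as YAML or JSON. As a hedged illustration, the Python sketch below produces an equivalent JSON config with two stages: run unit tests, then build a training container image that Cloud Build pushes to Artifact Registry once every step succeeds. The region, repository, image name, and the `requirements.txt`/`tests/` layout are assumptions; `$PROJECT_ID` and `$SHORT_SHA` are Cloud Build substitutions, and `$SHORT_SHA` is populated for builds started by a trigger.

```python
import json

# Hypothetical names; replace with your own region, repository, and image.
REGION = "us-central1"
REPO = "ml-repo"
IMAGE = "trainer"
IMAGE_URI = f"{REGION}-docker.pkg.dev/$PROJECT_ID/{REPO}/{IMAGE}:$SHORT_SHA"


def build_config() -> dict:
    """Return a Cloud Build config with a test stage followed by an image build."""
    return {
        "steps": [
            {
                # Step 1: run unit tests inside a stock Python image.
                "id": "unit-tests",
                "name": "python:3.11",
                "entrypoint": "bash",
                "args": ["-c", "pip install -r requirements.txt && python -m pytest tests/"],
            },
            {
                # Step 2: build the image. Without waitFor, a step waits for all
                # earlier steps, so this runs only if the tests passed.
                "id": "build-image",
                "name": "gcr.io/cloud-builders/docker",
                "args": ["build", "-t", IMAGE_URI, "."],
            },
        ],
        # Images listed here are pushed after all steps succeed.
        "images": [IMAGE_URI],
    }


if __name__ == "__main__":
    # Redirect this output to cloudbuild.json in the repository root.
    print(json.dumps(build_config(), indent=2))
```

A build trigger connected to your repository can then run this config on every push, which is also what populates `$SHORT_SHA`.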

Implementing Continuous Integration and Delivery

Continuous Integration (CI) and Continuous Delivery (CD) are critical components of MLOps. CI ensures that changes to the codebase are automatically tested and validated, while CD automates the deployment of models to production environments. Implementing CI/CD pipelines on Google Cloud ensures that your ML models are always up-to-date and reliable.

Setting Up CI/CD Pipelines

Setting up a CI/CD pipeline on Google Cloud typically involves the following steps. Container platforms such as Google Kubernetes Engine (GKE) can be integrated along the way to speed up software releases.

Define the Pipeline: Use a YAML file to define the steps in your CI/CD pipeline. This file specifies the tasks to be performed, such as data preprocessing, model training, and deployment.

Integrate with Source Control: Connect your pipeline to a source control system like GitHub or GitLab. This integration ensures that changes to the codebase trigger the pipeline automatically.

Automate Testing: Include steps in your pipeline to automatically test the model. These tests can include unit tests, integration tests, and performance tests.

Deploy the Model: Automate the deployment of the trained model to a production environment. Use tools like Vertex AI or TensorFlow Serving to deploy the model.
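The testing and deployment steps above meet at a promotion gate: the candidate model is deployed only if it passes its checks. Below is a minimal Python sketch of such a gate, assuming an earlier pipeline step has already produced accuracy and p95 latency figures for the candidate and for the model currently in production. `EvalResult`, `promotion_checks`, `gate`, and the default thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EvalResult:
    """Metrics produced by a hypothetical evaluation step in the CI pipeline."""
    accuracy: float
    p95_latency_ms: float


def promotion_checks(candidate: EvalResult, baseline: EvalResult,
                     max_accuracy_drop: float = 0.01,
                     latency_budget_ms: float = 100.0) -> List[str]:
    """Return the failed checks; an empty list means the candidate may be deployed."""
    failures: List[str] = []
    # Quality check: allow at most a small drop relative to the production model.
    if candidate.accuracy < baseline.accuracy - max_accuracy_drop:
        failures.append(
            f"accuracy {candidate.accuracy:.3f} is below baseline "
            f"{baseline.accuracy:.3f} minus tolerance {max_accuracy_drop}"
        )
    # Performance check: tail latency must stay within budget.
    if candidate.p95_latency_ms > latency_budget_ms:
        failures.append(
            f"p95 latency {candidate.p95_latency_ms:.0f} ms exceeds "
            f"budget of {latency_budget_ms:.0f} ms"
        )
    return failures


def gate(candidate: EvalResult, baseline: EvalResult) -> int:
    """Print any failed checks and return a process exit code (0 = deploy, 1 = block)."""
    failures = promotion_checks(candidate, baseline)
    for message in failures:
        print(f"FAILED: {message}")
    return 1 if failures else 0
```

In a Cloud Build step, ending the script with `sys.exit(gate(candidate, baseline))` makes the step fail on a non-zero code, which fails the build so the deployment step never runs.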

Tools and Techniques

Several tools and techniques can enhance your CI/CD pipelines on Google Cloud:

Kubeflow Pipelines: A platform for building and deploying portable, scalable ML workflows based on Kubernetes. It integrates with Google Kubernetes Engine (GKE) to provide a robust infrastructure for running ML pipelines.

Cloud Functions: Serverless functions that run in response to events, such as a new file landing in a Cloud Storage bucket or a message published to a Pub/Sub topic when a pipeline step completes. Cloud Functions can be used to automate tasks like starting data preprocessing or model evaluation jobs.

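Artifact Registry: A secure repository for storing and managing container images, language packages, and other artifacts. Use Artifact Registry to store the training and serving container images and other dependencies needed for deployment; trained model files are commonly kept in Cloud Storage and registered in Vertex AI Model Registry.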
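To make the event-driven pattern concrete, here is a hedged Python sketch of the logic a Cloud Storage-triggered function might run to decide whether a newly uploaded file should start a training pipeline. It assumes the event carries the object's `bucket` and `name` fields, as Cloud Storage object notifications do; the `incoming/training/` prefix, the `training_data_uri` parameter, and both function names are hypothetical, and the actual pipeline submission is left as a comment.

```python
from typing import Optional

# Hypothetical layout: new training data lands under this prefix as CSV files.
DATA_PREFIX = "incoming/training/"


def pipeline_params_for(event: dict) -> Optional[dict]:
    """Map a Cloud Storage object event to pipeline parameters, or None to ignore it.

    `event` is assumed to carry the object's `bucket` and `name`.
    """
    name = event.get("name", "")
    if not name.startswith(DATA_PREFIX) or not name.endswith(".csv"):
        return None  # ignore unrelated uploads so the pipeline doesn't run on every write
    return {"training_data_uri": f"gs://{event['bucket']}/{name}"}


def handle_upload(event: dict, context=None) -> None:
    """Entry point in the style of a Cloud Storage-triggered function."""
    params = pipeline_params_for(event)
    if params is None:
        return
    # Here you would submit the pipeline run, for example with the Vertex AI SDK:
    # aiplatform.PipelineJob(..., parameter_values=params).submit()
    print(f"would submit pipeline with {params}")
```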

Automating Model Training

Automating model training is a key aspect of MLOps. By automating the training process, you can ensure that models are always up-to-date and optimized for performance. Google Cloud provides several tools and services to automate model training, making it easier to manage the ML lifecycle.

Continuous Training (CT) in MLOps

Continuous Training (CT) involves automatically retraining models as new data becomes available. This approach ensures that models remain accurate and relevant over time. On Google Cloud, you can set up CT pipelines using Vertex AI and TFX.

For example, you can schedule regular retraining jobs using Vertex AI Pipelines. These jobs can be triggered by events, such as the arrival of new data or changes to the model code. By automating the retraining process, you can reduce manual intervention and ensure that your models are always performing at their best.
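Whether a retraining run should start is ultimately a policy decision. A minimal Python sketch of one such policy, combining the triggers described above (new data arriving, model code changing) with a scheduled refresh, might look like this. `RetrainPolicy`, `should_retrain`, and the thresholds are hypothetical; in practice the inputs would come from your data warehouse and source control.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class RetrainPolicy:
    """Hypothetical policy: retrain on a code change, on enough new data, or on a schedule."""
    min_new_rows: int = 10_000
    max_model_age: timedelta = timedelta(days=7)


def should_retrain(policy: RetrainPolicy, last_trained: datetime, now: datetime,
                   new_rows_since_training: int, code_changed: bool) -> bool:
    if code_changed:
        return True  # model code changed: retrain so the deployed model matches the codebase
    if new_rows_since_training >= policy.min_new_rows:
        return True  # enough fresh data has arrived
    return now - last_trained >= policy.max_model_age  # scheduled refresh
```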

Best Practices for Automated Training

To ensure the success of your automated training pipelines, follow these best practices:

Data Versioning: Keep track of different versions of your training data. For example, use BigQuery table snapshots, or have Dataflow jobs write immutable, dated outputs to Cloud Storage, and record which version each model was trained on.

Hyperparameter Tuning: Automate the process of hyperparameter tuning to optimize model performance. Use Vertex AI’s built-in hyperparameter tuning capabilities to find the best hyperparameters for your model.

Model Evaluation: Continuously evaluate the performance of your models using metrics like accuracy, precision, and recall. Use Vertex AI’s model evaluation tools to monitor and compare different versions of your model.
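As a reference point for the evaluation metrics named above, here is a small pure-Python sketch that computes accuracy, precision, and recall for binary labels, which can be used to compare two model versions on the same held-out set. It is illustrative only; `binary_metrics` is a hypothetical helper, not part of Vertex AI's evaluation tooling.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, and recall for binary labels (1 = positive class)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs) if pairs else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }
```

On labels `[1, 0, 1, 1, 0, 0, 1, 0]`, a version predicting `[1, 0, 0, 1, 0, 1, 1, 0]` scores 0.75 on all three metrics, while one predicting `[1, 0, 1, 1, 1, 0, 1, 0]` reaches accuracy 0.875, precision 0.8, and recall 1.0, so the second version is better on every metric.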

Managing and Monitoring Deployments

Once your model is deployed, it’s essential to monitor its performance and ensure that it continues to deliver accurate predictions. Google Cloud provides several tools for managing and monitoring ML deployments, allowing you to detect and address issues quickly.

Continuous Monitoring (CM)

Continuous Monitoring (CM) involves tracking the performance of your deployed models as they serve predictions. By continuously monitoring your models, you can detect issues like data drift, model degradation, and anomalies. Google Cloud offers several services for continuous monitoring, including Vertex AI Model Monitoring, Cloud Monitoring, and Cloud Logging (the last two formerly known as Stackdriver).
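Data drift is usually detected by comparing a feature's distribution in the training data with its distribution in recent serving traffic. Vertex AI Model Monitoring's documentation describes distance scores of this kind (L-infinity distance for categorical features, Jensen-Shannon divergence for numerical ones). The sketch below computes both in plain Python on categorical or already-binned values; it is not Vertex AI's implementation, and `drifted` with its 0.3 threshold is a hypothetical example.

```python
import math
from collections import Counter
from typing import Dict, Hashable, Sequence

Dist = Dict[Hashable, float]


def distribution(values: Sequence[Hashable]) -> Dist:
    """Normalize raw values (categories or pre-binned numbers) into probabilities."""
    counts = Counter(values)
    total = sum(counts.values())
    return {k: c / total for k, c in counts.items()}


def l_infinity(p: Dist, q: Dist) -> float:
    """Largest absolute probability difference for any single category."""
    keys = set(p) | set(q)
    return max(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def jensen_shannon(p: Dist, q: Dist) -> float:
    """Jensen-Shannon divergence with log base 2, so the result lies in [0, 1]."""
    keys = set(p) | set(q)
    m = {k: 0.5 * (p.get(k, 0.0) + q.get(k, 0.0)) for k in keys}

    def kl_to_m(a: Dist) -> float:
        # Zero-probability terms contribute nothing; m[k] > 0 whenever a[k] > 0.
        return sum(a[k] * math.log2(a[k] / m[k]) for k in a if a[k] > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)


DRIFT_THRESHOLD = 0.3  # hypothetical alert threshold


def drifted(train_values: Sequence[Hashable], serving_values: Sequence[Hashable],
            threshold: float = DRIFT_THRESHOLD) -> bool:
    """Flag a categorical feature whose serving distribution moved past the threshold."""
    return l_infinity(distribution(train_values), distribution(serving_values)) > threshold
```

For instance, if a `country` feature is 80% `US` and 20% `DE` in training but 30% `US` and 70% `DE` in serving traffic, the L-infinity distance is about 0.5, so `drifted` returns `True`.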

Tools for Monitoring and Logging

Effective monitoring and logging are crucial for maintaining the reliability of your ML models. Google Cloud provides several tools to help you monitor and log your deployments.

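Vertex AI Model Monitoring: Tracks training-serving skew and prediction drift in the input features of deployed models, and sends alerts when a feature's distribution moves past a threshold you configure. It works on input distributions rather than accuracy, because ground-truth labels are usually not available at prediction time.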

Cloud Logging (formerly Stackdriver Logging): Collects and stores logs from your ML applications. Use Cloud Logging to analyze logs and troubleshoot issues in your deployment pipeline.

Cloud Monitoring: Offers a comprehensive suite of monitoring tools, including dashboards, alerts, and custom metrics. Use Cloud Monitoring to track the health and performance of your ML infrastructure.
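Cloud Logging can parse single-line JSON written to standard output or standard error by workloads on services such as GKE and Cloud Run, treating fields like `severity` and `message` specially. Here is a minimal sketch, assuming your model server logs through Python's standard `logging` module: a formatter that emits such JSON lines, so entries can be filtered by severity or by custom fields. The `fields` convention and the class and function names are hypothetical.

```python
import json
import logging
import sys


class CloudLoggingJsonFormatter(logging.Formatter):
    """Format each record as one JSON line with `severity` and `message` fields."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            # Python level names (DEBUG, INFO, WARNING, ERROR, CRITICAL) are valid severities.
            "severity": record.levelname,
            "message": record.getMessage(),
            "logger": record.name,
        }
        # Extra structured fields passed via extra={"fields": {...}} (a convention of this sketch).
        fields = getattr(record, "fields", None)
        if isinstance(fields, dict):
            entry.update(fields)
        return json.dumps(entry)


def make_logger(name: str = "model-server", stream=sys.stdout) -> logging.Logger:
    """Return a logger that writes JSON lines to `stream` (stdout by default)."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(CloudLoggingJsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate, non-JSON output from the root logger
    return logger
```

A call such as `make_logger().info("prediction served", extra={"fields": {"model_version": "v2", "latency_ms": 42}})` writes one JSON line whose `model_version` and `latency_ms` values can then be queried in Cloud Logging.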

Case Studies of MLOps on Google Cloud

To illustrate the effectiveness of MLOps on Google Cloud, let’s look at some real-world examples. These case studies demonstrate how organizations have successfully implemented MLOps practices to streamline their ML workflows and achieve better outcomes.

One notable example is a large retail company that used Google Cloud’s MLOps tools to optimize their inventory management system. By automating the training and deployment of their ML models, they were able to reduce stockouts and improve customer satisfaction. The company used Vertex AI to manage their entire ML pipeline, from data preprocessing to model deployment. They also leveraged Cloud Build to automate their CI/CD workflows, ensuring that their models were always up-to-date.

Another example is a healthcare organization that implemented MLOps on Google Cloud to improve patient outcomes. The organization used TFX to build and deploy ML models that predicted patient readmissions. By continuously monitoring and retraining their models, they were able to achieve higher accuracy and reduce readmission rates. The healthcare organization also used Cloud Logging (formerly Stackdriver Logging) to monitor their deployments and quickly address any issues that arose.

Lessons Learned and Best Practices

From these real-world examples, several key lessons and best practices can be gleaned:

Automate Repetitive Tasks: Automating tasks such as data preprocessing, model training, and deployment reduces human error and speeds up the ML lifecycle.

Continuous Monitoring: Regularly monitor your deployed models to detect and address issues quickly. Use tools like Vertex AI Model Monitoring and Cloud Logging for ongoing insight into model behavior.

Collaboration: Foster collaboration between data scientists, ML engineers, and DevOps teams to ensure robust and reliable models.

Regular Retraining: Implement continuous training pipelines to keep your models up-to-date with the latest data.

Use Managed Services: Leverage managed services like Vertex AI, including Vertex AI Pipelines (which can run TFX pipelines), to simplify the management of your ML pipelines.

By following these best practices, organizations can streamline their ML workflows and achieve better outcomes with MLOps on Google Cloud.

Conclusion: Mastering MLOps for Efficient Deployments

Mastering MLOps on Google Cloud Platform is essential for organizations looking to scale their machine learning efforts. By leveraging tools like Vertex AI, TFX, and Cloud Build, you can automate and streamline the entire ML lifecycle, from data preprocessing and model training to deployment and monitoring. Continuous integration, delivery, and monitoring ensure that your models are always up-to-date and performing at their best. By following best practices and learning from real-world examples, you can achieve seamless ML deployments and drive better business outcomes.

Frequently Asked Questions (FAQ)

Here are some common questions about MLOps on Google Cloud Platform:

What is the role of Vertex AI in MLOps?

Vertex AI is a unified AI platform on Google Cloud that simplifies the process of building, deploying, and scaling ML models. It provides a range of tools for data preparation, model training, and deployment, all within a single platform. Vertex AI also offers features like hyperparameter tuning, model monitoring, and automated pipelines, making it an essential component of MLOps on Google Cloud.

How does Continuous Integration differ from Continuous Delivery in MLOps?

Continuous Integration (CI) involves automatically testing and validating changes to the codebase to ensure that they do not introduce errors. CI pipelines typically include steps for running unit tests, integration tests, and performance tests. Continuous Delivery (CD), on the other hand, automates the deployment of models to production environments. CD pipelines include steps for packaging the model, deploying it to a production environment, and verifying that the deployment was successful.

CI ensures that changes to the codebase are automatically tested and validated.

CD automates the deployment of models to production environments.

Both CI and CD are critical components of MLOps, ensuring that models are always up-to-date and reliable.

What are the best practices for automated model training?

Best practices for automated model training include:

Data Versioning: Keep track of different versions of your training data to ensure reproducibility.

Hyperparameter Tuning: Automate the process of hyperparameter tuning to optimize model performance.

Model Evaluation: Continuously evaluate the performance of your models using metrics like accuracy, precision, and recall.

Regular Retraining: Implement continuous training pipelines to keep your models up-to-date with the latest data.
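Data versioning does not require special tooling to start with. One minimal approach, sketched in Python under the assumption that your training data is a set of files you can read as bytes, is to derive a version ID from a content hash and store it in a manifest next to the trained model, so any run can be traced back to the exact data it used. `dataset_fingerprint` and `training_manifest` are hypothetical helpers.

```python
import hashlib
import json
from typing import Dict, Iterable, Tuple


def dataset_fingerprint(files: Iterable[Tuple[str, bytes]]) -> str:
    """Hash (path, contents) pairs in sorted order so identical data yields an identical ID."""
    h = hashlib.sha256()
    for path, data in sorted(files):
        h.update(path.encode("utf-8"))
        h.update(b"\0")  # separator between the path and the file digest
        h.update(hashlib.sha256(data).digest())  # fixed-length per-file digest
    return h.hexdigest()


def training_manifest(model_name: str, files: Iterable[Tuple[str, bytes]],
                      params: Dict[str, float]) -> str:
    """Hypothetical manifest stored next to the model so the run can be reproduced later."""
    files = list(files)
    return json.dumps(
        {
            "model": model_name,
            "data_version": dataset_fingerprint(files),
            "num_files": len(files),
            "params": params,
        },
        sort_keys=True,
    )
```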

Why is continuous monitoring important in MLOps?

Continuous monitoring is crucial in MLOps because it lets you track the behavior of your deployed models as they serve predictions. By continuously monitoring your models, you can detect issues like data drift, model degradation, and anomalies. This helps keep your models accurate and reliable over time. Tools like Vertex AI Model Monitoring and Cloud Logging (formerly Stackdriver Logging) provide ongoing insight into your model's performance, allowing you to address issues quickly.

Can you provide examples of successful MLOps implementations on Google Cloud?

Yes. The retail inventory-management and healthcare readmission-prediction case studies described above are two examples.

More generally, MLOps on Google Cloud Platform supports smooth machine learning deployments by integrating development and operations. With Kubernetes (for example, on GKE), teams can manage and scale their machine learning models efficiently, and Kubeflow provides a comprehensive suite of tools that streamlines the entire machine learning lifecycle.