Main Points

- Istio provides a wide range of features and customization options, but requires more resources and has a higher learning curve than Linkerd.

- Linkerd values simplicity and performance, using significantly fewer resources while offering essential service mesh features with lower latency.

- When deciding between these service meshes, consider your team’s experience, the scale of your infrastructure, and your specific needs for traffic management, security, and observability.

- Linkerd is generally better for teams that are new to service mesh technology or have limited resources, while Istio is better suited to complex enterprise environments that require extensive customization.

- Both service meshes address key microservices challenges, such as service discovery, load balancing, and secure communication, without requiring changes to the code.

Choosing the right service mesh can make a big difference to your microservices architecture. As more and more organizations adopt cloud-native technologies, service meshes have become a vital part of the infrastructure for managing complex, distributed systems.

Istio and Linkerd are the top two competitors in the service mesh arena. They both aim to simplify the management of microservices, but they go about it in completely different ways. They differ greatly in terms of complexity, performance, and the features they offer. According to the comprehensive research conducted by Solo.io on service mesh technologies, it is vital to understand these differences in order to make a decision that best suits the specific needs and technical capabilities of your organization.

Why Service Meshes are Crucial for Microservices

The way we develop applications has been transformed by microservices architectures. They allow teams to build, deploy, and scale services independently. But this approach also adds a lot of complexity to communication between services, enforcing security, and observability across distributed environments.

The Communication Hurdles of Contemporary Microservices

In the world of microservices, applications are broken down into dozens or even hundreds of tiny, specialized services. Each of these services needs to find, connect with, and securely communicate with other services. As your application grows, managing these interactions becomes more and more complicated, especially when you have to deal with authentication, authorization, and circuit breaking.

Conventional networking methods often struggle in these volatile environments, where services are continuously deployed, scaled, and terminated. Without suitable tools, developers are left to build complex networking code into their applications, resulting in duplicated effort, inconsistent implementations, and a greater maintenance workload. To mitigate these challenges, developers can explore service discovery tools that streamline networking processes.

How Service Meshes Tackle Key Infrastructure Issues

Service meshes tackle these problems by providing a dedicated infrastructure layer for managing service-to-service communication. They offer several key capabilities:

- Service Discovery: They automatically locate and connect to services, eliminating the need for hardcoded addresses.

- Load Balancing: Service meshes intelligently distribute traffic among service instances.

- Traffic Management: They implement circuit breakers, retries, and timeouts.

- Security: Service meshes enforce mutual TLS, access policies, and authentication.

- Observability: They collect metrics, logs, and traces without requiring changes to the application.

One of the main advantages of service meshes is that they provide these features without requiring any changes to the application code. This is accomplished by injecting lightweight proxies (sidecars) next to each service instance. The service mesh intercepts all network traffic, applies policies, and collects telemetry in a transparent manner.
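The sidecar idea can be illustrated with a toy model. The sketch below is not how Envoy or the Linkerd proxy is implemented; it only assumes a hypothetical `SidecarProxy` wrapper and an `orders_service` handler to show that policy checks and telemetry can sit around application code that knows nothing about them.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class SidecarProxy:
    """Toy stand-in for a sidecar: wraps a handler, enforces policy, records telemetry."""
    handler: Callable[[str], str]                      # the unmodified application code
    allowed_callers: Set[str] = field(default_factory=set)
    latencies_ms: List[float] = field(default_factory=list)
    denied: int = 0

    def handle(self, caller: str, request: str) -> str:
        # Policy is enforced in the proxy, not in the application.
        if caller not in self.allowed_callers:
            self.denied += 1
            return "403 denied by mesh policy"
        start = time.perf_counter()
        response = self.handler(request)
        # Telemetry is recorded transparently around the call.
        self.latencies_ms.append((time.perf_counter() - start) * 1000)
        return response


def orders_service(request: str) -> str:
    # Application logic: no networking, policy, or metrics code here.
    return f"order accepted: {request}"


proxy = SidecarProxy(handler=orders_service, allowed_callers={"checkout"})
print(proxy.handle("checkout", "sku-42"))
print(proxy.handle("unknown", "sku-42"))
```

A real mesh does this at the network level, by redirecting the pod's traffic through the proxy, rather than by wrapping function calls.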

Istio: Google’s Comprehensive Service Mesh

Created by Google, IBM, and Lyft, Istio has become one of the most extensive service mesh solutions on the market. Its architecture is a testament to Google’s experience with large-scale distributed systems and offers a wide range of configuration options for intricate deployment scenarios.

Basic Structure and Elements

Istio is designed with a distinct division between its control plane and its data plane. The control plane configures the proxies to enforce rules and gather telemetry. Its central component is Istiod, which combines several control functions, including distributing configuration, managing certificates, and discovering services.

The data plane is made up of Envoy proxies that are deployed as sidecars next to each service. These high-performance, extensible proxies manage all network communication to and from the services they are paired with. This design allows Istio to offer a wide range of features while keeping a clear separation of concerns.

In microservices architecture, service mesh solutions like Istio and Linkerd have become indispensable tools for managing complex service-to-service communication. These platforms offer features such as load balancing, service discovery, and security, which are crucial for maintaining robust and scalable applications. As organizations increasingly adopt cloud-native technologies, understanding the differences and capabilities of these service mesh solutions becomes essential.

“Istio / Architecture” from istio.io and used with no modifications.

Managing Traffic

Istio is a leader in traffic management, with features that go well beyond simple routing. Its traffic shifting capabilities give you precise control over request flows, enabling deployment strategies such as canary releases and blue-green deployments, so you can test new features with a limited user base before rolling them out to all users.
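As a rough illustration of a canary release, the sketch below builds an Istio VirtualService manifest that sends a fixed percentage of traffic to a canary subset. The service name `reviews` and the subset names `stable` and `canary` are hypothetical, and the subsets are assumed to be defined in a separate DestinationRule. The output is JSON, which `kubectl apply -f` accepts alongside YAML.

```python
import json


def canary_virtual_service(host: str, stable_weight: int, canary_weight: int) -> dict:
    """Build a VirtualService that splits HTTP traffic between two subsets."""
    if stable_weight + canary_weight != 100:
        raise ValueError("weights must sum to 100")
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": f"{host}-canary"},
        "spec": {
            "hosts": [host],
            "http": [{
                "route": [
                    # Subset names are assumptions; they must match a DestinationRule.
                    {"destination": {"host": host, "subset": "stable"},
                     "weight": stable_weight},
                    {"destination": {"host": host, "subset": "canary"},
                     "weight": canary_weight},
                ]
            }],
        },
    }


# Send 10% of requests to the canary version.
print(json.dumps(canary_virtual_service("reviews", 90, 10), indent=2))
```

Promoting the canary then amounts to re-applying the manifest with the weights shifted step by step toward the new version.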

Istio has a solid circuit breaker system that stops the domino effect of service failures by restricting the influence of services that are failing. If a service begins to malfunction, Istio can identify these problems and temporarily reroute traffic away from the instances causing issues. This gives them a chance to recover without impacting the whole application.
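The ejection behavior described here can be sketched in a few lines. This is a simplified model, not Istio's implementation (Istio configures the equivalent through outlier detection settings on a DestinationRule). The class name, thresholds, and explicit `now` timestamps are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class EjectingBreaker:
    """Ejects an instance after N consecutive failures, for a fixed cool-down."""
    max_consecutive_failures: int = 5
    ejection_seconds: float = 30.0
    failures: int = 0
    ejected_until: float = 0.0

    def allows(self, now: float) -> bool:
        # The instance receives traffic again once the cool-down has passed.
        return now >= self.ejected_until

    def record(self, success: bool, now: float) -> None:
        if success:
            self.failures = 0          # any success resets the streak
            return
        self.failures += 1
        if self.failures >= self.max_consecutive_failures:
            # Too many failures in a row: take the instance out of rotation.
            self.ejected_until = now + self.ejection_seconds
            self.failures = 0


breaker = EjectingBreaker(max_consecutive_failures=3, ejection_seconds=10.0)
for t in (0.0, 1.0, 2.0):
    breaker.record(success=False, now=t)
print(breaker.allows(now=5.0), breaker.allows(now=12.0))
```

Passing the clock in explicitly keeps the sketch deterministic; a proxy would use its own monotonic time source.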

Security Features and Implementation

Istio’s security model is multi-layered and very comprehensive. At its core, Istio provides automatic mutual TLS (mTLS) encryption between services, ensuring that all communication within the mesh is encrypted and authenticated. This happens transparently, without requiring developers to implement encryption logic in their applications.

Aside from encryption, Istio provides detailed access control via its authorization policies. Administrators have the power to specify the exact services that are able to communicate with each other, and this is based on several attributes such as identity, source, and headers. This zero-trust approach guarantees that even if a hacker manages to gain access to your cluster, their capacity to move laterally is significantly restricted. For more insights on security management, explore Kubernetes secrets management techniques.
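To make the two security layers concrete, the sketch below generates a PeerAuthentication that requires mTLS in a namespace and an AuthorizationPolicy that lets a single service account call a workload. The namespace, app label, and service account names are hypothetical, and the principal assumes the default `cluster.local` trust domain.

```python
import json
from typing import List


def strict_mtls(namespace: str) -> dict:
    """PeerAuthentication that only accepts mutual-TLS traffic in a namespace."""
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": "STRICT"}},
    }


def allow_caller(namespace: str, app: str, caller_sa: str, methods: List[str]) -> dict:
    """AuthorizationPolicy letting one service account call one workload."""
    # Assumes the default trust domain; adjust if the mesh uses another one.
    principal = f"cluster.local/ns/{namespace}/sa/{caller_sa}"
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "AuthorizationPolicy",
        "metadata": {"name": f"{app}-allow-{caller_sa}", "namespace": namespace},
        "spec": {
            "selector": {"matchLabels": {"app": app}},
            "action": "ALLOW",
            "rules": [{
                "from": [{"source": {"principals": [principal]}}],
                "to": [{"operation": {"methods": methods}}],
            }],
        },
    }


print(json.dumps(strict_mtls("payments"), indent=2))
print(json.dumps(allow_caller("payments", "ledger", "checkout", ["GET"]), indent=2))
```

Once an ALLOW policy selects a workload, requests that match none of its rules are denied, which is what restricts lateral movement.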

Monitoring Tools and Integration

Istio really stands out when it comes to monitoring. It provides a detailed view of how services are behaving without needing any changes to instrumentation. Istio automatically gathers comprehensive metrics on all traffic that moves through the mesh. This includes request rates, error rates, and latencies. This makes it easy to spot performance issues and quickly fix problems.

Istio works smoothly with well-known monitoring tools such as Prometheus, Grafana, Jaeger, and Kiali, which offer a full observability stack. With these integrations, teams can see service dependencies, follow requests as they go through several services, and pinpoint the exact point of failure when problems arise.

Requirements for Resources and Impact on Performance

Istio’s broad range of features does have an impact on resource usage. Even in the smallest installations, the control plane typically requires a minimum of 1-2 CPU cores and 1.5GB of memory. As the service mesh expands, so do the requirements. Each Envoy proxy sidecar adds about 100-200MB of memory overhead and uses more CPU resources.
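A back-of-the-envelope calculation shows how the per-sidecar figure scales. The sketch below simply multiplies the 100-200MB range quoted above by a pod count; the 200-pod cluster is an assumed example, and real usage depends on configuration size and traffic.

```python
from typing import Tuple


def sidecar_memory_overhead_mb(pods: int,
                               per_proxy_mb: Tuple[int, int] = (100, 200)) -> Tuple[int, int]:
    """Return the (low, high) total sidecar memory in MB for a number of pods."""
    low, high = per_proxy_mb
    return pods * low, pods * high


low_mb, high_mb = sidecar_memory_overhead_mb(pods=200)
# For 200 meshed pods this is 20,000-40,000 MB, i.e. roughly 20-40 GB cluster-wide.
print(f"{low_mb / 1000:.0f}-{high_mb / 1000:.0f} GB of sidecar memory")
```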

Istio’s resource footprint is significantly larger than other options, such as Linkerd. Companies must weigh the benefits of Istio’s additional features against the higher resource usage, particularly in environments with limited capacity or where many small services are being run.

Linkerd: The Lighter CNCF Option

Istio may offer a more all-encompassing solution, but Linkerd is all about being simple, fast, and user-friendly. As a project that has graduated from the Cloud Native Computing Foundation (CNCF), Linkerd has become quite popular among teams who want a service mesh solution that is more streamlined and offers fundamental features without being overly complicated.

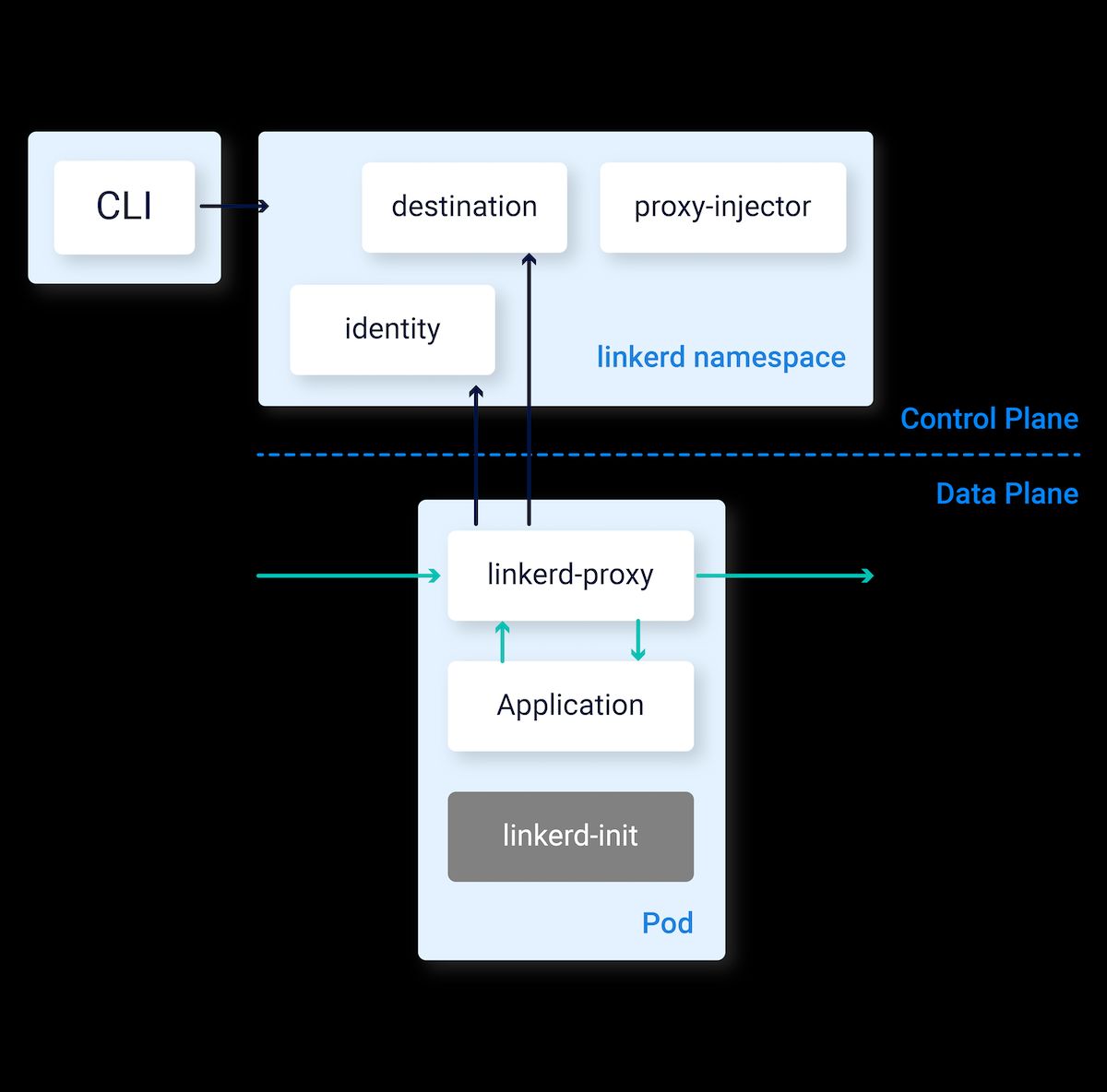

A Quick Look at Architecture

Linkerd uses a streamlined architecture that focuses on delivering the key service mesh functions without adding extra complexity. Unlike Istio, which uses Envoy proxies, Linkerd uses extremely lightweight proxies built using Rust and fine-tuned specifically for service mesh needs, which results in much less resource usage.

The control plane is also simplified, needing fewer components and less setup to get started. This architectural simplicity means faster setup times, easier maintenance, and a smaller learning curve for teams that are new to service mesh technology.

“Linkerd’s architecture” from linkerd.io and used with no modifications.

How Linkerd Handles Traffic Management

Linkerd’s traffic management strategy is practical and straightforward, prioritizing reliability and simplicity over extensive customization. It comes with an automatic retry feature that can recover from short-term failures without needing a developer to step in, greatly improving service resilience in environments where the network isn’t stable.
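The effect of automatic retries can be sketched as follows. This is generic retry logic written for illustration, not Linkerd's proxy code: the function name, the choice of `ConnectionError` as the retryable failure, and the fixed backoff are all assumptions. In a mesh, the equivalent logic runs in the proxy, so the application never sees the transient failure.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retries(call: Callable[[], T],
                      max_attempts: int = 3,
                      backoff_seconds: float = 0.0) -> T:
    """Invoke `call`, retrying on transient connection errors."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error: Optional[Exception] = None
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except ConnectionError as err:     # only transient network errors are retried
            last_error = err
            if attempt < max_attempts:
                time.sleep(backoff_seconds)
    assert last_error is not None
    raise last_error


attempts = {"count": 0}


def flaky_backend() -> str:
    # Hypothetical backend that fails twice before succeeding.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


print(call_with_retries(flaky_backend, max_attempts=3))
```

Retries should only be applied to requests that are safe to repeat, and they need an upper bound so that a struggling service is not flooded with repeated calls.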

Linkerd and Istio both have traffic split capabilities, which allow for a percentage of traffic to be allocated between different service versions. This supports basic canary deployments and A/B testing scenarios. Linkerd’s traffic management is not as feature-rich as Istio’s, but it covers the most common use cases and requires significantly less configuration complexity.

- Automatic retry and timeout handling

- Latency-aware load balancing across service instances

- Traffic splitting for canary rollouts

- Circuit breaking to avoid cascading failures

- Request routing based on HTTP headers

These features come with reasonable defaults that operate efficiently right away, needing little adjustment for most applications. This “everything you need is included” method allows teams to realize instant benefits without the need for a lot of configuration work, similar to how service discovery tools can streamline deployment processes.

Security Features

Linkerd offers automatic mTLS between services to provide robust security, ensuring that all communication within the mesh is encrypted and authenticated. Unlike many of its competitors, Linkerd automatically handles certificate rotation and management, with certificates that rotate every 24 hours by default to minimize the potential impact of key compromises. The security model is identity-based and built on a minimalist approach that emphasizes simplicity while still offering strong protection against common threats.

Options for Monitoring and Observability

Linkerd manages to maintain its lightweight nature without sacrificing observability. It automatically collects and shows golden metrics (success rates, request volumes, and latencies) for all services in the mesh, and it does this without needing any changes to the code or annotations.
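The golden metrics are simple aggregates over observed requests. The sketch below computes them from a list of samples to show what the numbers mean. It is not how Linkerd derives them internally, and the `Request` record and the nearest-rank percentile method are assumptions chosen for clarity.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    ok: bool            # whether the response counted as a success
    latency_ms: float   # observed response time


def percentile(sorted_values: List[float], p: int) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def golden_metrics(requests: List[Request], window_seconds: float) -> Dict[str, float]:
    """Success rate, request volume, and latency percentiles for one time window."""
    if not requests:
        raise ValueError("no requests observed in this window")
    latencies = sorted(r.latency_ms for r in requests)
    return {
        "success_rate": sum(r.ok for r in requests) / len(requests),
        "requests_per_second": len(requests) / window_seconds,
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "p99_ms": percentile(latencies, 99),
    }


# 100 synthetic requests with latencies 1..100 ms; the first one fails.
samples = [Request(ok=(i != 0), latency_ms=float(i + 1)) for i in range(100)]
print(golden_metrics(samples, window_seconds=10.0))
```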

Linkerd has a built-in dashboard that gives instant insight into the health and performance of the mesh. It also integrates well with Prometheus and Grafana for teams that need more advanced visualization, allowing organizations to take advantage of existing observability infrastructure.

| Type of Metric | Linkerd | Istio |
|---|---|---|
| Success Rate | Included with minimal overhead | Thorough with detailed classification |
| Latency | P50, P95, P99 percentiles | Customizable histogram buckets |
| Request Volume | Total requests with protocol breakdown | Detailed with extensive attributes |
| Tracing | Optional via OpenCensus | Integrated with sampling controls |

Linkerd’s observability strategy is unique in its emphasis on “debuggability.” The mesh is built to simplify problem-solving, with easy-to-understand error messages and diagnostic tools such as the tap feature, which enables live traffic inspection between services.

A Side-By-Side Performance Analysis

Performance is a key factor to consider when comparing service meshes, as they are positioned directly in the request path between services. Benchmarks regularly demonstrate substantial differences between Istio and Linkerd in terms of resource consumption and latency impact, which can affect both the performance of the application and the cost of the infrastructure.

Resource Efficiency

Linkerd is much more efficient than Istio when it comes to resource usage, typically using 40-60% less CPU and memory in similar deployments. Benchmark tests show that Linkerd’s control plane uses about 200-300MB of memory, while Istio’s control plane requires at least 1GB, often increasing to 2GB or more in production. This difference in efficiency also applies to the data plane, where Linkerd’s Rust-based proxies use significantly less memory per instance than Istio’s Envoy proxies. This makes Linkerd a better choice for environments with limited resources or deployments with many small services. If you’re considering a Kubernetes upgrade strategy, understanding these resource efficiencies can be crucial.

Latency Benchmarking

When it comes to measuring request latency, the performance difference between the two becomes even more obvious. Independent benchmarks have demonstrated that Linkerd adds about 0.9-1.2ms of latency per request in typical workloads, whereas Istio adds between 3 and 10ms. This difference may appear to be minor, but it accumulates with each service-to-service hop in a request path.

Applications with intricate request flows that may cross numerous services can experience significant performance degradation and a poor user experience due to Istio’s higher per-hop latency. Linkerd’s specialized proxy design, which prioritizes the specific needs of a service mesh over the general-purpose proxy approach used by Envoy in Istio, is the source of this performance discrepancy.
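The accumulation is plain multiplication, which a short calculation makes visible. The sketch below uses the per-request ranges quoted above and assumes, for simplicity, that every hop in the call chain is sequential and adds the same overhead. The 8-hop chain is a made-up example.

```python
from typing import Tuple

# Added latency per hop in milliseconds (low, high), taken from the figures above.
LINKERD_PER_HOP_MS = (0.9, 1.2)
ISTIO_PER_HOP_MS = (3.0, 10.0)


def added_latency_ms(hops: int, per_hop_ms: Tuple[float, float]) -> Tuple[float, float]:
    """Total mesh-added latency range for a request crossing `hops` services in sequence."""
    low, high = per_hop_ms
    return hops * low, hops * high


hops = 8  # hypothetical depth of a call chain
for name, per_hop in (("Linkerd", LINKERD_PER_HOP_MS), ("Istio", ISTIO_PER_HOP_MS)):
    low, high = added_latency_ms(hops, per_hop)
    print(f"{name}: {low:.1f}-{high:.1f} ms added over {hops} hops")
```

Calls made in parallel would overlap rather than add up, so this is an upper bound for a strictly sequential chain.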

Handling Increased Traffic

The two meshes respond differently to increased traffic. Linkerd maintains consistent performance as traffic increases, with minimal latency variation up to high concurrency levels. Istio can handle high loads, but it tends to show more latency variation and higher resource consumption as concurrency increases. This makes Linkerd more predictable in high-traffic scenarios, but Istio’s extensive configuration options do allow for performance tuning when necessary, provided teams have the expertise to optimize these settings.

Comparing Setup and Installation

Installation is the first impression a service mesh makes, and it often hints at what’s to come. Istio and Linkerd have markedly different installation and configuration processes, which mirror their overall differences in complexity and user-friendliness.

The complexity of installation directly affects how quickly teams can start using a service mesh. Linkerd focuses on getting users started quickly with a working mesh, while Istio requires more initial setup but allows for more customization to meet specific business needs.

How to Install Istio

Istio’s installation process has come a long way, transitioning from a single, large installation to a more modular one with IstioOperator. The current recommended method of installation is to use Helm charts or the istioctl command-line tool, which offer a wide range of configuration options to customize the deployment. However, this flexibility also introduces complexity – administrators are required to make decisions about a variety of components and settings before getting started. For those looking to maintain uptime during updates, consider a Kubernetes upgrade strategy that ensures zero downtime.

Installing Istio normally means deciding which components to install (such as ingress gateways, egress gateways, and telemetry addons), picking configuration profiles, and integrating with existing infrastructure. If you’re familiar with the system, the installation process usually takes 15-30 minutes, but it can take a lot longer for teams new to Istio because there’s a learning curve to understanding all the options.

After installation, teams frequently have to devote more time to setting up integration with monitoring tools, establishing gateways, and formulating initial traffic management policies. This extends the time-to-value, but it enables exact customization to particular organizational needs.

Linkerd’s Fast and Simple Approach

Linkerd has a significantly different approach to installation, with a focus on simplicity and speed. A basic Linkerd installation can be done in less than 5 minutes with a handful of CLI commands: a pre-flight check of the cluster (linkerd check --pre), followed by piping the output of linkerd install into kubectl apply to install the control plane (recent releases install the CRDs first with linkerd install --crds). This simplified process allows teams to get started quickly, enabling them to begin reaping the benefits of a service mesh with little initial investment.

Comparing the Complexity of Configuration

When it comes to configuration, these projects are a reflection of their overall design approaches. Istio uses a configuration model that is powerful but complex, based on Kubernetes Custom Resources. It has separate resources for virtual services, destination rules, gateways, and policies. This model provides extreme flexibility, but it requires a lot of learning and can be intimidating for those who are new to it. On the other hand, Linkerd uses a configuration model that is minimal, with sensible defaults and a smaller set of custom resources. This makes common tasks easy to do, while still providing extension points for advanced use cases.

Practical Applications and Ideal Situations

Deciding between Istio and Linkerd really comes down to your company’s unique needs, current infrastructure, and team skills. By recognizing the situations in which each mesh shines, you can make a knowledgeable choice that matches your requirements.

When Istio is the Better Choice

Istio is the top pick for businesses with intricate, multi-cluster environments that need advanced traffic management features. Large corporations with dedicated platform teams who can put time into learning and managing Istio’s extensive features will find its thorough approach beneficial. It’s especially useful for regulated industries with tight security and compliance standards, as its detailed policies can enforce complex access controls and its comprehensive audit capabilities provide the necessary trail for compliance verification. Businesses already invested in the wider Istio ecosystem (including Knative, Kubernetes Gateway API implementations, or service mesh extensions) will also discover that Istio offers better integration with these technologies.

Why Choose Linkerd?

If you’re looking for a service mesh that is simple, efficient, and quick to deliver value, Linkerd is the way to go. Its lightweight design and low operational cost make it particularly attractive for organizations with smaller platform teams, limited Kubernetes knowledge, or resource-limited environments. It suits startups and medium-sized businesses that need the fundamental service mesh features without the added complexity, while remaining user-friendly and easy to troubleshoot. Linkerd is also a strong choice for performance-sensitive applications where minimal latency overhead is key, such as financial trading platforms and real-time applications where every millisecond counts.

Things to Consider When Switching Between Service Meshes

Switching from one service mesh to another is not an easy task and needs to be thought out carefully. When moving from Istio to Linkerd or the other way around, teams must take into account the differences in configuration models, feature compatibility, and operational procedures. A slow and steady migration strategy that uses a namespace-by-namespace approach is usually the most effective, as it allows teams to confirm functionality before fully committing to the migration.
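A namespace-by-namespace migration mostly comes down to changing which mesh injects sidecars into each namespace. The sketch below generates Namespace manifests using Istio's `istio-injection` label and Linkerd's `linkerd.io/inject` annotation. The namespace names are hypothetical, and workloads are assumed to be restarted afterwards so that they pick up the new proxy.

```python
import json


def namespace_for_mesh(name: str, mesh: str) -> dict:
    """Build a Namespace manifest that opts its pods into exactly one mesh."""
    manifest = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}
    if mesh == "istio":
        # Istio's sidecar injector watches for this namespace label.
        manifest["metadata"]["labels"] = {"istio-injection": "enabled"}
    elif mesh == "linkerd":
        # Linkerd's proxy injector watches for this annotation.
        manifest["metadata"]["annotations"] = {"linkerd.io/inject": "enabled"}
    else:
        raise ValueError(f"unknown mesh: {mesh}")
    return manifest


# Hypothetical rollout plan: one namespace has moved, the other has not yet.
plan = {"checkout": "linkerd", "inventory": "istio"}
for ns, mesh in plan.items():
    print(json.dumps(namespace_for_mesh(ns, mesh), indent=2))
```

Keeping the plan as data makes it easy to move one namespace at a time and to roll a namespace back if validation fails.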

Both service meshes allow for gradual adoption, which can help mitigate migration difficulties. However, it’s worth noting that some Istio features, such as complex virtual service configurations, don’t have a direct counterpart in Linkerd, necessitating a rethinking of traffic management strategies. Likewise, businesses transitioning from Linkerd to Istio will encounter a more challenging configuration learning curve but will gain access to more sophisticated features. Regardless of the direction, comprehensive testing in staging environments is critical before moving production workloads.

Comparing the Communities and Ecosystems

The community that supports a technology often plays a big role in its long-term success and the support options that are available. Both Istio and Linkerd have strong communities, but they are different in ways that reflect the philosophies of the projects. Istio has a bigger community overall and has strong corporate support, especially from Google and IBM. This has resulted in a rich ecosystem of extensions, integrations, and tools from third parties. Because it is widely used in enterprise settings, there is a lot of knowledge about how to deploy it and best practices.

Linkerd’s community is smaller but known for being active and welcoming to newcomers. The project’s CNCF status ensures vendor-neutral governance, which has helped establish trust in its long-term sustainability. Community surveys consistently show that Linkerd users are highly satisfied, particularly with the quality of the documentation and the responsiveness of support. These community differences can significantly affect the operator experience and should be weighed when deciding which technology to adopt.

Progress and Acceptance

Both projects have reached significant maturity milestones, though they’ve followed different paths. Istio launched with considerable fanfare and corporate backing in 2017, quickly gaining adoption in enterprise environments despite early API instability. It has since stabilized and is now widely deployed in production at many large organizations. Linkerd, initially released in 2016, took a more gradual adoption path but achieved CNCF graduation status in July 2021, signifying its maturity and production readiness. While Istio has higher overall adoption numbers, Linkerd has been gaining momentum, particularly among organizations prioritizing simplicity and teams new to service mesh technology.

Support Options Available

Availability of support is an important factor to consider for production deployments. Istio has the advantage of commercial support from a number of vendors such as Google, IBM, and Solo.io, which offer enterprise support contracts with service level agreements. There are also many large consulting firms that provide Istio implementation services, creating a strong commercial ecosystem around the project. Linkerd, although it has fewer commercial support options, is supported by Buoyant (the original creator), which provides enterprise support and professional services. Its Cloud Native Computing Foundation (CNCF) status also ensures a vendor-neutral community support channel that many organizations find valuable, especially those who are worried about vendor lock-in or those who prefer community-driven support models.

Compatibility with Cloud Providers

Working with cloud providers can make service mesh operations a lot easier. Istio is well supported by major cloud providers: Google Cloud offers Anthos Service Mesh (based on Istio) as a managed service, and Azure offers an Istio-based service mesh add-on for AKS. AWS App Mesh is built on Envoy, the same proxy Istio uses, but it exposes its own API rather than Istio’s. Managed services like these lighten the load by taking care of upgrades, scaling, and monitoring of the control plane. Linkerd doesn’t have as many managed service options, but it does work well with cloud-native tools. It’s lightweight enough to be suitable for self-managed deployments on any Kubernetes platform, and community-maintained charts and operators make deployment easier on major cloud providers including AWS EKS, Azure AKS, and Google GKE.

Choosing the Right Service Mesh for Your Microservices

When it comes to choosing between Istio and Linkerd, it’s not about which one is better, but rather which one is better for your specific needs and organizational context. You should consider your team’s expertise, your application’s performance sensitivity, your security and compliance requirements, and your tolerance for operational complexity. If your organization has the resources to manage its complexity, Istio offers unmatched flexibility and feature richness. If your team values simplicity, performance, and ease of operations, Linkerd offers a more streamlined path to the benefits of a service mesh. Many organizations start with Linkerd because it’s easier to adopt and move to Istio only if they encounter specific requirements that require its advanced capabilities. Regardless of which option you choose, implementing a service mesh is a significant step toward more reliable, secure, and observable microservices. Solo.io’s expertise in service mesh technologies can help guide your organization through this important decision-making process and implementation journey.

Common Questions

The questions below are often asked when assessing service mesh solutions for microservices frameworks. These insights can help shed light on important decision factors and implementation considerations based on your unique environment and needs.

Is it possible to run Istio and Linkerd in the same Kubernetes cluster?

Yes, it is technically possible to run Istio and Linkerd in the same Kubernetes cluster, but it is generally not advised as a permanent production setup because of possible conflicts, resource contention, and operational complexity.

To do so, deploy each mesh to separate namespaces with clear boundaries, and make sure their control planes do not overlap. This is useful for comparing the two meshes side by side, for trialing a new mesh without fully committing to it, or for migrating incrementally from one mesh to the other while keeping production stable. Some organizations also use this approach to leverage specific features of each mesh for different workloads.

Keep in mind that operating two meshes continuously creates a complicated operational environment that only a few teams can manage effectively. It is typically preferable to standardize on a single service mesh after thorough evaluation and testing.

Which service mesh is easier for beginners with little Kubernetes knowledge?

Linkerd is by far the better option for teams that are new to service mesh technology or have limited Kubernetes knowledge. Its easy installation process, sensible default settings, and focused set of features make the learning curve much less steep. Beginners can get a functional mesh up and running in minutes and start seeing the benefits right away without having to understand complicated configuration options. Linkerd’s documentation is also written with beginners in mind, offering clear explanations and easy-to-understand examples. Teams can gradually explore more advanced features as they become more comfortable with the basic concepts of service mesh, making Linkerd an excellent “first service mesh” that can grow with your knowledge.

What is the additional latency each service mesh adds to service calls?

Linkerd consistently shows less latency overhead than Istio in benchmark tests. In standard deployments, Linkerd adds about 0.9-1.2ms per request, while Istio adds about 3-10ms depending on configuration. These numbers can change based on workload characteristics, hardware, and specific mesh configurations, but the relative performance difference remains consistent across testing scenarios.

Latency impact is more noticeable in architectures with deep call chains where requests traverse multiple services. In a system where a single user request might touch 5-10 services, Istio’s higher per-hop latency can accumulate to noticeable end-user performance degradation. For performance-critical applications, Linkerd’s lower latency overhead can be a decisive advantage. However, Istio does offer various performance tuning options for organizations with the expertise to optimize their deployment.

Is a service mesh necessary for my small microservices application?

Small applications with only a handful of services may not warrant the operational overhead of a full service mesh. If your application has fewer than 5-10 services and straightforward communication patterns, the advantages of a service mesh may not compensate for the additional complexity. Instead, consider using application libraries or simpler solutions like Kubernetes native features to meet your immediate needs for service discovery and basic load balancing.

Nonetheless, if you foresee expansion or have particular security needs (such as mTLS between services) or comprehensive observability, deploying a lightweight service mesh like Linkerd early on can set up good practices for future scalability. Many teams discover that starting with a service mesh becomes beneficial when they have 10-15 services or when they start to face difficulties with service-to-service communication, security enforcement, or observability that are hard to address with application-level solutions.

Can service meshes be used in non-Kubernetes environments?

Istio and Linkerd, although mainly designed for Kubernetes, provide different levels of support for non-Kubernetes environments. Istio, through its WorkloadEntry resource, provides more advanced support for VMs and bare metal deployments, allowing services outside Kubernetes to be part of the mesh. This makes Istio a potential option for hybrid deployment models where some services run in Kubernetes and others remain on traditional infrastructure.

Historically, Linkerd has been more focused on Kubernetes, but it has recently improved its support for non-Kubernetes workloads. For organizations with significant investments in non-Kubernetes infrastructure that need to be integrated into a service mesh, Istio currently offers more comprehensive options. However, both projects continue to evolve their support for diverse infrastructure environments.

If your environment is mainly not Kubernetes-based, you may also want to consider other service mesh implementations that were specifically designed for those environments, like Consul Connect. This was built to function across multiple types of infrastructure, including VMs and bare metal.

In the end, service meshes are most valuable and integrate most seamlessly in Kubernetes environments, where they can use the platform’s built-in capabilities for deployment, scaling, and lifecycle management.