Key Takeaways

Natural Language Processing (NLP) models are essential for modern AI applications, from chatbots to sentiment analysis.

MLOps (Machine Learning Operations) enhances traditional ML by automating workflows, improving scalability, and ensuring continuous integration and deployment.

Key MLOps tools for NLP projects include Pachyderm, MLflow, TensorFlow Extended (TFX), and Kubeflow.

Building an efficient MLOps pipeline involves steps like data preprocessing, model training, validation, and monitoring.

Real-world examples and case studies demonstrate the successful deployment of NLP models using MLOps frameworks.

The Importance of NLP in Modern AI

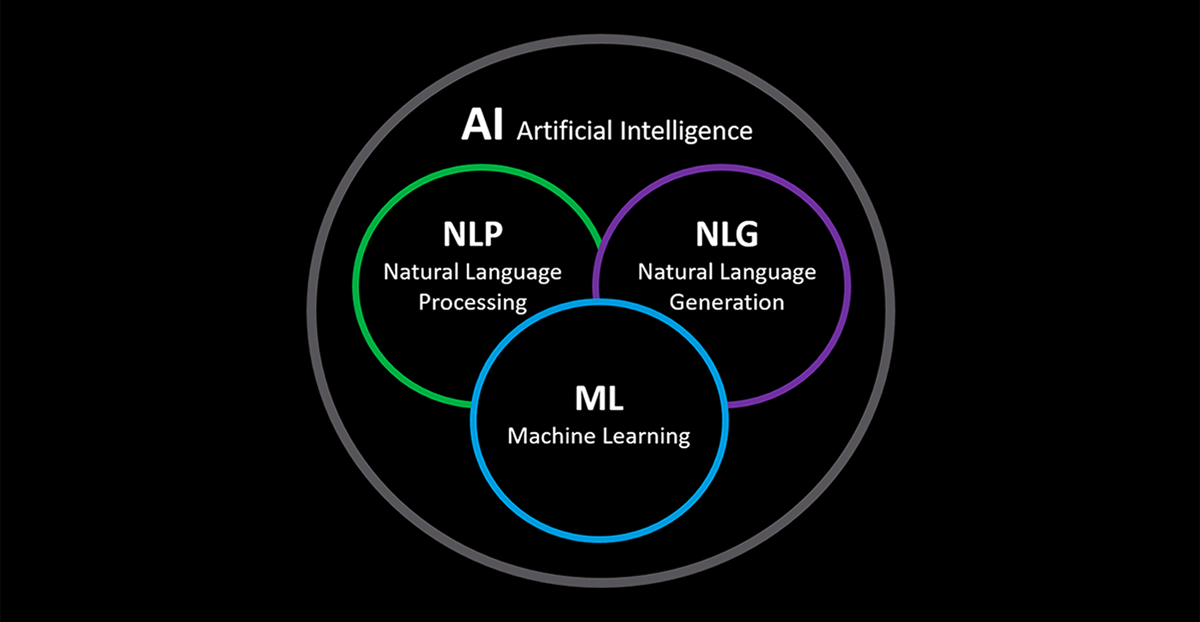

Natural Language Processing (NLP) has become a cornerstone of modern AI, enabling machines to understand and respond to human language. From chatbots that provide customer service to algorithms that analyze sentiment in social media posts, NLP models are integral to many applications.

However, a model delivers value only once it is deployed and running reliably. This is where Machine Learning Operations (MLOps) comes into play. By automating and streamlining the deployment process, MLOps helps keep NLP models scalable, reliable, and continuously improving.

“AI, Machine Learning, NLP, and NLG …” from medium.com and used with no modifications.

Understanding the Transition from Traditional ML to MLOps

Manual Approach vs Automated Pipelines

In traditional machine learning (ML), deploying a model often involves manual steps. Data scientists might manually preprocess data, train the model, and deploy it. This approach can be time-consuming and error-prone.

On the other hand, MLOps automates these steps through pipelines. Automated pipelines ensure that every step, from data preprocessing to model deployment, is reproducible and efficient. This automation reduces the risk of human error and speeds up the deployment process.

Benefits of MLOps Over Traditional ML

MLOps offers several advantages over traditional ML approaches:

Scalability: Automated pipelines can handle large datasets and complex models more efficiently.

Reproducibility: Each pipeline run records the code, data, and parameters it used, so results can be reproduced consistently.

Continuous Integration and Deployment (CI/CD): MLOps frameworks support continuous integration and deployment, allowing models to be updated and improved continuously.

Monitoring and Maintenance: MLOps tools provide monitoring capabilities, ensuring that models perform as expected and can be updated when necessary.

Building an Efficient MLOps Pipeline for NLP

Data Preprocessing and Feature Engineering

The first step in building an MLOps pipeline for NLP is data preprocessing. This involves cleaning and preparing the data for model training. Common preprocessing steps include:

Removing stop words

Tokenizing text

Normalizing text (e.g., converting to lowercase)

Handling missing values
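The four preprocessing steps above fit in a few lines of standard-library Python. This is a minimal illustration, not a production tokenizer. The tiny `STOP_WORDS` set and the token regular expression are assumptions chosen for readability. Real pipelines usually take both from a library such as NLTK or spaCy.

```python
import re
from typing import List, Optional

# Small illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"a", "an", "and", "the", "is", "are", "to", "of", "in", "it"}

# Tokens are runs of lowercase letters, digits, and apostrophes.
TOKEN_RE = re.compile(r"[a-z0-9']+")


def preprocess(text: Optional[str]) -> List[str]:
    """Normalize, tokenize, and drop stop words from one document."""
    # Handle missing values: treat None or blank strings as empty documents.
    if text is None or not text.strip():
        return []
    # Normalize: lowercase so "Great" and "great" map to the same token.
    normalized = text.lower()
    # Tokenize: extract word-like runs, discarding punctuation.
    tokens = TOKEN_RE.findall(normalized)
    # Remove stop words.
    return [tok for tok in tokens if tok not in STOP_WORDS]


print(preprocess("The delivery was FAST and the support is great!"))
# prints ['delivery', 'was', 'fast', 'support', 'great']
```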

Feature engineering is another critical step. This involves creating features from the raw data that the model can use to make predictions. In NLP, this might include:

Creating word embeddings

Extracting n-grams

Using part-of-speech tagging
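Of the three feature types above, n-grams are the easiest to show without extra dependencies. The sketch below turns a token list into contiguous n-grams and counts them as sparse features. The function names are illustrative and do not come from any library. Bigrams such as "not good" capture negation that single words miss.

```python
from collections import Counter
from typing import List, Tuple


def ngrams(tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    """Return the contiguous n-grams of a token list (empty if n > len(tokens))."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def ngram_features(tokens: List[str], max_n: int = 2) -> Counter:
    """Count every 1..max_n-gram, joined with spaces, as a sparse feature."""
    counts: Counter = Counter()
    for n in range(1, max_n + 1):
        counts.update(" ".join(gram) for gram in ngrams(tokens, n))
    return counts


tokens = ["not", "good", "not", "bad"]
print(ngrams(tokens, 2))
# prints [('not', 'good'), ('good', 'not'), ('not', 'bad')]
print(ngram_features(tokens)["not"])  # prints 2
```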

Model Training and Validation

Once the data is preprocessed and features are engineered, the next step is model training. This involves selecting an appropriate model architecture and training it on the prepared data. For further insights on MLOps, you can read about MLOps on Google Cloud.
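The article does not prescribe a particular model. As a dependency-free illustration of the training step, the sketch below fits a multinomial naive Bayes classifier with Laplace smoothing on tokenized documents. The class name and toy data are hypothetical. In practice you would more likely use scikit-learn or a pretrained transformer, but the fit/predict shape is the same.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Optional


class NaiveBayesTextClassifier:
    """Multinomial naive Bayes over token counts, with add-alpha smoothing."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha  # smoothing strength; 1.0 is classic Laplace smoothing

    def fit(self, docs: List[List[str]], labels: List[str]) -> "NaiveBayesTextClassifier":
        # Count documents per class (priors) and tokens per class (likelihoods).
        self.class_counts = Counter(labels)
        self.token_counts: Dict[str, Counter] = defaultdict(Counter)
        for tokens, label in zip(docs, labels):
            self.token_counts[label].update(tokens)
        self.vocab = {tok for counts in self.token_counts.values() for tok in counts}
        self.total_tokens = {c: sum(cnt.values()) for c, cnt in self.token_counts.items()}
        return self

    def predict(self, tokens: List[str]) -> Optional[str]:
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            # Work in log space: log P(class) + sum of log P(token | class).
            score = math.log(doc_count / n_docs)
            denom = self.total_tokens[label] + self.alpha * len(self.vocab)
            for tok in tokens:
                if tok in self.vocab:  # skip tokens never seen during training
                    score += math.log((self.token_counts[label][tok] + self.alpha) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Toy, already-tokenized training data (hypothetical).
train_docs = [["great", "fast"], ["love", "great"], ["slow", "broken"], ["bad", "slow"]]
train_labels = ["pos", "pos", "neg", "neg"]
model = NaiveBayesTextClassifier().fit(train_docs, train_labels)
print(model.predict(["great", "service"]))  # prints pos
```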

Validation is equally important. This step involves evaluating the model’s performance on a separate validation dataset to ensure it generalizes well to new data. Common metrics for NLP model validation include accuracy, precision, recall, and F1 score.
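The four metrics follow directly from counts of true positives (TP), false positives (FP), and false negatives (FN). Precision is TP / (TP + FP), recall is TP / (TP + FN), and F1 is the harmonic mean of the two. The minimal sketch below computes them for a single positive class. The function name and labels are illustrative.

```python
from typing import Dict, List


def binary_metrics(y_true: List[str], y_pred: List[str], positive: str) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1, treating `positive` as the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    # Guard every division so empty or one-sided inputs return 0.0.
    accuracy = correct / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = ["pos", "pos", "neg", "neg", "pos"]
y_pred = ["pos", "neg", "neg", "pos", "pos"]
print(binary_metrics(y_true, y_pred, positive="pos"))
# accuracy 0.6; precision, recall, and F1 are each about 0.667
```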

MLOps Tools for NLP Projects

Pachyderm: Automated Versioning and Data-Driven Pipelines

Pachyderm is a powerful MLOps tool that automates versioning and creates data-driven pipelines. It ensures that every step in the pipeline is versioned, making it easy to track changes and reproduce results.
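In Pachyderm, a pipeline is declared as a JSON (or YAML) specification and registered with `pachctl create pipeline -f <file>`. The sketch below builds such a spec in Python. It assumes the `pipeline`, `transform`, and `input.pfs` fields of Pachyderm's pipeline specification, so check the field details against the docs for your Pachyderm version. The repo name, image, and script path are hypothetical. Each commit to the `reviews` input repo triggers the pipeline, and the results are committed to a versioned output repo named after the pipeline.

```python
import json

# Hypothetical names throughout: the "reviews" input repo, the pipeline name,
# the container image, and the script path are placeholders.
pipeline_spec = {
    "pipeline": {"name": "clean-reviews"},
    "description": "Normalize and tokenize raw review text.",
    "transform": {
        "image": "registry.example.com/nlp-preprocess:1.0",
        # Inside the container, input files appear under /pfs/reviews and
        # results must be written to /pfs/out.
        "cmd": ["python3", "/code/preprocess.py"],
    },
    # The glob "/*" makes each top-level file in the repo a separate datum,
    # so files can be processed in parallel.
    "input": {"pfs": {"repo": "reviews", "glob": "/*"}},
}

spec_json = json.dumps(pipeline_spec, indent=2)
print(spec_json)
# Save as clean-reviews.json, then run: pachctl create pipeline -f clean-reviews.json
```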

MLflow: Experiment Tracking and Lifecycle Management

MLflow is another essential tool for MLOps. It provides experiment tracking, allowing data scientists to log and compare different model versions. MLflow also supports lifecycle management, making it easier to deploy and monitor models in production.
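The minimal sketch below shows experiment tracking with MLflow's fluent API (`mlflow.start_run`, `mlflow.log_params`, `mlflow.log_metrics`). The run name, parameters, and metric value are placeholders. The optional import exists only so the snippet also runs where MLflow is not installed. With MLflow present, each run lands in the configured tracking store, where runs can be compared side by side in the MLflow UI.

```python
from typing import Dict, Union

try:
    import mlflow  # optional dependency: pip install mlflow
except ImportError:  # keeps the sketch runnable without MLflow installed
    mlflow = None

Param = Union[str, int, float]


def log_experiment(run_name: str, params: Dict[str, Param],
                   metrics: Dict[str, float]) -> Dict[str, object]:
    """Log one training run's parameters and metrics; return what was logged."""
    record = {"run_name": run_name, "params": dict(params), "metrics": dict(metrics)}
    if mlflow is not None:
        # Goes to the store set by MLFLOW_TRACKING_URI or mlflow.set_tracking_uri();
        # otherwise MLflow's local default store is used.
        with mlflow.start_run(run_name=run_name):
            mlflow.log_params(params)
            mlflow.log_metrics(metrics)
    return record


# Placeholder values for illustration only.
record = log_experiment(
    run_name="naive-bayes-bigrams",
    params={"model": "naive_bayes", "max_n": 2, "alpha": 1.0},
    metrics={"val_f1": 0.5},
)
print(record)
```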

TensorFlow Extended (TFX): Productionize ML Pipelines

TensorFlow Extended (TFX) is a comprehensive end-to-end platform designed to deploy production ML pipelines. TFX provides a robust framework that integrates well with TensorFlow, making it easier to manage the entire machine learning lifecycle, from data ingestion to serving models in production.

TFX components include:

ExampleGen: Ingests and splits data into training and evaluation datasets.

Transform: Applies data transformations and feature engineering.

Trainer: Trains the model using TensorFlow.

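Evaluator: Evaluates the model’s performance and “blesses” models that meet configured quality thresholds.

InfraValidator: Checks that the model can be loaded and served in the target serving infrastructure before it is pushed.

ModelValidator: Checked that a model met performance criteria before deployment; this component is deprecated in current TFX releases, and its job is now done by Evaluator.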

Pusher: Deploys the validated model to a serving infrastructure.

TFX is particularly well suited to NLP projects with large datasets. Its Transform component runs on Apache Beam, so preprocessing scales out across workers. The same transformation graph is also applied at training and serving time, which avoids training/serving skew.

Kubeflow: Scalable Machine Learning Pipelines on Kubernetes

Kubeflow is an open-source platform designed to deploy scalable machine learning workflows on Kubernetes. It leverages Kubernetes’ orchestration capabilities to manage ML workloads, making it easier to scale and deploy models in production. Learn more about the architecture of Kubeflow for machine learning.

Key features of Kubeflow include:

Pipeline Orchestration: Define ML pipelines in Python with the Kubeflow Pipelines SDK, then run and track them through a web UI.

Jupyter Notebooks: Integrated support for Jupyter notebooks, enabling interactive development and experimentation.

Hyperparameter Tuning: Automate hyperparameter tuning with Katib to optimize model performance.

Model Serving: Deploy and serve models at scale with KServe, a Kubernetes-native serving tool.

Kubeflow suits NLP projects that require scalable and flexible deployment. Because it runs on Kubernetes, models can be deployed and managed efficiently, even in complex production environments.

Ensuring Continuous Integration and Deployment (CI/CD) in NLP

Importance of Continuous Integration in Model Training

Continuous Integration (CI) is a critical aspect of modern ML workflows. Team members merge code changes into a shared repository frequently, and each change is automatically built and tested, so errors surface soon after they are introduced.

In NLP projects, CI helps maintain the quality and consistency of models by:

Automatically running tests on new code to catch errors early.

Ensuring that data preprocessing and feature engineering steps are reproducible.

Facilitating collaboration among data scientists by providing a shared codebase.
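As an illustration of the tests a CI job might run, the sketch below uses the standard `unittest` module to check that a preprocessing step is deterministic, normalizes case, drops stop words, and tolerates missing text. The `preprocess` function here is a hypothetical, compact stand-in for your project's real one.

```python
import re
import unittest
from typing import List, Optional


def preprocess(text: Optional[str]) -> List[str]:
    """Hypothetical preprocessing step under test."""
    stop_words = {"the", "a", "an", "and", "is"}
    if text is None:
        return []
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return [tok for tok in tokens if tok not in stop_words]


class PreprocessTests(unittest.TestCase):
    def test_is_deterministic(self) -> None:
        # Reproducibility: the same input must always yield the same tokens.
        text = "The Model IS great and fast"
        self.assertEqual(preprocess(text), preprocess(text))

    def test_lowercases_and_drops_stop_words(self) -> None:
        self.assertEqual(preprocess("The Model IS great"), ["model", "great"])

    def test_handles_missing_text(self) -> None:
        self.assertEqual(preprocess(None), [])
```

A CI service would typically run these with `python -m unittest` (or `pytest`) on every push and block the merge if any test fails.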

Streamlining Model Deployment with Continuous Deployment

Continuous Deployment (CD) takes CI a step further by automating the deployment of models into production. This ensures that new models and updates are deployed quickly and reliably.

Key steps in implementing CD for NLP models include:

Automating the deployment pipeline to reduce manual intervention.

Ensuring that models are validated and meet performance criteria before deployment.

Monitoring the deployed models to ensure they perform as expected.
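The validation step can be written as a promotion gate that a CD job runs before deploying. In this sketch the function name, metric keys, and thresholds are hypothetical: F1 must be at least 0.80 and 95th-percentile latency at most 200 ms. A candidate must also match or beat the model currently in production. In TFX, the Evaluator's blessing plays a similar role.

```python
from typing import Dict, Optional


def should_promote(
    candidate: Dict[str, float],
    production: Optional[Dict[str, float]] = None,
    min_f1: float = 0.80,
    max_p95_latency_ms: float = 200.0,
    min_improvement: float = 0.0,
) -> bool:
    """Return True if the candidate model may replace the one in production."""
    # Absolute floors every candidate must clear (hypothetical thresholds).
    if candidate["f1"] < min_f1:
        return False
    if candidate["p95_latency_ms"] > max_p95_latency_ms:
        return False
    # If a model is already serving, the candidate must match or beat its F1
    # by at least min_improvement.
    if production is not None and candidate["f1"] < production["f1"] + min_improvement:
        return False
    return True


print(should_promote({"f1": 0.86, "p95_latency_ms": 120.0}, {"f1": 0.84}))  # prints True
print(should_promote({"f1": 0.86, "p95_latency_ms": 350.0}))  # prints False (too slow)
```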

Automating Monitoring and Logging

Once an NLP model is deployed, it is crucial to monitor its performance and log relevant metrics. Automated monitoring and logging help detect issues early and ensure that models continue to perform well in production.

Essential components of monitoring and logging include:

Performance Metrics: Track key metrics such as accuracy, latency, and throughput.

Error Logging: Log errors and anomalies to identify potential issues.

Model Drift Detection: Monitor for shifts in the input data distribution and for gradual degradation in model performance over time.
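One common drift statistic is the Population Stability Index (PSI), PSI = Σ (a_i − e_i) · ln(a_i / e_i). Here e_i and a_i are the proportions of category i in a baseline window and a current window. The sketch below applies PSI to predicted labels, which needs no ground truth. The same idea works for binned input features such as text length. The 0.25 alert threshold is a widely used rule of thumb, not a universal standard, and the data is made up for illustration.

```python
import math
from collections import Counter
from typing import Iterable


def population_stability_index(baseline: Iterable[str], current: Iterable[str],
                               eps: float = 1e-4) -> float:
    """PSI between two categorical samples, e.g. predicted labels per time window."""
    base, cur = Counter(baseline), Counter(current)
    n_base, n_cur = sum(base.values()), sum(cur.values())
    if n_base == 0 or n_cur == 0:
        raise ValueError("both samples must be non-empty")
    psi = 0.0
    for category in set(base) | set(cur):
        # Floor proportions at eps so a category missing from one sample
        # does not cause log(0) or division by zero.
        expected = max(base[category] / n_base, eps)
        actual = max(cur[category] / n_cur, eps)
        psi += (actual - expected) * math.log(actual / expected)
    return psi


last_month = ["pos"] * 50 + ["neg"] * 50
this_week = ["pos"] * 80 + ["neg"] * 20
psi = population_stability_index(last_month, this_week)
print(round(psi, 3))  # prints 0.416
if psi > 0.25:  # rule of thumb: above 0.25 suggests significant drift
    print("drift alert: investigate inputs and consider retraining")
```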

Optimizing NLP Models for Production

Reducing Latency and Improving Inference Speed

Latency and inference speed are critical factors in deploying NLP models in production. High latency can lead to poor user experience, especially in real-time applications like chatbots and virtual assistants.

To reduce latency and improve inference speed:

Reduce model size and complexity, for example through knowledge distillation, quantization, or pruning.

Use efficient data structures and algorithms for preprocessing and inference.

Leverage hardware acceleration, such as GPUs and TPUs, to speed up computations.
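Latency work starts with measurement. The sketch below times each call with `time.perf_counter` and reports median and 95th-percentile latency. It also shows one cheap data-structure win: an in-memory cache (`functools.lru_cache`) that lets repeated inputs skip inference. `model_predict` is a hypothetical stand-in that sleeps to simulate work. Caching only helps when identical inputs recur and the model's output for a given input does not change.

```python
import statistics
import time
from functools import lru_cache
from typing import Callable, Dict, List


def model_predict(text: str) -> str:
    """Hypothetical stand-in for real inference; sleeps ~5 ms to simulate cost."""
    time.sleep(0.005)
    return "pos" if "good" in text else "neg"


@lru_cache(maxsize=10_000)
def cached_predict(text: str) -> str:
    # Hash-based cache: repeated inputs (e.g. common chatbot questions)
    # skip inference entirely after the first call.
    return model_predict(text)


def latency_report(fn: Callable[[str], str], inputs: List[str]) -> Dict[str, float]:
    """Time each call and return p50 and p95 latency in milliseconds."""
    samples = []
    for text in inputs:
        start = time.perf_counter()
        fn(text)
        samples.append((time.perf_counter() - start) * 1000.0)
    cut_points = statistics.quantiles(samples, n=20)  # 19 cut points at 5% steps
    return {"p50_ms": statistics.median(samples), "p95_ms": cut_points[-1]}


requests = ["is this good?", "where is my order?"] * 20
print(latency_report(model_predict, requests))
print(latency_report(cached_predict, requests))
```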

Handling Real-Time Data in Production

Real-time data processing is essential for applications that require immediate responses, such as customer service chatbots and real-time sentiment analysis.

To handle real-time data in production:

Ingest events with a streaming platform such as Apache Kafka, and process them with a stream-processing engine such as Apache Flink.

Implement real-time data preprocessing and feature extraction.

Deploy models in a low-latency environment to ensure quick responses.
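A common pattern for real-time inference is micro-batching. Incoming messages are grouped and scored together, and each batch is flushed when it is full or after a short wait, which bounds the extra latency. The sketch below uses a standard-library `queue.Queue` and a producer thread as stand-ins for a real stream. In production, messages would instead be read from, for example, a Kafka topic through a consumer client. `classify` is a hypothetical stand-in for the deployed model.

```python
import queue
import threading
import time
from typing import Iterator, List, Optional


def micro_batches(q: "queue.Queue[Optional[str]]", max_batch: int = 32,
                  max_wait_s: float = 0.05) -> Iterator[List[str]]:
    """Group streamed messages into batches; flush when full or after max_wait_s."""
    while True:
        batch: List[str] = []
        deadline = time.monotonic() + max_wait_s
        while len(batch) < max_batch:
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                break
            try:
                msg = q.get(timeout=remaining)
            except queue.Empty:
                break
            if msg is None:  # sentinel: the stream has ended
                if batch:
                    yield batch
                return
            batch.append(msg)
        if batch:
            yield batch


def classify(text: str) -> str:
    """Hypothetical stand-in for a deployed sentiment model."""
    return "pos" if "great" in text else "neg"


stream: "queue.Queue[Optional[str]]" = queue.Queue()


def produce() -> None:
    # Simulated producer standing in for messages arriving from a stream.
    for i in range(10):
        stream.put(f"message {i}: great service")
    stream.put(None)


producer = threading.Thread(target=produce)
producer.start()
results: List[str] = []
for batch in micro_batches(stream, max_batch=4):
    # A real model would score the whole batch in one call.
    results.extend(classify(m) for m in batch)
producer.join()
print(len(results))  # prints 10
```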

Ensuring Robustness and Scalability

Robustness and scalability are crucial for deploying NLP models in production. Models must handle varying workloads and maintain performance under different conditions.

To ensure robustness and scalability:

Implement robust error handling and fallback mechanisms.

Use scalable infrastructure, such as cloud-based solutions, to handle varying workloads.

Continuously monitor and update models to adapt to changing data and requirements.
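Error handling and fallback can be as simple as wrapping the model call with a timeout and returning a cheap rule-based answer when the model fails or is too slow. In the sketch below, the keyword rules, the 0.2-second timeout, and the simulated failing and slow models are all hypothetical. On timeout, the worker thread keeps running; the caller only stops waiting for it.

```python
import concurrent.futures
import logging
import time
from typing import Callable

logger = logging.getLogger("nlp_service")
# Shared worker pool so a slow model call can be abandoned after a timeout.
_executor = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def keyword_fallback(text: str) -> str:
    """Cheap rule-based answer used when the primary model is unavailable."""
    lowered = text.lower()
    if any(word in lowered for word in ("great", "good", "love")):
        return "pos"
    if any(word in lowered for word in ("bad", "broken", "slow")):
        return "neg"
    return "neutral"


def predict_with_fallback(model: Callable[[str], str], text: str,
                          timeout_s: float = 0.2) -> str:
    """Call the primary model; fall back to rules on error or timeout."""
    future = _executor.submit(model, text)
    try:
        return future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        logger.warning("model timed out after %.2fs; using fallback", timeout_s)
    except Exception:
        logger.exception("model call failed; using fallback")
    return keyword_fallback(text)


def failing_model(text: str) -> str:
    raise RuntimeError("model server unavailable")  # simulated outage


def slow_model(text: str) -> str:
    time.sleep(0.5)  # simulated overload
    return "pos"


print(predict_with_fallback(failing_model, "I love this phone"))  # prints pos (fallback)
print(predict_with_fallback(slow_model, "Arrived broken"))  # prints neg (fallback)
print(predict_with_fallback(lambda t: "pos", "Arrived broken"))  # prints pos (model)
```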

Case Studies on Deploying NLP Models with MLOps

Deploying NLP models with MLOps has shown promising results across various industries. These case studies demonstrate the practical application and benefits of integrating MLOps frameworks into NLP projects.

Success Stories from Leading Companies

One success story comes from a large e-commerce company that implemented Kubeflow to deploy an NLP-based chatbot for customer service. This chatbot was designed to handle customer inquiries, process orders, and provide product recommendations. By using Kubeflow, the company could automate the entire deployment pipeline, ensuring that the chatbot was always up-to-date and capable of handling a high volume of requests. This led to a significant reduction in customer wait times and an overall improvement in customer satisfaction.

“By leveraging Kubeflow, we were able to deploy our chatbot at scale, ensuring that it was always available and capable of handling customer inquiries efficiently. This not only improved our customer service but also freed up our human agents to handle more complex issues.” – Company CTO

Another notable example is a financial services firm that used TensorFlow Extended (TFX) to deploy a sentiment analysis model. This model was designed to analyze social media posts and news articles to gauge market sentiment and provide actionable insights for trading strategies. TFX’s automated versioning and data-driven pipelines ensured that the model was continuously updated with the latest data, providing accurate and timely insights to traders.

“TFX allowed us to maintain a high level of accuracy in our sentiment analysis model by ensuring that it was always trained on the latest data. This gave our traders a competitive edge in the market.” – Head of Data Science at the financial services firm

Key Lessons Learned

These case studies highlight several key lessons for deploying NLP models with MLOps:

Automate the Deployment Pipeline: Automating the deployment pipeline reduces manual intervention and speeds up the deployment process, ensuring that models are always up-to-date.

Implement Continuous Integration and Deployment: CI/CD practices ensure that models are validated and deployed efficiently, maintaining high performance in production.

Monitor and Log Model Performance: Continuous monitoring and logging are essential for detecting issues early and maintaining model performance over time.

Leverage Scalable Infrastructure: Using scalable infrastructure, such as cloud-based solutions, ensures that models can handle varying workloads and maintain performance under different conditions.

Conclusion

Final Thoughts on Leveraging MLOps for NLP

Leveraging MLOps for deploying NLP models offers numerous benefits, including improved scalability, reproducibility, and continuous improvement. By integrating MLOps tools like Pachyderm, MLflow, TFX, and Kubeflow, data scientists can streamline the deployment process and ensure that their models perform well in production. These tools provide the necessary automation, versioning, and monitoring capabilities to manage the entire machine learning lifecycle effectively.

What’s more, the case studies discussed illustrate the real-world impact of deploying NLP models with MLOps: the companies involved reported improvements in model performance, scalability, and overall efficiency.

Future Trends in NLP and MLOps

As the field of NLP continues to evolve, MLOps will play an increasingly important role in deploying and managing models. Future trends in NLP and MLOps include greater automation, improved scalability, and enhanced monitoring capabilities. These advancements should help NLP models remain at the forefront of AI innovation and continue to provide valuable insights and solutions across various industries.

In addition, we can expect to see more integration between NLP and other AI technologies, such as computer vision and reinforcement learning. This will enable the development of more sophisticated and comprehensive AI systems that can tackle complex real-world problems.

Frequently Asked Questions (FAQ)

What is MLOps and why is it important for NLP?

MLOps, or Machine Learning Operations, is a set of practices that automate and streamline the deployment of machine learning models. It is important for NLP because it ensures that models are scalable, reliable, and continuously improving. MLOps provides the necessary infrastructure to manage the entire machine learning lifecycle, from data preprocessing to model deployment and monitoring. For more details, check out this guide on MLOps on Google Cloud.

Which MLOps tools are best for NLP projects?

Several MLOps tools are well-suited for NLP projects, including:

Pachyderm: Provides automated versioning and data-driven pipelines, ensuring reproducibility and scalability.

MLflow: Offers experiment tracking and lifecycle management, making it easier to compare and deploy different model versions.

TensorFlow Extended (TFX): A comprehensive platform for deploying production ML pipelines, integrating well with TensorFlow.

Kubeflow: Designed to deploy scalable ML workflows on Kubernetes, providing robust pipeline orchestration and model serving capabilities.

How can continuous integration and deployment improve NLP models?

Continuous integration and deployment (CI/CD) ensure that models are always up-to-date and perform well in production. CI/CD automates the testing, validation, and deployment of models, reducing manual intervention and speeding up the deployment process.

What are common challenges in deploying NLP models and how to overcome them?

Common challenges in deploying NLP models include handling large datasets, ensuring scalability, and maintaining model performance. These challenges can be overcome by leveraging MLOps tools, automating the deployment pipeline, and continuously monitoring and updating models.