Key Points

- Blue-green deployments switch all traffic between two complete environments at once, enabling fast rollbacks but requiring more resources than canary deployments

- Canary deployments reduce risk by releasing to a small group of users first and expanding gradually, giving early warning of problems

- Your app’s architecture and your business needs should dictate which method is best for your circumstances

- Changes to the database schema pose unique problems for both deployment methods and need careful thought

- Hybrid approaches that combine elements of both methods can provide the right balance of speed and safety for complex applications

The method you use to deploy code to production can mean the difference between a smooth release and a failure your customers can see. Deployment practices have come a long way from the risky “big bang” releases of the past, and blue-green and canary deployments have emerged as two of the most effective methods for minimizing downtime and catching errors before they affect all your users.

Both strategies are designed to minimize deployment risk, but they do so in very different ways. SlickFinch offers automation tools that support both strategies, helping teams pick the one that fits their needs. Understanding the differences between them will help you choose the right approach for your production environment and business requirements.

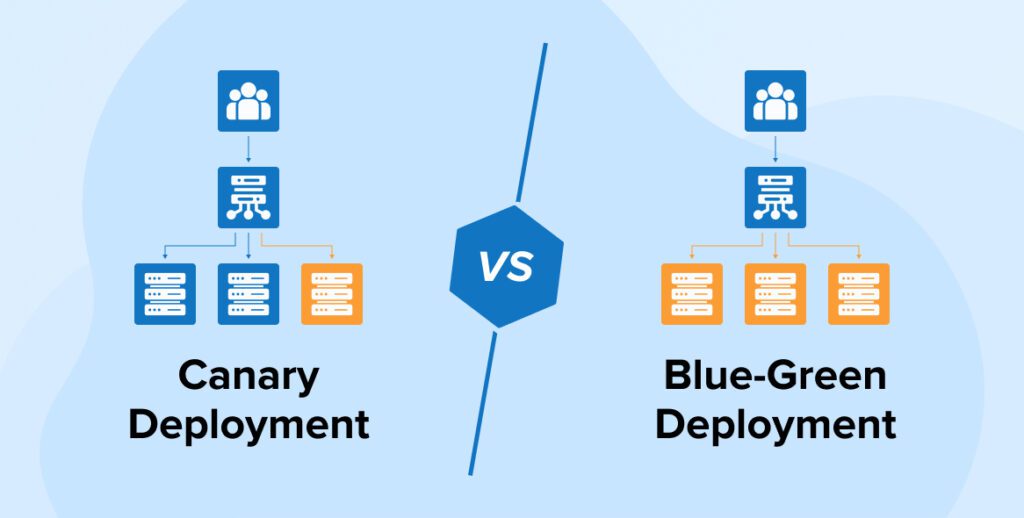

“Blue Green Deployment vs Canary – Spectral” from spectralops.io and used with no modifications.

The Importance of Your Deployment Strategy for Successful Production Releases

Deployment failures can lead to millions in lost revenue for organizations, along with a loss of customer trust and team morale. The old-fashioned method of pushing updates directly to production is now seen as too risky in today’s high-stakes digital environment. Modern deployment strategies are now necessary tools for developers, offering structured methods to minimize risk while maximizing delivery speed.

The deployment strategy you select will directly affect important metrics such as mean time to recovery (MTTR), change failure rate, and deployment frequency. For teams that use DevOps or operate in highly competitive markets, these metrics are more than just operational concerns—they are what set your business apart. The right deployment strategy can speed up innovation while still providing a safety net when things go wrong.

Blue-green and canary deployments are both vast improvements over deploying directly to production, but they each solve unique problems and come with their own set of pros and cons. Grasping these differences is crucial for choosing the best strategy for your specific application architecture, team capabilities, and business needs.

Blue-Green Deployments: Swapping Complete Environments

The blue-green deployment strategy is a method that minimizes deployment risk by operating two identical production environments simultaneously. At any given moment, only one of these environments is live and handles all production traffic. The “blue” environment usually represents the current production version, while the “green” environment is the new version that is being readied for release.

This approach lets you roll back almost instantly: if something goes wrong after the switch to the green environment, you can immediately redirect traffic back to the blue environment. This makes blue-green deployments especially valuable for companies that prioritize fast recovery from deployment problems.

Understanding Blue-Green Deployments

The process for a blue-green deployment is straightforward. Initially, the blue environment continues to handle user traffic while the green environment is set up as a perfect copy of the production infrastructure. The new version of the application is then deployed to this green environment, where it can be tested in a setting identical to production without impacting actual users.

When the green environment has been tested and confirmed to be working as expected, traffic is rerouted from the blue environment to the green one, typically through a router, load balancer, or DNS change. A router or load balancer switch usually takes effect within seconds; a DNS change can take longer, because clients and resolvers may keep using cached records until their TTL expires. Either way, a fast switchover keeps the window for service disruption small. Once it has been confirmed that the green environment is successfully handling production traffic, the blue environment is either kept on standby for a quick rollback or decommissioned to conserve resources.
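The switch described above can be modeled in a few lines. This is a minimal sketch, assuming a hypothetical in-process router with made-up backend addresses; in production the same logic lives in your load balancer, gateway, or DNS configuration. It shows the two properties that matter: all traffic goes to exactly one environment, and rollback is a single pointer swap as long as the previous environment is kept around.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class BlueGreenRouter:
    """Minimal in-memory model of a blue-green switch (hypothetical, for illustration)."""

    upstreams: Dict[str, str]       # environment name -> backend address
    active: str = "blue"            # environment currently receiving all traffic
    previous: Optional[str] = None  # environment to return to on rollback

    def route(self) -> str:
        # Every request goes to the single live environment; there is no splitting.
        return self.upstreams[self.active]

    def switch_to(self, env: str, health_check: Callable[[str], bool]) -> bool:
        # Switching to the environment that is already live is a no-op.
        if env == self.active:
            return True
        # Only cut over if the target exists and passes its health check.
        if env not in self.upstreams or not health_check(self.upstreams[env]):
            return False
        self.previous, self.active = self.active, env
        return True

    def rollback(self) -> bool:
        # Send all traffic back to the environment that was live before the switch.
        if self.previous is None:
            return False
        self.active, self.previous = self.previous, self.active
        return True
```

Keeping `previous` populated is what makes rollback instant; decommissioning the blue environment corresponds to giving that option up.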

The appeal of this method is that it is easy to understand and draws a clear line between versions. There is no complicated traffic splitting or slow rollout to manage: users see either the old version or the new one, which makes the process straightforward to put into practice.

The Advantages of Blue-Green Deployment in Production

Blue-green deployments are the best choice when you want to minimize deployment risk but still need to make complete, all-at-once transitions. They are especially useful for critical systems where partial deployments could cause compatibility issues between components or where you need to quickly revert to a known-good state.

During high-traffic seasons like Black Friday, e-commerce platforms often favor blue-green deployments because they need to be able to undo problematic changes quickly. Enterprise applications with strict uptime requirements and predictable traffic patterns also benefit from the clean cutover approach.

“Blue-green deployments give us the confidence to deploy major changes during business hours. We can test in a production-identical environment and switch back in seconds if needed. It’s been a game-changer for our release velocity.” — Sarah Chen, DevOps Lead at a Fortune 500 retailer

Setting Up Your First Blue-Green Pipeline

Implementing a blue-green deployment pipeline requires careful planning and infrastructure design. Start by ensuring your application can run in parallel instances without conflicts—particularly around shared resources like databases. Your infrastructure should support maintaining two complete production-grade environments, which has cost implications but provides significant safety benefits.

The key technical component is the traffic routing mechanism that directs users to either the blue or the green environment. Depending on your architecture, this could be a load balancer, API gateway, or DNS configuration. Make sure it can switch quickly with minimal disruption, and add monitoring to confirm that traffic is flowing correctly after the switch.

Common Blue-Green Pitfalls to Avoid

Despite its benefits, blue-green deployment has pitfalls. One of the most significant is database schema changes: new application versions often need schema changes, and because both environments usually share the same database during the transition, those changes must work with both the old and the new application version. This requires careful planning and sometimes several deployment steps.

Because you are effectively doubling your production infrastructure, resource costs can be considerable, especially for large-scale applications. In addition, long-running processes and user sessions can be disrupted during the switch, so you may need session persistence strategies or graceful termination (connection draining) for the old environment.
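Graceful termination usually means connection draining: the old environment stops accepting new requests but lets in-flight ones finish before it is shut down. The sketch below is a hypothetical in-process version, assuming request handlers call `try_begin()` and `end()` around each request; real load balancers expose draining as a setting rather than code.

```python
import threading


class ConnectionDrainer:
    """Tracks in-flight requests so an old environment can be retired gracefully."""

    def __init__(self) -> None:
        self._in_flight = 0
        self._accepting = True
        self._cond = threading.Condition()

    def try_begin(self) -> bool:
        # Called when a request arrives; refuse new work once draining has started.
        with self._cond:
            if not self._accepting:
                return False
            self._in_flight += 1
            return True

    def end(self) -> None:
        # Called when a request finishes; wake up anyone waiting for the drain.
        with self._cond:
            self._in_flight -= 1
            self._cond.notify_all()

    def drain(self, timeout: float) -> bool:
        # Stop accepting new requests, then wait for in-flight ones to finish.
        # Returns False if requests were still running when the timeout expired.
        with self._cond:
            self._accepting = False
            return self._cond.wait_for(lambda: self._in_flight == 0, timeout=timeout)
```

The timeout is the design choice to tune: too short and long-running requests are cut off, too long and the cutover stalls.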

Finally, blue-green deployments are all-or-nothing. Unlike canary deployments, there is no intermediate stage in which a small group of users tries the new version first; everyone switches at the same time. As a result, problems that only appear under real production traffic may go unnoticed until they affect all of your users.

Canary Deployments: Dip Your Toes in the Water

Canary deployments are named after the old tradition of miners bringing canaries into mines to check for poisonous gases. In the same way, this deployment strategy lets a small group of users try out a new version before it is released to everyone. This gives teams the chance to collect real-world feedback and performance data while keeping the risk low.

Canary deployments are a great way to minimize risk by slowly rolling out changes. Instead of sending all traffic to the new version, you can gradually increase the amount of traffic that goes there. This way, if there’s a problem, it won’t affect all of your users. This is especially useful for applications that are user-facing, where unnoticed bugs could cause a lot of problems.

How Canary Deployments Work

When you deploy using a canary strategy, the new version is released alongside the existing production version. To begin with, a small percentage of traffic—usually around 5-10%—is directed to the new version while the majority of users continue to use the stable version. This routing can be done randomly or it can be targeted to specific user segments based on factors such as geography, user attributes, or device types.
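One common way to implement percentage-based routing is to hash a stable identifier into a bucket, so the same user always sees the same version. This is a sketch, assuming a hypothetical `salt` per release and string user IDs; it is one technique among several, not how any particular load balancer does it.

```python
import hashlib


def bucket(user_id: str, salt: str) -> int:
    """Map a user ID to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_version(user_id: str, canary_percent: int, salt: str = "release-42") -> str:
    # The same user always lands in the same bucket, so their version is stable
    # across requests; raising canary_percent only moves stable users to the canary.
    return "canary" if bucket(user_id, salt) < canary_percent else "stable"
```

Targeted routing (by geography, device type, or user attributes) can be layered on top by checking those attributes before falling back to the hash.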

As you become more confident in the new version, based on monitoring and observation, you can gradually increase the percentage of traffic. You might follow a schedule of 5%, 20%, 50%, and finally 100%, pausing at each stage to evaluate performance and gather feedback. If there are any problems, you can quickly revert traffic to the stable version, impacting only the small percentage of users currently on the canary.
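The staged schedule can be expressed as a loop that applies each weight, evaluates health, and stops at the first failure. This sketch assumes two hypothetical callbacks, `set_weight` (which would update your router or mesh) and `is_healthy` (which would query your monitoring after a pause); both are placeholders, not a real API.

```python
from typing import Callable, List, Tuple


def run_canary(
    steps: List[int],
    set_weight: Callable[[int], None],
    is_healthy: Callable[[int], bool],
) -> Tuple[bool, List[int]]:
    """Walk through traffic percentages, checking health at each stage.

    Returns (promoted, weights_applied). On the first failed check the canary
    weight is set back to 0, so all traffic returns to the stable version.
    """
    applied: List[int] = []
    for weight in steps:
        set_weight(weight)
        applied.append(weight)
        # A real pipeline would pause here long enough to collect meaningful metrics.
        if not is_healthy(weight):
            set_weight(0)
            applied.append(0)
            return False, applied
    return True, applied
```

For example, `run_canary([5, 20, 50, 100], router_update, check_metrics)` follows the 5/20/50/100 schedule and only promotes if every stage passes.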

The essential technical prerequisites are a traffic routing mechanism that can split traffic by percentage, thorough monitoring to identify problems promptly, and automated rollback. Modern service meshes and feature flag systems make these much easier to implement than they used to be.

When to Use Canary Deployments

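Canary deployments are best suited to high-traffic services where it is important to gauge the effects of a change before the full release. Traffic volume matters because even a 5% slice must generate enough requests for problems to show up in the metrics; on a low-traffic service, a small canary may see too few requests to reveal anything. Canaries are especially useful for customer-facing applications where user experience is key and where different user groups might react differently to changes.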

Canary deployments are frequently used by SaaS platforms to confirm changes across a range of customer environments. Mobile backends also find canary testing useful for checking compatibility with different client versions in the wild. Companies with a strong culture of data-driven decision making also appreciate the measurable approach of canary deployments, as they allow the impact of changes to be quantified before full deployment.

Creating a Canary Deployment Pipeline that Works

Setting up a canary pipeline requires more nuanced traffic management than blue-green deployments. You need a routing layer that can send precise, configurable percentages of users to different versions of the application. Load balancers, service meshes such as Istio, or application-level feature flags can all provide this.

Monitoring is even more important in canary deployments, because you have to compare the canary and stable versions side by side to spot differences. This usually means tracking technical metrics such as error rates and latency, as well as business metrics such as purchases or user engagement. Automatically comparing these metrics and alerting on significant deviations is what keeps your canary deployments safe.

The last component is an automated system for promoting and rolling back deployments that can shift traffic distribution based on the metrics being monitored. This sets up a feedback loop where successful canary deployments are automatically given more traffic, while any problematic ones are rapidly rolled back before they can impact more users.

Keeping a Close Eye on Your Canary for Red Flags

For canary deployments to work, you need to monitor them closely. The key is to define comparison metrics that measure your canary against your baseline (stable) version. Track technical indicators such as error rates, latency percentiles, and resource utilization, as well as business metrics that are relevant to the success of your application.

| Metric Category | Examples | Warning Signs |
|---|---|---|
| Technical Health | Error rates, latency, CPU/memory usage | >10% deviation from baseline |
| User Experience | Page load time, UI rendering speed | >5% degradation from baseline |
| Business Impact | Conversion rate, session duration, checkout completion | Any statistically significant negative change |
| Infrastructure Cost | Resource utilization, API call volume | >15% increase from baseline |
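The fixed-percentage rows of the table translate directly into an automated check. This is a sketch, assuming hypothetical metric names and that each metric gets worse as it rises (so only increases are flagged); the business-impact row needs a statistical test rather than a fixed threshold, so it is left out here.

```python
from typing import Dict, List

# Hypothetical metric names, each mapped to the maximum tolerated relative
# increase over the baseline, taken from the "Warning Signs" column.
THRESHOLDS: Dict[str, float] = {
    "error_rate": 0.10,      # technical health: >10% deviation
    "p95_latency_ms": 0.10,  # technical health: >10% deviation
    "page_load_ms": 0.05,    # user experience: >5% degradation
    "cpu_cores": 0.15,       # infrastructure cost: >15% increase
}


def failed_checks(
    canary: Dict[str, float],
    baseline: Dict[str, float],
    thresholds: Dict[str, float] = THRESHOLDS,
) -> List[str]:
    """Return the metrics where the canary exceeds the baseline by more than its limit."""
    failures: List[str] = []
    for name, limit in thresholds.items():
        base, value = baseline[name], canary[name]
        if base == 0:
            regressed = value > 0  # any increase from a zero baseline counts
        else:
            regressed = (value - base) / base > limit
        if regressed:
            failures.append(name)
    return failures
```

Treat these thresholds as starting points; the right limits depend on how noisy each metric is for your service.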

In addition to metrics, logs and trace data that are contextually relevant can provide invaluable insights when diagnosing issues with canaries. Modern platforms for observability that correlate metrics, logs, and traces can dramatically reduce the time needed to identify the root cause of failures in canaries. This is particularly important in microservices architectures where problems may cascade across the boundaries of services.

Tools that perform automated analysis are capable of using statistical methods to spot anomalies that could be overlooked in raw metrics. These tools can pick up on slight performance regressions or errors that occur intermittently, which could be missed by human observers, offering an extra level of protection for your canary deployments.
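As one example of such a statistical method, a one-sided two-proportion z-test asks whether the canary's error rate is significantly higher than the baseline's, rather than merely different by chance. This sketch assumes requests are independent and uses a critical value of about 2.33 (roughly a one-sided 1% significance level); commercial analysis tools may use other tests.

```python
import math


def error_rate_regressed(
    canary_errors: int,
    canary_total: int,
    baseline_errors: int,
    baseline_total: int,
    z_critical: float = 2.33,  # about a one-sided 1% significance level
) -> bool:
    """One-sided two-proportion z-test: is the canary error rate significantly higher?

    Both totals must be greater than zero.
    """
    p_canary = canary_errors / canary_total
    p_base = baseline_errors / baseline_total
    pooled = (canary_errors + baseline_errors) / (canary_total + baseline_total)
    se = math.sqrt(pooled * (1 - pooled) * (1 / canary_total + 1 / baseline_total))
    if se == 0:
        return False  # no errors at all (or only errors): nothing to distinguish
    z = (p_canary - p_base) / se
    return z > z_critical
```

With 30 errors in 1,000 canary requests against 100 in 10,000 baseline requests, the test flags a regression; 11 in 1,000 against the same baseline is within normal variation.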

Head-to-Head: Blue-Green vs Canary in Key Areas

| Characteristic | Blue-Green Deployment | Canary Deployment |
|---|---|---|
| Risk Profile | All-at-once with quick rollback | Gradual exposure with limited impact |
| Resource Requirements | Double infrastructure during transition | Smaller infrastructure overhead, higher complexity |
| Implementation Complexity | Simpler to implement and understand | More complex traffic routing and monitoring |
| Feedback Cycle | Pre-cutover testing without real user traffic | Real-world validation with minimal exposure |
| Rollback Speed | Very fast (seconds to minutes) | Fast for affected percentage (seconds to minutes) |

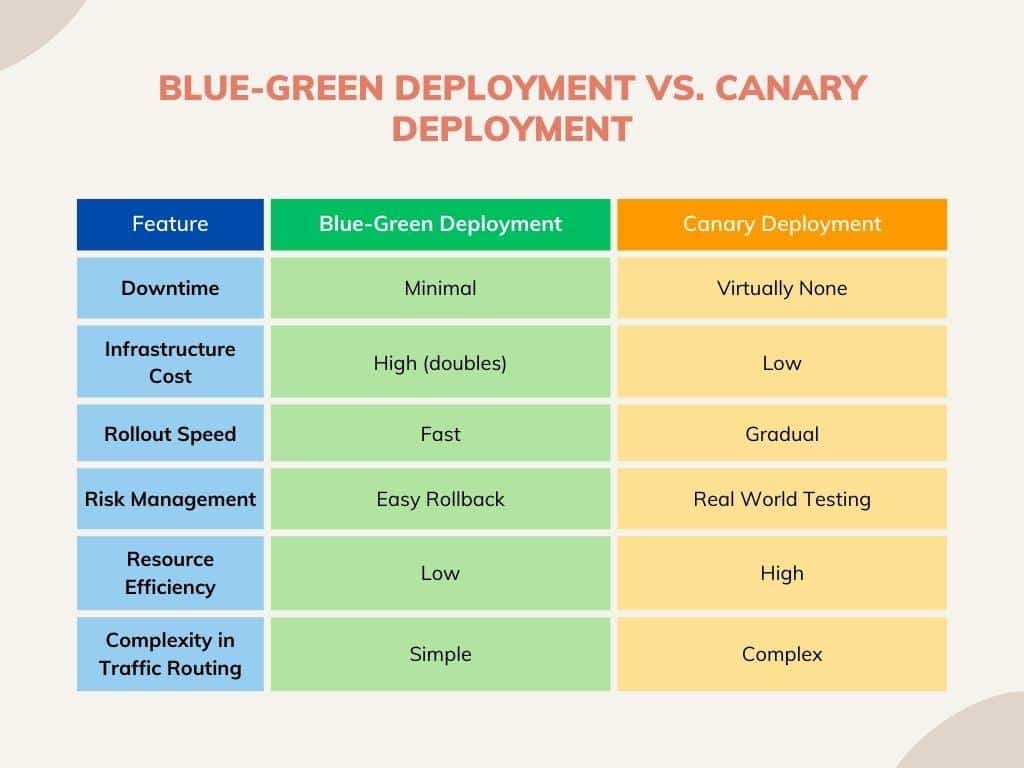

When comparing these deployment strategies, it’s crucial to understand that neither is inherently superior—they simply address different requirements and constraints. Blue-green deployments offer simplicity and speed at the cost of requiring more resources, while canary deployments provide finer-grained risk control at the expense of greater complexity and longer deployment times.

Your choice will ultimately depend on the specific characteristics of your application, the capabilities of your team, and your business priorities. Many organizations actually use both strategies for different applications, or even different types of changes within the same application, choosing the most appropriate approach based on the risk profile of each change.

Let’s take a closer look at the main differences between these strategies to help you make the best decision for your specific situation.

Managing Risk

Blue-green deployments are great for situations where you need to be able to roll back quickly. Switching back to the old environment can be done in seconds or minutes, bringing all users back to the previous stable version. This makes blue-green deployments especially good for mission-critical systems where the most important thing is to minimize downtime during failures.

Canary deployments are excellent for identifying potential problems before they affect all of your users. By exposing only a small number of users to changes, you effectively create an early warning system that can detect issues that only surface in production. This gradual rollout is well suited to consumer-facing applications, where changes to the user experience can have a big impact on your business.

Blue-green deployment focuses on quickly recovering from failures, while canary deployment aims to prevent widespread failures from happening at all. They’re both valid ways to manage risk, but they focus on different priorities.

Resource Demands and Infrastructure Costs

In terms of resources, blue-green deployments usually require twice the production infrastructure during the transition. This can be a considerable expense, particularly for large, resource-intensive applications. However, the cost is often temporary, since the old environment can be decommissioned once the cutover succeeds.

Canary deployments typically demand less extra infrastructure because only a segment of your traffic needs to be handled by the new version. However, they come with higher operational complexity costs when it comes to traffic management, monitoring, and analysis. The infrastructure for routing specific traffic percentages and comparing metrics between versions can add complexity to your architecture.

Companies running on elastic cloud infrastructure often find canary deployments more budget-friendly, because they can scale the new version up gradually while scaling the old one down. This uses resources more efficiently than a blue-green cutover, which needs full capacity for both versions at the same time.
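The capacity difference is simple arithmetic. This sketch assumes a hypothetical service that normally runs `total` replicas and sizes each version in proportion to its traffic share, rounding up; it compares the peak replica count with and without scaling the stable version down as the canary grows. A blue-green cutover, by contrast, needs `2 * total` at its peak.

```python
import math
from typing import List, Tuple


def canary_replicas(total: int, percent: int) -> Tuple[int, int]:
    """Replicas for (stable, canary) when each version is sized to its traffic share."""
    canary = math.ceil(total * percent / 100)
    stable = math.ceil(total * (100 - percent) / 100)
    return stable, canary


def peak_capacity(total: int, steps: List[int], scale_down_stable: bool) -> int:
    """Largest replica count needed at any step of the rollout."""
    peak = 0
    for percent in steps:
        stable, canary = canary_replicas(total, percent)
        if not scale_down_stable:
            stable = total  # keep the full stable fleet running throughout
        peak = max(peak, stable + canary)
    return peak
```

With 20 replicas and stages of 5%, 20%, and 50%, keeping the stable fleet at full size peaks at 30 replicas (150%), while scaling it down in step stays at 20, compared with 40 for a blue-green cutover. Rounding up can add a replica or two for small fleets.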

Tradeoffs between Speed and Safety

The main difference between these two strategies is the tradeoff between speed and safety. Blue-green deployments allow for quicker complete transitions—once testing is complete, all users are transitioned to the new version at the same time. This makes blue-green deployments more suitable for updates that need to be deployed quickly and completely, like security patches.

Canary deployments emphasize safety by gradually introducing new features, giving teams the chance to see how they work in the real world before fully deploying them. This strategy does make the deployment process longer because it adds extra verification steps. However, it also greatly decreases the chance of widespread problems. For new features that might significantly impact users or that involve complicated technical changes, the extra safety provided by canary deployments often makes the longer timeline worthwhile.

Whether you prioritize speed or safety should depend on your business goals and the type of change you are deploying. Critical bug fixes may call for the speed of blue-green, while significant feature releases may be better suited to the more careful canary approach.

Difficulties with Database Compatibility

Both deployment strategies face challenges with database schema changes, but in different ways. In blue-green deployments, database compatibility is especially important because both environments usually work against the same database during the changeover. This typically means schema changes must be backward-compatible or rolled out in several steps, or that separate databases must be maintained along with a mechanism to keep them synchronized.

Canary deployments can make database migrations more manageable by allowing incremental schema changes that track the gradual shift in traffic. However, because the old and new versions run side by side against the same data for longer, they still require careful planning to keep the database consistent between versions, particularly in distributed systems where services at different versions may access the same data.

The database is often the most complex part of implementing either strategy. Many organizations have developed specialized patterns and tools for managing schema migrations in a way that supports their chosen deployment approach.

What Users See During Deployment

With blue-green deployments, users typically get a more consistent experience: everyone sees either the old version or the new one, with a clean switch between the two. This helps with changes that significantly alter user interfaces or workflows, because it avoids confusing situations in which users see different experiences on different visits.

Canary deployments can lead to a scenario where various users have distinct experiences during the deployment period. This can be used to your advantage. For instance, you might direct initial canary traffic to internal users or beta testers. However, if the differences between versions are substantial enough, it can also create support difficulties. In this case, support teams would need to figure out which version a user is viewing when resolving problems.

When thinking about this aspect, consider the nature of your changes and who your users are. B2B applications with trained users may handle variations in experience better than consumer applications where users expect the same behavior.

Practical Framework for Real-World Implementation Decisions

Deciding between blue-green and canary deployments doesn’t have to be a binary choice. Many businesses use both strategies, choosing the right one based on the nature of the change and the level of risk involved. A practical decision-making framework can assist you in making consistent, logical decisions about which strategy to use in various situations.

When deciding on a deployment strategy, your team should take into account the size and importance of the changes, how critical the system being affected is, the potential impact on users, and your team’s familiarity with each strategy. By consistently considering these factors for every release, you can choose a deployment strategy that strikes the right balance between risk, speed, and resource limitations.

5 Things to Consider Before You Choose Your Strategy

- What is the risk level of this change? Adding features, fixing bugs, patching security, and making infrastructure changes all come with different risk levels. These risk levels might suggest different ways to deploy.

- How easy is it to detect problems before production? Changes that are hard to validate completely before production might be better off with the gradual approach of canary.

- What are our rollback needs? If you need to roll back quickly, blue-green has the advantage of rapid, complete reversions.

- How much extra infrastructure can we afford? If resources are limited, canary deployments might be more cost-effective than maintaining full blue-green environments.

- Are there changes to the database? The complexity of database migrations might affect which strategy is more practical for a specific release.

These considerations help you make the decision based on your specific context instead of abstract principles. The aim is to match the deployment strategy to the actual needs and constraints of each situation, instead of rigidly applying the same approach to every change.

Hybrid Approaches: Combining the Best of Both Worlds

More and more, companies are adopting hybrid approaches that take advantage of the strengths of both blue-green and canary deployments. The goal of these hybrid strategies is to enjoy the advantages of both methods while minimizing their individual shortcomings.

One common hybrid pattern is the “blue-green canary” method, which treats the green environment as a canary. A small share of traffic is first directed to the new green environment, and the full blue-green switch happens only after its performance has been validated. This combines the early warning of canary deployments with the clean cutover of blue-green deployments.

There’s also a hybrid method that uses feature flags in combination with either deployment strategy. This gives teams the ability to deploy code changes independently of feature activation, offering another level of control over the deployment process. New code can be deployed using blue-green or canary methods, but it stays inactive until feature flags are progressively enabled for growing user segments.
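Decoupling deployment from activation comes down to a runtime check in the code path. This sketch assumes a hypothetical flag named `new-checkout`, hypothetical segment labels such as `internal`, and hash-based percentages; platforms like LaunchDarkly or Unleash provide equivalent targeting through their own SDKs, whose APIs differ from this.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Set


@dataclass
class FeatureFlag:
    """Hypothetical flag: the code ships everywhere but only runs for enabled users."""

    name: str
    enabled_segments: Set[str] = field(default_factory=set)  # e.g. {"internal"}
    percent: int = 0  # share of all other users, 0-100

    def is_enabled(self, user_id: str, segment: str) -> bool:
        # Explicitly targeted segments (internal staff, beta testers) always get it.
        if segment in self.enabled_segments:
            return True
        # Everyone else is bucketed deterministically by flag name and user ID.
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode()).digest()
        return int.from_bytes(digest[:8], "big") % 100 < self.percent
```

A typical progression enables the `internal` segment first, then beta testers, then raises `percent` step by step, all without redeploying.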

Tools That Support Both Methods

Modern deployment tools support both blue-green and canary methods, often with features that address common issues. In Kubernetes environments, controllers such as Argo Rollouts and Flagger, as well as continuous delivery platforms such as Spinnaker, add support for both strategies. These tools can automate the traffic shifting, health checking, and promotion/rollback decisions that both methods depend on.

Service meshes such as Istio, Linkerd, and AWS App Mesh offer advanced traffic management that is especially useful for canary deployments. They can split traffic by percentage or route requests based on attributes such as headers, while collecting detailed metrics that help assess canary performance.

Deployment strategies can be enhanced by feature management platforms like LaunchDarkly, Split, and Unleash, which separate code deployment from feature activation. This gives teams the flexibility to use either blue-green or canary deployment methods, while also independently managing feature exposure through targeted rollouts or percentage-based exposure.

Implementing Your Deployment Strategy

Understanding the concepts behind the blue-green and canary deployment strategies is just the first step. To truly make them work, you need to pay attention to monitoring, automation, and integration with your existing development practices. The difference between understanding these strategies and actually succeeding with them often comes down to how well you embed them into your daily operations.

When you first adopt these strategies, start with smaller, lower-risk services so you can refine your approach before moving on to more important components. Document your process, including how you make decisions, how you implement each strategy, and what you learn from each deployment. This documentation will be very helpful as you roll these strategies out to larger teams and more complex systems.

- Start with infrastructure automation – Both strategies require reliable, repeatable environment provisioning

- Invest in monitoring before complex deployments – You can’t safely deploy what you can’t observe

- Practice rollbacks regularly – Teams should be comfortable with failure recovery

- Begin with non-critical services – Build confidence before applying to mission-critical components

Remember that deployment strategies are means to an end—the goal is delivering value to users safely and efficiently. The best strategy is the one that enables your team to confidently deliver changes at a pace that matches your business needs, while maintaining the stability and reliability your users expect.

Whichever method you choose, success depends on detailed planning, careful monitoring during deployment, and clear criteria for success and failure. The more you automate, the fewer mistakes you will make and the more predictable and repeatable your deployments become.

Key Performance Indicators to Watch for Each Approach

Both deployment strategies require careful monitoring, but the specific indicators to watch for are slightly different. For blue-green deployments, the focus should be on comparing system health before and after the switch. This includes looking at error rates, response times, and throughput to ensure the new environment is performing as expected. For canary deployments, it’s important to continuously compare the stable and canary versions. Pay close attention to any changes in error rates, performance metrics, or business KPIs that could signal issues with the new version.

How to Automatically Roll Back When Issues Arise

Automated rollback is the safety net that makes modern deployment strategies practical. For blue-green deployments, it should quickly reroute traffic back to the blue environment when issues are detected. For canary deployments, it should automatically reduce or stop traffic to the canary version when metrics show problems, limiting user impact while keeping the stable version available.
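A rollback trigger has to decide when to fire. A common safeguard against flapping is to require several consecutive threshold breaches rather than reacting to one noisy sample. This sketch assumes error-rate samples arrive at a fixed interval and uses a hypothetical default of three consecutive breaches; the action itself (rerouting to blue, or setting the canary weight to zero) is left to the caller.

```python
from typing import Iterable, Optional


def first_rollback_point(
    error_rates: Iterable[float], threshold: float, consecutive: int = 3
) -> Optional[int]:
    """Return the index of the sample at which a rollback should fire, or None.

    Requiring several consecutive breaches avoids rolling back on a single spike.
    """
    streak = 0
    for i, rate in enumerate(error_rates):
        # Extend the streak on a breach, reset it on any healthy sample.
        streak = streak + 1 if rate > threshold else 0
        if streak >= consecutive:
            return i
    return None
```

The tradeoff is detection delay: each extra required breach adds one sampling interval before the rollback starts.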

Integrating Either Approach into Your CI/CD Pipeline

To get the full benefit of either approach, integrate it fully into your CI/CD pipeline. The pipeline should cover automated testing before deployment, provisioning of the new environment, controlled traffic shifting according to your chosen approach, automated monitoring throughout the deployment, and predefined criteria for promotion or rollback. Automating these steps turns deployment from a risky manual process into a reliable capability that supports regular releases.
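The stages listed above can be chained so that any failure stops the pipeline and triggers a rollback. This is a sketch with hypothetical stage names and callables standing in for real CI/CD jobs; it assumes the rollback action is safe to call even if no traffic has moved yet.

```python
from typing import Callable, List, Tuple

# (stage name, action returning True on success)
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], rollback: Callable[[], None]) -> List[str]:
    """Run stages in order; on the first failure, roll back and stop."""
    log: List[str] = []
    for name, action in stages:
        if action():
            log.append(f"{name}: ok")
        else:
            log.append(f"{name}: failed")
            rollback()  # must be a no-op if traffic was never shifted
            log.append("rollback")
            break
    return log
```

In a real pipeline each stage would typically be a separate job, with the promotion and rollback criteria defined before the deployment starts.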

Commonly Asked Questions

Teams that are beginning to use these deployment strategies often have the same questions about how to implement them, what the best practices are, and how to overcome common obstacles. Here are some answers to the most commonly asked questions about blue-green and canary deployments.

Is it possible for small teams to effectively implement blue-green or canary deployments?

Yes. Small teams can implement these strategies effectively, often with less coordination overhead than larger organizations. Begin with simplified versions: for blue-green, you might start with manual traffic switching and basic health checks before adding automation. For canary deployments, start with simple percentage-based routing and a few key metrics before moving to more sophisticated analysis. Avoid relying on manual configuration for too long, since it can hold a small team back.

Cloud platforms have made these techniques much more accessible. Services such as AWS Elastic Beanstalk, Google Cloud Run, and Azure App Service provide built-in support for deployment strategies that small teams can use without having to maintain complex infrastructure. Managed Kubernetes services also make container-based implementations easier to use.

How do stateful applications interact with these deployment strategies?

Stateful applications introduce unique obstacles for both deployment strategies. The main challenge is maintaining state consistency between versions. For blue-green deployments, possible solutions include shared database access with schema compatibility between versions, database replication with synchronization, or sticky sessions that keep users on their original version until their session completes.

When deploying stateful applications using canary deployment, it’s important to make sure that users stay on either the stable or canary version for the duration of their session. This usually requires session affinity at the load balancer level. You may also need to have systems in place to deal with situations where changes in state in one version need to be mirrored in the other.
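Session affinity means a session keeps the version it was first assigned, even if the canary weight changes mid-session. This sketch assumes a hypothetical in-memory dictionary standing in for the cookie or load balancer affinity table that would hold the pin in practice.

```python
import random
from typing import Dict, Optional


class SessionAffinityRouter:
    """Pins each session to the version it was first assigned to (hypothetical)."""

    def __init__(self, canary_percent: int, rng: Optional[random.Random] = None) -> None:
        self.canary_percent = canary_percent
        self._rng = rng or random.Random()
        self._pins: Dict[str, str] = {}  # session ID -> "stable" or "canary"

    def route(self, session_id: str) -> str:
        # New sessions are assigned by the current weight; existing sessions keep
        # their version even if canary_percent changes while they are active.
        if session_id not in self._pins:
            roll = self._rng.randrange(100)
            self._pins[session_id] = "canary" if roll < self.canary_percent else "stable"
        return self._pins[session_id]

    def end_session(self, session_id: str) -> None:
        # Once the session completes, the next one is free to be reassigned.
        self._pins.pop(session_id, None)
```

Pins should expire with the session; otherwise a rollback cannot move pinned users off a failing canary.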

How much does blue-green deployment typically cost compared to canary deployment?

- Infrastructure expenses – During deployment, blue-green usually needs 200% capacity, while canary may need 110-150% depending on your traffic allocation

- Operational complexity – Canary deployments usually require more advanced monitoring and analysis tools

- Development overhead – Both strategies may necessitate changes to the application to ensure version compatibility

- Training and expertise – The costs of learning and specialized knowledge are often overlooked

The actual cost comparison is heavily dependent on your specific infrastructure, application architecture, and team capabilities. Organizations with flexible cloud infrastructure and robust automation practices often find that the additional costs of either strategy are quickly offset by reduced downtime, quicker issue detection, and increased release confidence.

When calculating ROI, look beyond direct infrastructure costs and include the business value of safer deployments. Preventing even one serious outage can pay for months or years of investment in better deployment practices.
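The ROI argument can be expressed as simple arithmetic. This is a sketch with hypothetical inputs and function names; in practice the number of outages prevented is an estimate, so treat the result as a rough guide rather than a forecast.

```python
def deployment_roi(annual_cost, outages_prevented_per_year, cost_per_outage):
    """ROI as a ratio: (estimated benefit - cost) / cost. Inputs are estimates."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    benefit = outages_prevented_per_year * cost_per_outage
    return (benefit - annual_cost) / annual_cost


def months_paid_for(cost_per_outage, monthly_cost):
    """How many months of deployment investment one avoided outage covers."""
    if monthly_cost <= 0:
        raise ValueError("monthly_cost must be positive")
    return cost_per_outage / monthly_cost
```

For instance, if better deployment tooling costs a hypothetical $2,000 a month, a single avoided outage costing $100,000 covers 50 months of that investment.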

For many organizations, the real cost question isn’t whether to use blue-green or canary strategies, but whether to use either strategy at all, given the cost of outages, rollbacks, and emergency fixes that result from less sophisticated deployment approaches.

Canary deployments typically work out cheaper over the long term for large-scale systems, because they need extra capacity only for the share of traffic being shifted rather than for a full duplicate environment. Weigh that saving against the ongoing cost of the more complex monitoring and analysis that canary deployments require.

Do I need any specific tools to execute these deployment strategies?

Although specialized tools make implementation easier, you can run basic versions of both strategies with common infrastructure components such as load balancers and monitoring systems. As your practice matures, purpose-built progressive delivery tools such as Argo Rollouts, Flagger, or commercial solutions can automate traffic shifting and analysis, adding sophistication while reducing the operational burden on your team.
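As an example of a basic canary built from load-balancer primitives, the sketch below implements smooth weighted round-robin, a scheme similar to how weighted upstreams are balanced in NGINX. It is an illustration in Python, not a configuration for any particular load balancer; the class name and backend names are hypothetical.

```python
class WeightedRoundRobin:
    """Smooth weighted round-robin: picks backends in proportion to their weights."""

    def __init__(self, weights: dict):
        self.set_weights(weights)

    def set_weights(self, weights: dict) -> None:
        """Change the traffic split (e.g. 9:1 -> 3:1) and restart the cycle."""
        if not weights or any(w < 0 for w in weights.values()) or sum(weights.values()) == 0:
            raise ValueError("weights must be non-negative with a positive total")
        self.weights = dict(weights)
        self.current = {name: 0 for name in weights}

    def pick(self) -> str:
        total = sum(self.weights.values())
        # Every backend accumulates its weight on each pick...
        for name, weight in self.weights.items():
            self.current[name] += weight
        # ...the highest accumulated value wins and pays back the total.
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= total
        return chosen
```

With weights of 9 and 1, exactly one request in every ten goes to the canary, and canary requests are spread out rather than bunched together. Raising the canary weight step by step is the manual equivalent of what progressive delivery tools automate.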

How do blue-green and canary deployments manage changes to the database schema?

Changes to the database schema are one of the most difficult parts of either deployment strategy. The basic approach is to make schema changes that remain backward-compatible with the previous version of the application. This usually means a multi-phase rollout, often called the expand-and-contract pattern: first add new schema elements without changing existing ones, then deploy application changes that use the new schema, and finally remove the old elements in a later deployment.
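The expand and backfill steps can be demonstrated with Python's built-in `sqlite3` module. This is a minimal sketch: the `users` table, the `name`/`display_name` columns, and the `v1_`/`v2_` helpers are hypothetical, and the contract phase is described in a comment rather than executed.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Starting schema used by application v1.
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("INSERT INTO users (name) VALUES ('alice')")

# Phase 1 (expand): add a nullable column. v1 never mentions it, so its
# INSERTs and SELECTs keep working while v2 is rolled out.
conn.execute("ALTER TABLE users ADD COLUMN display_name TEXT")
# Backfill existing rows so v2 finds a value for every current user.
conn.execute("UPDATE users SET display_name = name WHERE display_name IS NULL")


def v1_insert(conn, name):
    # Old application version: unaware of display_name.
    conn.execute("INSERT INTO users (name) VALUES (?)", (name,))


def v2_display_name(conn, user_id):
    # Phase 2: new version reads the new column, falling back to the old one
    # for rows that v1 inserted after the backfill ran.
    row = conn.execute(
        "SELECT COALESCE(display_name, name) FROM users WHERE id = ?", (user_id,)
    ).fetchone()
    return row[0] if row else None

# Phase 3 (contract) would stop writing and eventually drop 'name' in a later
# release, only once no v1 instances remain. It is not run here.
```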

More advanced techniques include database views that present different schema versions to different application versions, schema migration tools that support versioning, and dual-write patterns that keep both the old and new schemas up to date during the transition period.
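A dual-write can be sketched the same way. Assuming both schemas live in the same database, the new code writes to the old table and to a hypothetical `customers_v2` table inside one transaction, so either both writes succeed or neither does. The table names and the `mismatched_rows` check are illustrative; if the two schemas live in different databases, a single local transaction no longer provides this guarantee.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, full_name TEXT NOT NULL);
    CREATE TABLE customers_v2 (id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT);
""")


def create_customer(conn, first, last):
    """Dual-write: update the old and new schemas in one transaction."""
    with conn:  # commits on success, rolls back both writes on any error
        cur = conn.execute(
            "INSERT INTO customers (full_name) VALUES (?)", (f"{first} {last}",)
        )
        conn.execute(
            "INSERT INTO customers_v2 (id, first_name, last_name) VALUES (?, ?, ?)",
            (cur.lastrowid, first, last),
        )
        return cur.lastrowid


def mismatched_rows(conn):
    """IDs where the two schemas disagree; should stay empty during the transition."""
    return conn.execute("""
        SELECT c.id FROM customers c
        LEFT JOIN customers_v2 v ON v.id = c.id
        WHERE v.id IS NULL OR c.full_name != (v.first_name || ' ' || v.last_name)
    """).fetchall()
```

A periodic check like `mismatched_rows` helps catch writers that still use only the old schema before the old table is retired.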

In practice, many organizations create standard playbooks for different types of schema change, classifying each type by risk level and the strategy it requires. High-risk schema changes may call for maintenance windows or special deployment procedures that fall outside the standard blue-green or canary process.
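Such a playbook can be as simple as a lookup table. The change types, risk levels, and recommended approaches below are hypothetical examples of how a team might encode its own rules, not an industry standard.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical playbook: change type -> (risk level, required approach).
PLAYBOOK = {
    "add_nullable_column": (Risk.LOW, "normal blue-green or canary pipeline"),
    "add_index": (Risk.MEDIUM, "build online where supported, during off-peak hours"),
    "rename_column": (Risk.MEDIUM, "expand and contract across at least two releases"),
    "drop_column": (Risk.MEDIUM, "contract only after no running version reads it"),
    "change_column_type": (Risk.HIGH, "maintenance window or dedicated migration"),
}


def plan_for(change_type):
    """Look up the approach for a change type, defaulting to the most cautious path."""
    return PLAYBOOK.get(change_type, (Risk.HIGH, "review manually before scheduling"))
```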

Database changes are a good example of why a deployment strategy needs to be comprehensive, covering not just application code but every component that affects the system's behaviour. Success requires application developers, database specialists, and operations teams to work together on strategies that protect data integrity throughout the deployment process.

When it comes to production deployment, two strategies are especially common: blue-green and canary. Each has its own advantages and disadvantages, and the choice between them usually comes down to the specific needs and goals of the project.

Blue-green deployment maintains two production environments, called blue and green. At any given time one is live and the other is idle. A new release is deployed to the idle environment and tested there; once it is judged ready for production, the router is switched so that all incoming requests go to the new environment. The cut-over causes little or no downtime, and if problems appear you can roll back almost immediately by switching traffic back to the previous environment, which is still running.
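The switch described above can be reduced to a single pointer that decides which environment is live. This minimal Python sketch assumes two environments named blue and green and a caller-supplied `smoke_test` function; all names are hypothetical, and in a real deployment the pointer would be a load balancer target or DNS record.

```python
class BlueGreenSwitch:
    """Sketch of a blue-green router: one pointer decides which environment is live."""

    def __init__(self, environments, live="blue"):
        self.environments = environments  # environment name -> deployed version
        self.live = live
        self.previous = None

    @property
    def idle(self):
        return "green" if self.live == "blue" else "blue"

    def deploy_to_idle(self, version):
        # The live environment keeps serving while the idle one is updated.
        self.environments[self.idle] = version

    def cut_over(self, smoke_test):
        """Send all traffic to the idle environment if it passes smoke_test."""
        candidate = self.idle
        if not smoke_test(self.environments[candidate]):
            return False  # live environment is untouched
        self.previous, self.live = self.live, candidate
        return True

    def rollback(self):
        """Point traffic back at the previous environment, which is still running."""
        if self.previous is None:
            raise RuntimeError("nothing to roll back to")
        self.live, self.previous = self.previous, self.live

    def serve(self):
        return self.environments[self.live]
```

If the smoke test fails, `cut_over` returns `False` and users never see the new version; after a successful cut-over, `rollback()` is instant because the old environment was never torn down.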

Canary deployment, by contrast, rolls changes out gradually to a small subset of users before releasing them to the entire user base. This lets you test new features with real users in the live environment while limiting the impact of any problems. If problems are detected, the canary can be rolled back quickly by routing its traffic back to the stable version. However, this strategy requires more sophisticated monitoring and traffic management, and it may not suit every type of application.
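The gradual rollout and rollback logic can be sketched as a loop over traffic steps. This assumes a hypothetical `error_rate_at` callback standing in for a query to your monitoring system after each step has run for a while; the step sizes and the 1% error threshold are illustrative defaults, not recommendations.

```python
def run_canary(steps, error_rate_at, max_error_rate=0.01):
    """Shift traffic to the canary step by step, rolling back on bad metrics.

    steps: increasing canary traffic percentages, e.g. [5, 25, 50, 100].
    error_rate_at: hypothetical callback returning the observed error rate
        once the given percentage has been live for a while.
    Returns (final canary percentage, percentages actually tried).
    """
    tried = []
    for percent in steps:
        tried.append(percent)  # in practice: update load balancer weights here
        if error_rate_at(percent) > max_error_rate:
            return 0, tried  # roll back: all traffic returns to the stable version
    return (steps[-1] if steps else 0), tried
```

Progressive delivery tools automate this same pattern, adding pause durations between steps and richer metric analysis.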

Ultimately, the choice between blue-green and canary deployment depends on factors such as the size and complexity of the application, the resources available for testing and monitoring, and your tolerance for risk. Both strategies let you release changes to production in a controlled way, reducing the risk of downtime and other issues.