When it comes to harnessing the full potential of containerization, Kubernetes stands out as the go-to orchestrator. It’s not just about running a single container; it’s about running them in unison to achieve greater efficiency and reliability. That’s where multi-container pods come into play. With a proper setup, developers can streamline workflows, improve resource utilization, and enhance application performance.

Article-at-a-Glance

- Understand what multi-container pods are and their role in Kubernetes.
- Discover the benefits multi-container pods offer to developers and organizations.
- Learn the best practices for setting up and configuring multi-container pods.
- Explore running tips to manage and maintain multi-container pods effectively.
- Know when and why to reach out for expert Kubernetes consulting services.

Unlock the Power of Kubernetes Multi-Container Pods

Defining Multi-Container Pods

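A multi-container pod is a single pod, the smallest deployable unit in Kubernetes, that runs more than one container. These containers are scheduled together onto the same node and share the pod’s lifecycle. They also share the pod’s network namespace (one IP address and port space) and can mount the same volumes, while each keeps its own root filesystem from its image. They’re like close-knit teammates working on the same project, each with a specialized role, yet striving towards a common goal.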
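As a concrete illustration, here is a minimal sketch of a two-container pod. It builds the manifest as a Python dict and prints it as JSON, which kubectl apply -f accepts just like YAML. The pod name, the image tags (nginx:1.25, busybox:1.36), and the helper’s polling loop are assumptions chosen for illustration. The helper reaches the web server at localhost because both containers share the pod’s network namespace.

```python
import json


def two_container_pod() -> dict:
    """Build a minimal Pod manifest with an app container and a helper container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "web-with-helper"},  # hypothetical name
        "spec": {
            "containers": [
                {
                    # Main application: nginx listens on port 80 inside the pod.
                    "name": "web",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                },
                {
                    # Helper: reaches the web container over localhost because
                    # both containers share the pod's network namespace.
                    "name": "helper",
                    "image": "busybox:1.36",
                    "command": [
                        "sh",
                        "-c",
                        "while true; do wget -qO- http://localhost:80 > /dev/null"
                        " && echo web is up; sleep 10; done",
                    ],
                },
            ]
        },
    }


if __name__ == "__main__":
    # Redirect to a file and apply it with: kubectl apply -f pod.json
    print(json.dumps(two_container_pod(), indent=2))
```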

Core Benefits for Developers

Why should you, as a developer, care about multi-container pods? The answer lies in the core benefits:

- Simplified Communication: Containers in the same pod share one network namespace, so they can reach each other on localhost without any service discovery.
- Shared Resources: They can mount the same volumes declared in the pod spec, which makes it easy to share files and data.
- Efficient Resource Utilization: Helper processes are scheduled onto the same node as the application and talk to it over localhost or shared volumes. This avoids the extra network hops and per-pod overhead of running them as separate pods.

These advantages mean your applications can be more efficient, more resilient, and easier to manage.

Best Practices for Multi-Container Pod Configuration

Container Cohesion Strategy

When packing containers into a pod, think about their relationship to each other. Kubernetes schedules, scales, and replaces pods as a unit, so containers belong together only when they are tightly coupled in function and need to share a lifecycle, a network namespace, or local files. For instance, a web server and a local cache used only by that server can live in the same pod because they need to work closely together. Understanding the difference between Docker and Kubernetes can further clarify why certain containers are best deployed together.

Resource Allocation and Limits

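One of the most critical aspects of multi-container pods is resource management. Each container should have its own resource requests and limits defined. The scheduler places the pod using the sum of its containers’ requests, while limits are enforced per container. This combination ensures that no single container can hog the CPU or memory, starve the others, and degrade the pod’s overall performance.

For example, if you have a container that’s known to consume a lot of memory, set a memory limit on it. If the container exceeds that limit, it is OOM-killed and restarted according to the pod’s restart policy, instead of squeezing the other containers in the pod.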
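To show how per-container requests and limits add up, the sketch below defines a pod with an application and a small sidecar, then sums the requests the scheduler would consider. The image names and all CPU and memory figures are hypothetical. The quantity parsers handle only the simple forms used here, not the full Kubernetes quantity syntax. Init containers are ignored in the sum.

```python
import json


# Simplified quantity parsers: they handle only "250m" or whole/decimal cores
# for CPU, and Ki/Mi/Gi suffixes or plain bytes for memory.
def cpu_millicores(quantity: str) -> int:
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(float(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    units = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}
    for suffix, factor in units.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def container(name: str, image: str, requests: dict, limits: dict) -> dict:
    return {"name": name, "image": image,
            "resources": {"requests": requests, "limits": limits}}


pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "app-with-sidecar"},
    "spec": {
        "containers": [
            # Main application: the memory limit caps it so it cannot starve the sidecar.
            container("app", "example.com/app:1.0",  # hypothetical image
                      {"cpu": "250m", "memory": "256Mi"},
                      {"cpu": "500m", "memory": "512Mi"}),
            # Lightweight helper with a much smaller budget.
            container("sidecar", "example.com/sidecar:1.0",  # hypothetical image
                      {"cpu": "50m", "memory": "64Mi"},
                      {"cpu": "100m", "memory": "128Mi"}),
        ]
    },
}


def pod_requests(pod: dict) -> tuple:
    """Sum the regular containers' requests as (millicores, bytes)."""
    cs = pod["spec"]["containers"]
    cpu = sum(cpu_millicores(c["resources"]["requests"]["cpu"]) for c in cs)
    mem = sum(memory_bytes(c["resources"]["requests"]["memory"]) for c in cs)
    return cpu, mem


if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
    print(pod_requests(pod))
```

With these numbers, the scheduler needs a node with 300 millicores and 320Mi of memory unreserved. Each limit still applies only to its own container.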

Health Checks and Probes

Health checks are vital to ensure that your containers are running smoothly. Kubernetes provides liveness and readiness probes to check the health of your containers:

- Liveness Probes: These check whether a container is still working. If a liveness probe keeps failing, the kubelet kills and restarts that container.
- Readiness Probes: These check whether a container is ready to serve traffic. Readiness is tracked per container but applied per pod. If any container in the pod fails its readiness probe, the whole pod is removed from the endpoints of matching Services until every container passes again.

Setting up these probes helps maintain the reliability of your services by making sure traffic is only routed to pods whose containers are all healthy and ready.

Imagine a scenario where a container is stuck in a loop and cannot handle requests. A liveness probe would detect this and restart the container, potentially resolving the issue without manual intervention.
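Here is a sketch of how the two probe types might be attached to the containers of one pod. The images, the /healthz and /ready endpoints, the ports, and the timing values are assumptions for illustration only. The small pod_is_ready helper encodes the rule described above, that a pod is ready only when all of its containers are ready. It ignores readiness gates.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "api-with-proxy"},
    "spec": {
        "containers": [
            {
                "name": "api",
                "image": "example.com/api:1.0",  # hypothetical image
                "ports": [{"containerPort": 8080}],
                # Restart this container if /healthz fails 3 checks in a row.
                "livenessProbe": {
                    "httpGet": {"path": "/healthz", "port": 8080},  # hypothetical endpoint
                    "initialDelaySeconds": 10,
                    "periodSeconds": 10,
                    "failureThreshold": 3,
                },
                # Keep the pod out of Service endpoints until /ready succeeds.
                "readinessProbe": {
                    "httpGet": {"path": "/ready", "port": 8080},  # hypothetical endpoint
                    "periodSeconds": 5,
                },
            },
            {
                "name": "proxy",
                "image": "example.com/proxy:1.0",  # hypothetical image
                "ports": [{"containerPort": 9000}],
                # A plain TCP check is enough for a simple proxy.
                "readinessProbe": {"tcpSocket": {"port": 9000}, "periodSeconds": 5},
            },
        ]
    },
}


def pod_is_ready(container_ready: dict) -> bool:
    """A pod counts as ready only when every one of its containers is ready."""
    return all(container_ready.values())


if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```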

Security Contexts and Roles

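Security in Kubernetes is non-negotiable, and multi-container pods are no exception. Each container may have different security requirements. A security context, set at the pod level as a default and overridden per container where needed, dictates how each container runs. By specifying user IDs, dropping Linux capabilities, blocking privilege escalation, and making root filesystems read-only, you can ensure that each container has only the permissions its role within the pod requires. Network and storage access work differently. All containers in a pod share one network identity, so traffic is restricted for the pod as a whole with network policies. Storage access is controlled by which volumes each container mounts, and whether it mounts them read-only.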
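The sketch below shows pod-level defaults combined with per-container overrides. The user and group IDs and the image names are hypothetical. The effective_run_as_user helper illustrates the precedence rule: a container-level runAsUser wins over the pod-level value.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "secured-app"},
    "spec": {
        # Pod-level defaults apply to every container unless overridden.
        "securityContext": {
            "runAsNonRoot": True,
            "runAsUser": 1000,
            "fsGroup": 2000,
            "seccompProfile": {"type": "RuntimeDefault"},
        },
        "containers": [
            {
                "name": "app",
                "image": "example.com/app:1.0",  # hypothetical image
                "securityContext": {
                    "allowPrivilegeEscalation": False,
                    "capabilities": {"drop": ["ALL"]},
                },
            },
            {
                "name": "log-shipper",
                "image": "example.com/log-shipper:1.0",  # hypothetical image
                # Container-level settings override the pod-level runAsUser.
                "securityContext": {
                    "runAsUser": 1001,
                    "readOnlyRootFilesystem": True,
                    "allowPrivilegeEscalation": False,
                    "capabilities": {"drop": ["ALL"]},
                },
            },
        ],
    },
}


def effective_run_as_user(pod: dict, name: str):
    """Return the UID a container runs as: container setting first, then pod default."""
    pod_ctx = pod["spec"].get("securityContext", {})
    for c in pod["spec"]["containers"]:
        if c["name"] == name:
            return c.get("securityContext", {}).get("runAsUser", pod_ctx.get("runAsUser"))
    raise KeyError(name)


if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```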

Managing Persistent Storage

Even in a transient environment like Kubernetes, some data must persist. That’s where persistent volumes (PVs) and persistent volume claims (PVCs) come into play. When configuring multi-container pods, make sure persistent storage is accessible to the containers that need it without them interfering with each other. In practice, you declare the PVC once as a pod-level volume and mount it only into the containers that need it. You then keep their data apart with separate directories (for example, using subPath) or read-only mounts.

Consider a database container that needs disk storage for its data, and a logging container that writes audit logs. Both can mount the same PVC, each into its own subdirectory, so the data survives container restarts and pod replacements. Note that a PV binds to exactly one PVC, so the containers share a single claim rather than each holding a separate claim on the same PV.
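Here is a sketch of the database-plus-audit-log layout just described. It assumes a PVC named app-data already exists in the pod’s namespace. The claim name, the images, and the mount paths are hypothetical. The volume is declared once and mounted twice with different subPath values.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "db-with-audit"},
    "spec": {
        # The claim is declared once, at pod level; "app-data" is a
        # hypothetical PVC assumed to exist in the same namespace.
        "volumes": [
            {"name": "data", "persistentVolumeClaim": {"claimName": "app-data"}}
        ],
        "containers": [
            {
                "name": "db",
                "image": "example.com/db:1.0",  # hypothetical image
                # subPath keeps the database files in their own directory.
                "volumeMounts": [
                    {"name": "data", "mountPath": "/var/lib/db", "subPath": "db"}
                ],
            },
            {
                "name": "audit-logger",
                "image": "example.com/audit-logger:1.0",  # hypothetical image
                "volumeMounts": [
                    {"name": "data", "mountPath": "/var/log/audit", "subPath": "audit"}
                ],
            },
        ],
    },
}


def mounts_by_volume(pod: dict) -> dict:
    """Map each pod volume name to the (container, subPath) pairs that mount it."""
    result = {}
    for c in pod["spec"]["containers"]:
        for m in c.get("volumeMounts", []):
            result.setdefault(m["name"], []).append((c["name"], m.get("subPath", "")))
    return result


if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```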

Running Tips for Multi-Container Pods in Kubernetes

Pod Lifecycle Management

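Understanding the pod lifecycle is crucial. From the moment you create a pod to the time it’s terminated, every phase matters. Init containers can prepare your environment before the main containers start. Lifecycle hooks also make a difference in how smoothly your containers start and shut down. A postStart hook runs right after a container is created, with no guarantee that it runs before the container’s entrypoint. A preStop hook runs before the container is sent its termination signal, and it must finish within the pod’s termination grace period.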
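Here is a sketch of both hooks on a single container. Hooks are configured per container, so each container in a multi-container pod can have its own. The image tag, the grace period, and the hook commands are illustrative assumptions. The preStop command asks nginx to finish in-flight requests before the container receives its termination signal.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-graceful"},
    "spec": {
        # Upper bound on shutdown time, including the preStop hook.
        "terminationGracePeriodSeconds": 30,
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "lifecycle": {
                    # Runs right after the container is created; Kubernetes does
                    # not guarantee it runs before the container's entrypoint.
                    "postStart": {
                        "exec": {"command": ["sh", "-c", "date > /tmp/started-at"]}
                    },
                    # Runs before the container is signalled to stop; asks nginx
                    # to drain gracefully, then pauses briefly.
                    "preStop": {
                        "exec": {"command": ["sh", "-c", "nginx -s quit; sleep 5"]}
                    },
                },
            }
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```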

Inter-Container Communication

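Inside a multi-container pod, containers need to talk to each other. Because they share the pod’s network namespace, they really are on the same localhost. One container reaches another at localhost on that container’s port, which also means no two containers in a pod can listen on the same port. They also share an IPC namespace, so standard inter-process communication such as System V semaphores or POSIX shared memory works between them. Shared volumes add file- and socket-based options. Choosing the right mechanism for each interaction is key to making your containers work together seamlessly.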
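The sketch below shows an app and a metrics exporter in one pod. The exporter reaches the app over localhost. The optional shareProcessNamespace field lets containers see each other’s processes. The images, the ports, and the TARGET_URL variable are hypothetical. The port_conflicts helper only checks declared containerPort values, because declaring a port does not stop a process from binding another one.

```python
import json
from collections import Counter

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "app-with-metrics"},
    "spec": {
        # Optional: lets containers in the pod see (and signal) each other's processes.
        "shareProcessNamespace": True,
        "containers": [
            {
                "name": "app",
                "image": "example.com/app:1.0",  # hypothetical image
                "ports": [{"containerPort": 8080}],
            },
            {
                "name": "metrics-exporter",
                "image": "example.com/exporter:1.0",  # hypothetical image
                # Hypothetical setting: the exporter scrapes the app over localhost.
                "env": [{"name": "TARGET_URL", "value": "http://localhost:8080/metrics"}],
                "ports": [{"containerPort": 9100}],
            },
        ],
    },
}


def port_conflicts(pod: dict) -> list:
    """Return declared container ports that appear more than once in the pod.

    All containers share one network namespace, so two of them cannot
    listen on the same port.
    """
    counts = Counter(
        p["containerPort"]
        for c in pod["spec"]["containers"]
        for p in c.get("ports", [])
    )
    return sorted(port for port, n in counts.items() if n > 1)


if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
    print("conflicts:", port_conflicts(pod))
```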

Logging and Monitoring Techniques

Keep a close eye on your containers. Logging and monitoring are vital for understanding what’s happening within your multi-container pods. Centralized logging, using tools like Fluentd or Logstash, can help you aggregate logs from all containers, making it easier to troubleshoot issues when they arise.

Update and Rollback Strategies

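Updates and rollbacks are a part of life in the container world. In practice, you rarely update a pod directly. Instead, you change the pod template of a controller such as a Deployment, which replaces old pods with new ones. Deployments support rolling updates (the default) and a Recreate strategy. Patterns such as blue-green deployments are usually built on top of them, for example by switching a Service’s selector between two Deployments. It’s also essential to have a rollback plan in case things don’t go as expected. Test your update and rollback procedures regularly to ensure they work as intended.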
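Here is a sketch of a Deployment that rolls out a multi-container pod template one pod at a time. The name, the labels, the replica count, the surge settings, and the log-agent image are assumptions for illustration.

```python
import json

labels = {"app": "web"}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        # Old ReplicaSets kept so a rollback has earlier revisions to return to.
        "revisionHistoryLimit": 5,
        "strategy": {
            "type": "RollingUpdate",
            # Add at most one extra pod at a time and never drop below 3 available pods.
            "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
        },
        "selector": {"matchLabels": labels},
        "template": {
            "metadata": {"labels": labels},
            "spec": {
                "containers": [
                    {"name": "web", "image": "nginx:1.25"},
                    {"name": "log-agent", "image": "example.com/log-agent:1.0"},  # hypothetical
                ]
            },
        },
    },
}

if __name__ == "__main__":
    print(json.dumps(deployment, indent=2))
```

After you change an image and apply the manifest again, kubectl rollout status deployment/web follows the update, and kubectl rollout undo deployment/web returns to the previous revision.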

Boost Your Efficiency with Expert Strategies

Advanced Networking Approaches

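Networking in Kubernetes can be complex, especially with multi-container pods. Network policies control the flow of traffic between pods so that only the necessary communication happens. They do not apply between containers in the same pod, which share one network namespace. Limiting which pods can talk to each other narrows the paths an attacker can use to move between workloads. Keep in mind that network policies only take effect if your cluster’s network plugin enforces them.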
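Here is a sketch of a NetworkPolicy that admits traffic to pods labeled app=web only from pods labeled role=frontend on TCP port 8080. The labels and the port are hypothetical. The ingress_allowed function is a deliberately simplified model of how one policy’s ingress rules match a source pod. It ignores namespaceSelector, ipBlock, named ports, and any other policies in the cluster.

```python
import json

policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "web-allow-frontend"},
    "spec": {
        # Applies to pods labelled app=web in the policy's namespace.
        "podSelector": {"matchLabels": {"app": "web"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                "from": [{"podSelector": {"matchLabels": {"role": "frontend"}}}],
                "ports": [{"protocol": "TCP", "port": 8080}],
            }
        ],
    },
}


def _selector_matches(selector: dict, labels: dict) -> bool:
    """matchLabels-only check; an empty selector matches every pod."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def ingress_allowed(policy: dict, src_labels: dict, port: int, protocol: str = "TCP") -> bool:
    """Simplified check of whether one policy admits traffic into a selected pod."""
    for rule in policy["spec"].get("ingress", []):
        peers = rule.get("from") or []
        ports = rule.get("ports") or []
        # A missing or empty "from"/"ports" list matches everything.
        peer_ok = not peers or any(
            "podSelector" in p and _selector_matches(p["podSelector"], src_labels)
            for p in peers
        )
        port_ok = not ports or any(
            p.get("port") in (None, port) and p.get("protocol", "TCP") == protocol
            for p in ports
        )
        if peer_ok and port_ok:
            return True
    return False


if __name__ == "__main__":
    print(json.dumps(policy, indent=2))
```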

Utilizing Init Containers Effectively

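Init containers are the unsung heroes of Kubernetes. They run before your main containers, one at a time in the order you list them, and each must finish successfully before the next one starts. That makes them perfect for setup scripts, waiting on dependencies, and other preparatory tasks. Use them wisely to ensure your environment is correctly configured for your application’s needs.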
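Here is a sketch of two ordered init containers. The first waits until a Service named db resolves in cluster DNS, and the second renders a page into a shared emptyDir volume that nginx then serves. The Service name, the default cluster domain cluster.local, and the image tags are assumptions.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-init"},
    "spec": {
        "volumes": [{"name": "html", "emptyDir": {}}],
        # Init containers run one at a time, in list order; each must exit
        # successfully before the next one (or the app containers) start.
        "initContainers": [
            {
                # Block until the hypothetical "db" Service resolves in cluster DNS
                # (assumes the default cluster.local domain).
                "name": "wait-for-db",
                "image": "busybox:1.36",
                "command": [
                    "sh",
                    "-c",
                    "until nslookup db.$(cat /var/run/secrets/kubernetes.io/serviceaccount/namespace)"
                    ".svc.cluster.local; do echo waiting for db; sleep 2; done",
                ],
            },
            {
                # Generate content into the shared volume for nginx to serve.
                "name": "render-page",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "echo '<h1>ready</h1>' > /work/index.html"],
                "volumeMounts": [{"name": "html", "mountPath": "/work"}],
            },
        ],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "volumeMounts": [{"name": "html", "mountPath": "/usr/share/nginx/html"}],
            }
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```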

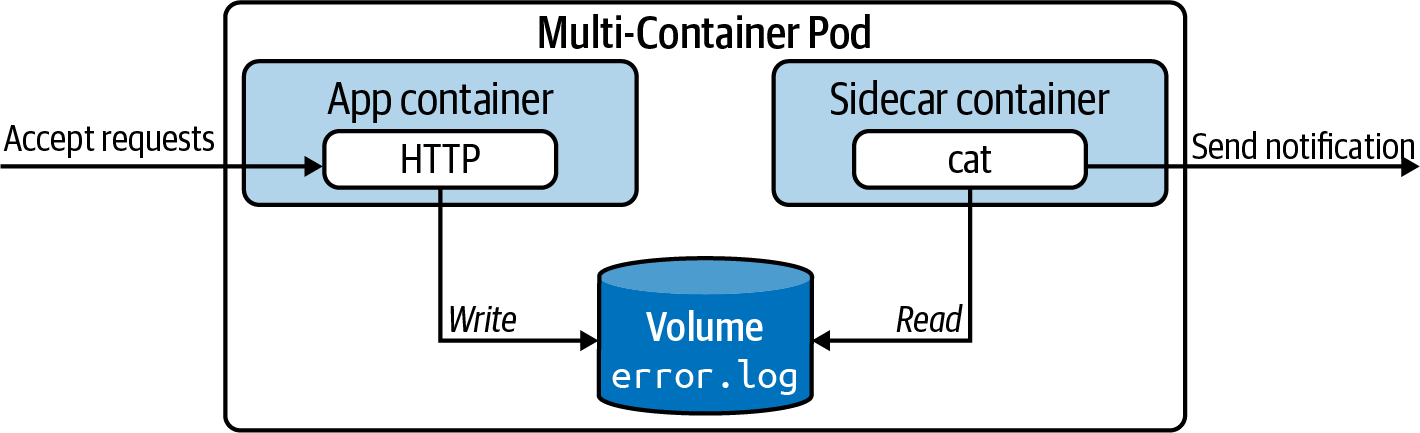

Sidecar Pattern Implementation

The sidecar pattern is a powerful design pattern in Kubernetes. It pairs a helper container with a main container, enhancing or extending the main container’s functionality without changing its code. For example, you might have a sidecar container that handles logging or monitoring. It can be built, versioned, and reused independently of the main application image, although changing either container’s image still means rolling out new pods.
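Here is a sketch of a logging sidecar. The app writes to a file on a shared emptyDir volume, and the sidecar streams that file to its own standard output. From there, kubectl logs with -c log-streamer, or a node-level log agent, can pick it up. A busybox loop stands in for a real application, and the paths are hypothetical.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "app-with-log-sidecar"},
    "spec": {
        "volumes": [{"name": "logs", "emptyDir": {}}],
        "containers": [
            {
                # Stand-in for an application that only writes logs to a file.
                "name": "app",
                "image": "busybox:1.36",
                "command": [
                    "sh",
                    "-c",
                    'while true; do echo "$(date) handled request" >> /var/log/app/app.log; sleep 5; done',
                ],
                "volumeMounts": [{"name": "logs", "mountPath": "/var/log/app"}],
            },
            {
                # Sidecar: follows the file and re-emits it on its own stdout.
                "name": "log-streamer",
                "image": "busybox:1.36",
                "command": ["tail", "-n+1", "-F", "/var/log/app/app.log"],
                "volumeMounts": [
                    {"name": "logs", "mountPath": "/var/log/app", "readOnly": True}
                ],
            },
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```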

Implementing Multi-Container Pod Setups

Step-by-Step Process

Implementing a multi-container pod setup requires a careful approach. Start by defining your pod’s structure in a YAML (or JSON) manifest, specifying each container and its configuration. Then, use kubectl apply to submit this configuration to your cluster. Monitor the pod with kubectl get pods, whose READY column shows how many of the pod’s containers are ready, and kubectl describe pod, which lists the events for each container, to confirm that each container starts up as expected.
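The write-then-apply step can be scripted. The sketch below writes a manifest to disk as JSON, which kubectl accepts just like YAML, and returns the kubectl apply command. It only runs that command when dry_run is False and kubectl is on the PATH. The function name, the file name, and the demo pod are hypothetical.

```python
import json
import shutil
import subprocess
from pathlib import Path


def apply_manifest(manifest: dict, path: str, dry_run: bool = True) -> list:
    """Write the manifest as JSON and return (or run) the kubectl apply command."""
    Path(path).write_text(json.dumps(manifest, indent=2))
    cmd = ["kubectl", "apply", "-f", path]
    if not dry_run:
        if shutil.which("kubectl") is None:
            raise RuntimeError("kubectl not found on PATH")
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "demo"},  # hypothetical name
        "spec": {
            "containers": [
                {"name": "web", "image": "nginx:1.25"},
                {"name": "helper", "image": "busybox:1.36", "command": ["sleep", "3600"]},
            ]
        },
    }
    print(apply_manifest(pod, "pod.json"))
```

Once the manifest is applied, kubectl get pod demo should eventually report 2/2 in the READY column. kubectl describe pod demo shows the events if one of the containers does not start.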

Common Pitfalls and How to Avoid Them

There are several common pitfalls when working with multi-container pods:

- Overloading a pod with too many containers can lead to resource contention.
- Not properly defining health checks can result in undetected failures.
- Failing to set resource limits can lead to unstable pods.

Avoid these issues by carefully planning your pod’s architecture and being meticulous with your configurations. For a practical guide on setting up health checks, refer to our article on Kubernetes startup probes.
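A simple pre-deployment check can catch these pitfalls. The sketch below flags pods with more containers than a threshold, and containers without CPU or memory limits or without liveness and readiness probes. The threshold of four is an arbitrary value for this sketch, not a Kubernetes limit. Whether every sidecar needs both probes is a policy choice you may want to relax.

```python
MAX_CONTAINERS = 4  # arbitrary threshold for this sketch, not a Kubernetes limit


def lint_pod(pod: dict) -> list:
    """Flag the pitfalls listed above: too many containers, missing limits, missing probes."""
    problems = []
    containers = pod["spec"]["containers"]
    if len(containers) > MAX_CONTAINERS:
        problems.append(f"pod has {len(containers)} containers (more than {MAX_CONTAINERS})")
    for c in containers:
        name = c["name"]
        limits = c.get("resources", {}).get("limits", {})
        for resource in ("cpu", "memory"):
            if resource not in limits:
                problems.append(f"{name}: no {resource} limit")
        for probe in ("livenessProbe", "readinessProbe"):
            if probe not in c:
                problems.append(f"{name}: no {probe}")
    return problems


if __name__ == "__main__":
    sample = {
        "spec": {
            "containers": [
                {
                    "name": "web",
                    "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
                    "livenessProbe": {"httpGet": {"path": "/", "port": 80}},
                    "readinessProbe": {"httpGet": {"path": "/", "port": 80}},
                },
                {"name": "helper"},
            ]
        }
    }
    for problem in lint_pod(sample):
        print(problem)
```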

Real-World Scenarios and Case Studies

Let’s talk real-world impact. Multi-container pods are not just a theoretical concept; they are being used by companies to solve complex problems. For instance, a popular e-commerce platform uses multi-container pods to couple their web application with a caching service, drastically reducing load times and improving user experience.

Another case is a streaming service that employs multi-container pods to run their video service alongside a logging sidecar container, ensuring that any issues with streaming can be quickly identified and addressed.

These examples demonstrate how multi-container pods can be used to enhance performance and reliability in a real-world setting.

Getting Started with Your First Multi-Container Pod

Preparing Your Development Environment

Before diving into the world of multi-container pods, you need to set up your development environment. This includes installing kubectl, the command-line tool for interacting with your cluster, and a local cluster such as minikube or kind. Then get familiar with the basic kubectl commands.

Running Your Containers: A Beginner’s Tutorial

Once your environment is ready, it’s time to get your hands dirty. Start by creating a simple multi-container pod configuration file. Define two containers that will share a volume – one to write data to the shared volume and another to read from it. Apply the configuration using kubectl and watch as Kubernetes brings your multi-container pod to life.
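Here is a sketch of the writer/reader pod described above. The writer appends a timestamp to a file on a shared emptyDir volume every five seconds, and the reader prints the newest line on the same schedule. The pod name, the file path, and the busybox:1.36 image tag are assumptions.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "writer-reader"},
    "spec": {
        # emptyDir lives as long as the pod and is visible to both containers.
        "volumes": [{"name": "shared", "emptyDir": {}}],
        "containers": [
            {
                "name": "writer",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "while true; do date >> /data/out.txt; sleep 5; done"],
                "volumeMounts": [{"name": "shared", "mountPath": "/data"}],
            },
            {
                "name": "reader",
                "image": "busybox:1.36",
                # Prints the file's latest line every 5 seconds.
                "command": [
                    "sh",
                    "-c",
                    "while true; do tail -n 1 /data/out.txt 2>/dev/null; sleep 5; done",
                ],
                "volumeMounts": [{"name": "shared", "mountPath": "/data", "readOnly": True}],
            },
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```

Save the output to writer-reader.json and run kubectl apply -f writer-reader.json. Then kubectl logs writer-reader -c reader should show the timestamps the writer produced.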

Explore Our Expert Kubernetes Consulting Services

Efficiency is key in development, and sometimes, you need a guiding hand to unlock the full potential of Kubernetes. That’s where our expertise comes in. We provide tailored consulting services to help you design, implement, and optimize your Kubernetes infrastructure. We can even manage the whole thing for you.

Custom Solutions to Scale Your Applications

Every application is unique, and so are the challenges that come with scaling them. We understand this and offer custom solutions that cater specifically to your needs. Whether you’re looking to refine your multi-container pod strategy or build a robust Kubernetes environment from scratch, we’re here to help.

Contact Us for Tailored Kubernetes Strategies

If you’re looking to take your Kubernetes setup to the next level, don’t hesitate to reach out. Our team of seasoned experts is ready to assist you in crafting a Kubernetes strategy that’s as efficient and reliable as it gets. Contact us today to learn how we can help you streamline your development workflow with multi-container pods.

Frequently Asked Questions

What Are the Key Advantages of Multi-Container Pods?

The key advantages of using multi-container pods include:

- Tight coupling of related containers for efficient communication and resource sharing.
- Enhanced resource utilization, leading to cost savings and improved performance.
- Isolation of responsibilities, allowing for easier updates and maintenance.

How Do I Handle Logging Across Multiple Containers?

Centralized logging is your friend when it comes to multi-container pods. Tools like Fluentd or Elastic Stack can aggregate logs from all containers, giving you a holistic view of your application’s behavior and making it easier to pinpoint issues.

What Is the Ideal Scenario for Using Init Containers?

Init containers are perfect for setup tasks that need to run before your main containers start. This could include tasks like database migrations, configuration file creation, or pre-fetching dependencies.

How Can I Ensure Efficient Resource Usage in Multi-Container Pods?

To ensure efficient resource usage:

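- Define resource requests and limits for each container.
- Monitor pod performance and adjust configurations as needed.
- Use Quality of Service (QoS) classes to prioritize critical workloads. You don’t set a QoS class directly; Kubernetes derives it from requests and limits. A pod whose containers all have CPU and memory requests equal to their limits gets the Guaranteed class. That makes it the least likely to be evicted or OOM-killed when a node runs short of memory.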
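To make the derivation concrete, here is an approximation of how a pod’s QoS class follows from its containers’ CPU and memory settings. The pod is Guaranteed when every container has CPU and memory limits with equal requests. An omitted request defaults to its limit. The pod is BestEffort when no container sets any CPU or memory request or limit, and Burstable otherwise. This is a simplified sketch, not Kubernetes’ own code. It compares quantities as strings, so "500m" and "0.5" count as different, and the caller must include init containers in the list, since Kubernetes considers those too.

```python
RESOURCES = ("cpu", "memory")


def qos_class(containers: list) -> str:
    """Approximate the QoS class Kubernetes would assign to a pod with these containers."""
    any_set = False
    guaranteed = True
    for c in containers:
        res = c.get("resources", {})
        limits = {k: v for k, v in res.get("limits", {}).items() if k in RESOURCES}
        explicit = {k: v for k, v in res.get("requests", {}).items() if k in RESOURCES}
        # An omitted request defaults to the limit for that resource.
        requests = {**limits, **explicit}
        if limits or explicit:
            any_set = True
        for r in RESOURCES:
            if r not in limits or requests[r] != limits[r]:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


if __name__ == "__main__":
    app = {"name": "app", "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}}}
    sidecar = {"name": "sidecar"}
    print(qos_class([app]), qos_class([app, sidecar]))
```

Note that a single sidecar without limits is enough to drop an otherwise Guaranteed pod to Burstable.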

When Should I Choose Multi-Container Pods Over Single Container Pods?

Choose multi-container pods when your containers need to work closely together, share resources, and communicate on the same local network. They’re ideal for scenarios where containers complement each other’s functionality, like a main application with a sidecar for logging or monitoring.