Summary

- Google’s GKE generally offers more advanced features and automation than Azure’s AKS, with Google’s original creation of Kubernetes giving them an advantage

- AKS’s Free tier charges no control-plane fee (production-oriented Standard tier clusters are billed per hour), while GKE charges a per-cluster management fee beyond its free tier, potentially saving thousands annually for multi-cluster deployments

- GKE usually shows faster cluster provisioning times and more advanced auto-scaling capabilities, but the performance gap has significantly decreased

- Companies already using Microsoft’s ecosystem will find better integration with AKS, while those using Google Cloud services will benefit from GKE’s seamless connections

- Both platforms now support multi-cloud strategies, though GKE’s Anthos platform (now part of GKE Enterprise) currently offers more mature hybrid cloud capabilities than Azure Arc

AKS vs GKE: The Clash of the Kubernetes Titans

The decision to choose between Azure Kubernetes Service (AKS) and Google Kubernetes Engine (GKE) is not just a technical one, but a strategic one that can impact your organization’s cloud journey for years to come. As containerization becomes the norm for modern application deployment, these two managed Kubernetes platforms have emerged as leaders, each with unique advantages that could make or break your DevOps transformation. This comprehensive comparison will guide you through the critical differences that matter most to real-world deployments.

Since Google launched Kubernetes in 2014, the managed Kubernetes landscape has changed significantly. What was once a field dominated by Google has now become a competitive market where Microsoft’s Azure has not only caught up in terms of functionality but also offers attractive integration with its broader cloud ecosystem. To understand these platforms beyond the marketing hype, it is necessary to examine their architectural differences, operational capabilities, and cost structures.

As companies continue to incorporate multi-cloud strategies, the choice between AKS and GKE is rarely a standalone decision. Your existing cloud footprint, your team’s expertise, and your future cloud roadmap are all critical factors in deciding which platform will provide the most benefit. Let’s examine how these Kubernetes heavyweights compare across the factors that are most important to both enterprise IT teams and cloud-native startups.

Market Position and Adoption Trends

The market for managed Kubernetes services is expanding rapidly, and GKE currently leads with around 37% market share. This lead stems primarily from Google’s first-mover advantage: Google developed Kubernetes in-house before open-sourcing it in 2014. However, AKS has been catching up quickly, especially among enterprise clients already invested in the Microsoft ecosystem. GKE has a stronger presence among tech companies and startups, while AKS is more popular with traditional enterprises moving from Windows-centric environments.

Experts in the field anticipate that GKE will continue to grow faster than AKS until 2025, even though Microsoft is working hard to improve AKS capabilities and close the gap. Companies that use multi-cloud strategies are increasingly using both of these platforms. Recent surveys show that 23% of businesses are now running production workloads on both AKS and GKE. This approach of using both platforms allows teams to take advantage of the unique strengths of each service and avoid being tied to a single vendor.

Competition has driven rapid innovation on both platforms, and feature parity has improved considerably in the past few years. What was once a clear technical advantage for GKE has become a more nuanced comparison in which ecosystem integration and existing cloud investments, rather than technical capabilities alone, are often the deciding factors.

Background and Context of Cloud Providers

Google’s close relationship with Kubernetes gives GKE a significant advantage. As the original creator of the Kubernetes project, Google built GKE on years of internal experience running containerized workloads at scale with its Borg system. This history explains why GKE is often first to introduce advanced Kubernetes features, frequently shipping new capabilities months before competing platforms. The platform also benefits from Google’s experience operating some of the world’s largest container deployments.

Microsoft’s Azure Kubernetes Service (AKS) started a bit later than Google’s GKE, but it quickly gained traction as part of Microsoft’s broader cloud transformation under CEO Satya Nadella. While Microsoft initially had to play catch-up to GKE, the company has invested heavily in AKS development and has leveraged its enterprise relationships to drive adoption. The integration of AKS with Azure’s comprehensive set of services, particularly in hybrid cloud scenarios, has become a key selling point for organizations that are already using Microsoft technologies. AKS also benefits from Azure’s strong presence in regulated industries, where Microsoft’s compliance portfolio gives it a competitive edge.

The divergent development paths of these platforms have resulted in unique platform characteristics. GKE leans towards automation, advanced features, and integration with Google Cloud’s data and AI services. On the other hand, AKS prioritizes enterprise readiness, hybrid deployments, and smooth integration with Microsoft’s developer tools ecosystem. Recognizing these philosophical differences will help clarify many of the technical and operational differences we’ll discuss in this comparison.

How Pricing Models Affect Your Budget

When it comes to choosing between cloud services that offer similar features, the deciding factor often comes down to cost. The pricing models for AKS and GKE are different in ways that can significantly affect your overall spending, particularly as your scale increases. Both platforms charge for the underlying compute resources that power your Kubernetes nodes, but the differences in management fees and included services can result in very different cost profiles, depending on how you use the services.

The primary difference is how these platforms bill for the Kubernetes control plane, the brain of your cluster that handles orchestration. This seemingly minor difference can amount to thousands of dollars a year as your deployment grows, so it is critical to model costs on your specific workload characteristics rather than relying on simplified marketing comparisons. For more insights on Kubernetes solutions, explore our article on managed vs self-hosted Kubernetes solutions.

Saving Thousands with Free Tier Differences

On its Free tier, Azure Kubernetes Service charges no control-plane management fee, regardless of cluster size or how many clusters you run. You pay only for the virtual machines, storage, and networking resources behind your workloads, while Microsoft absorbs the cost of the Kubernetes orchestration layer. The Free tier carries no financially backed uptime SLA, however; the Standard tier, which adds one and is recommended for production, is billed at $0.10 per cluster per hour. The Free tier’s pricing advantage is particularly significant for organizations that run many small clusters, because the management layer adds no per-cluster cost.

Google Kubernetes Engine takes a different approach: its free tier provides a monthly credit per billing account that covers the management fee for one zonal or Autopilot cluster, and every other cluster’s control plane is billed at $0.10 per hour (about $73 per month). For businesses whose microservices architecture calls for multiple isolated clusters, this model can cost significantly more than the AKS Free tier. That said, GKE’s Autopilot mode, which automatically provisions and manages nodes, can offset some of these costs by using resources more efficiently for certain workloads.

Your architecture will greatly influence the financial impact of these pricing models. If your company operates 10 separate Kubernetes clusters, which is typical in organizations that strictly separate environments or teams, running them on the AKS Free tier could save you around $7,900 per year in management fees alone compared to GKE (nine billable clusters × $0.10 × 8,760 hours ≈ $7,884, with the tenth covered by GKE’s free tier). On AKS’s Standard tier, the per-cluster fees are comparable. This estimate also ignores resource-efficiency differences that might make GKE more cost-effective in some situations.
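The management-fee comparison reduces to simple arithmetic, and the result hinges on how many clusters the free tier covers. The sketch below assumes GKE bills $0.10 per cluster-hour with one cluster covered by the free-tier credit and that AKS runs on its Free tier; `annual_control_plane_fee` is a hypothetical helper, not part of any SDK.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours in a non-leap year


def annual_control_plane_fee(clusters: int, hourly_fee: float, free_clusters: int = 0) -> float:
    """Yearly management fee when every cluster beyond `free_clusters` is billed `hourly_fee`."""
    billable = max(clusters - free_clusters, 0)
    return billable * hourly_fee * HOURS_PER_YEAR


# Assumed rates: GKE at $0.10/cluster-hour with one cluster covered by the free tier,
# AKS on the Free tier with no management fee.
gke = annual_control_plane_fee(10, hourly_fee=0.10, free_clusters=1)
aks_free = annual_control_plane_fee(10, hourly_fee=0.0)
print(f"GKE ${gke:,.0f} vs AKS Free ${aks_free:,.0f}: difference ${gke - aks_free:,.0f}")
# GKE $7,884 vs AKS Free $0: difference $7,884
```

With `free_clusters=0` the same call returns $8,760. Passing `hourly_fee=0.10` for AKS models its Standard tier (assuming that tier’s $0.10 per cluster-hour rate), under which the gap largely disappears.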

Costs Associated with Node Management

Beyond control-plane fees, the way nodes are managed also affects costs. GKE’s Autopilot mode automatically provisions and manages nodes based on your workloads’ needs and, by default, bills for the CPU, memory, and ephemeral storage your pods request rather than for whole nodes. This reduces manual capacity planning and can cut resource waste. While this more automated approach often leads to better resource utilization, its unit prices run 30-40% higher than the equivalent compute in Standard GKE.

“We transitioned from traditional GKE to Autopilot and observed a 22% reduction in our per-pod expenses, even with the premium pricing. The automatic right-sizing and removal of underused capacity more than made up for the higher unit price.” – Senior Cloud Architect at a SaaS company with over 300 microservices
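Whether Autopilot’s higher unit price pays off depends on how much Standard-mode node capacity goes unused. A minimal model, assuming Standard mode bills for whole nodes while Autopilot bills only for pod requests at a flat premium (real Autopilot SKUs are priced separately, so the premium is an assumption), gives a break-even utilization of 1 / (1 + premium). The function names and inputs below are hypothetical.

```python
def standard_cost_per_requested_vcpu(node_price_per_vcpu_hour: float, utilization: float) -> float:
    """Standard mode: whole nodes are billed, so idle capacity inflates the
    effective price of each vCPU that pods actually request."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return node_price_per_vcpu_hour / utilization


def autopilot_cost_per_requested_vcpu(node_price_per_vcpu_hour: float, premium: float) -> float:
    """Autopilot (simplified): only requested resources are billed, at a unit
    price assumed to be `premium` above the Standard node price."""
    return node_price_per_vcpu_hour * (1 + premium)


def break_even_utilization(premium: float) -> float:
    """Node utilization below which Autopilot is cheaper under this model."""
    return 1 / (1 + premium)


# Hypothetical inputs: $0.04 per vCPU-hour, 35% premium, 55% requested utilization.
price, premium, util = 0.04, 0.35, 0.55
print(f"break-even utilization: {break_even_utilization(premium):.0%}")
print(f"standard: ${standard_cost_per_requested_vcpu(price, util):.4f}/vCPU-h, "
      f"autopilot: ${autopilot_cost_per_requested_vcpu(price, premium):.4f}/vCPU-h")
```

With a 35% premium, break-even falls at about 74% requested utilization; clusters that routinely run well below that level are the ones most likely to see savings like those in the quote.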

Hidden Charges to Watch For

| Cost Category | AKS | GKE | Impact on Monthly Bill |
|---|---|---|---|
| Control Plane | Free tier: no fee; Standard tier: $0.10/cluster/hour | $0.10/cluster/hour (~$73/month); free-tier credit covers one zonal or Autopilot cluster per billing account | Significant for multi-cluster deployments |
| Cluster Autoscaler | Free | Free | Neutral |
| Load Balancer | Standard rates apply | Standard rates apply | Similar costs |
| Ingress Controller | Requires manual setup or Azure Application Gateway | Built-in with GKE Ingress | Potential savings with GKE |
| Network Egress | First 5GB free, then tiered pricing | First 1GB free, then tiered pricing | AKS slightly cheaper for network-heavy workloads |
| Monitoring | Azure Monitor costs for log retention | Cloud Operations Suite billing for logs and metrics | Variable based on retention policies |

Cost Optimization Strategies for Each Platform

Optimizing costs on AKS starts with proper node pool configuration to maximize the Azure Hybrid Benefit for organizations with existing Microsoft licensing. Organizations can save up to 40% on Windows container workloads by applying existing licenses, a distinct advantage over GKE for Windows-centric enterprises. Additionally, reserving virtual machines for predictable workloads through Azure Reserved VM Instances can reduce compute costs by up to 72% compared to pay-as-you-go pricing, significantly improving the economics of long-running AKS clusters.

For GKE deployments, cost efficiency comes from commitment discounts and Autopilot’s resource management. Sustained use discounts are applied automatically to eligible Compute Engine resources that run for a significant portion of the billing month, while committed use discounts offer savings of up to 57% for 1-year or 3-year commitments. GKE’s free tier, however, is granted per billing account rather than per project, so organizations that maintain many isolated projects or development environments under one billing account still pay the management fee for every cluster beyond the first zonal or Autopilot cluster.

Both platforms benefit from effective use of horizontal pod autoscaling and cluster autoscaling. However, GKE’s node auto-provisioning offers finer-grained control by creating node pools whose machine types and hardware features match the requirements of pending pods. For organizations with fluctuating workloads, this can mean better resource utilization and lower overall costs than AKS’s simpler scaling approach.
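Node auto-provisioning can be pictured as a matching problem: for a pod that no existing node pool can host, pick a machine shape that satisfies its CPU, memory, and accelerator requests. The sketch below is a deliberately simplified illustration, not GKE’s actual algorithm; it ignores allocatable overhead, taints, zones, and bin-packing of multiple pods, and the machine catalog and prices are placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MachineType:
    name: str
    vcpus: float
    memory_gib: float
    gpus: int
    hourly_price: float


@dataclass(frozen=True)
class PodRequest:
    cpu: float
    memory_gib: float
    gpus: int = 0


def choose_machine_type(pod: PodRequest, catalog: list[MachineType]) -> Optional[MachineType]:
    """Return the cheapest machine shape that can host the pending pod, or None if none fits."""
    fits = [m for m in catalog
            if m.vcpus >= pod.cpu and m.memory_gib >= pod.memory_gib and m.gpus >= pod.gpus]
    return min(fits, key=lambda m: m.hourly_price, default=None)


# Hypothetical catalog: names and prices are placeholders, not real SKUs.
CATALOG = [
    MachineType("small-2", 2, 8, 0, 0.07),
    MachineType("highmem-4", 4, 32, 0, 0.20),
    MachineType("gpu-8", 8, 32, 1, 0.90),
]
print(choose_machine_type(PodRequest(cpu=1, memory_gib=16), CATALOG).name)  # highmem-4
```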

Comparing Performance and Scalability

Raw performance metrics matter when comparing AKS and GKE, especially for workloads with specific throughput or latency requirements. Independent benchmarks historically showed GKE ahead, but recent Azure updates have narrowed the gap significantly. Performance differences now tend to be workload-specific rather than consistently favoring one platform, so it is important to evaluate both against your own application’s profile.

Network performance is where the platforms differ most. GKE generally delivers lower latency and higher throughput for pod-to-pod and pod-to-service traffic within a cluster, thanks to Google’s highly optimized network fabric and native integration with its global backbone network. For applications with heavy east-west traffic between microservices, this difference can noticeably improve overall responsiveness.

Speed of Cluster Creation

For DevOps teams who are adopting Kubernetes, the speed of cluster provisioning is a key measure of value, as it directly affects the speed of development and the ability to recover from disasters. GKE is consistently faster than AKS when it comes to creating clusters, with standard clusters usually up and running in 3-5 minutes, compared to 8-10 minutes on average for AKS. This advantage in speed also applies to the addition of nodes, with GKE typically adding new nodes 30-40% faster than AKS, making it better able to scale quickly in response to sudden increases in traffic.

When using GKE Autopilot clusters, the speed difference is even more noticeable. These clusters can be set up and ready to take on workloads in as little as 90 seconds. This can have a significant impact on operational efficiency, especially for development teams that are implementing continuous deployment pipelines or organizations that have strict recovery time objectives. However, with each platform update, AKS has been steadily improving in this area. This suggests that Microsoft is actively trying to close this performance gap.

Scaling Abilities

Both Azure Kubernetes Service (AKS) and Google Kubernetes Engine (GKE) offer horizontal pod autoscaling (HPA) and cluster autoscaling. However, GKE provides more advanced scaling features such as Node Auto-Provisioning (NAP) and multidimensional pod autoscaling. AKS uses standard Kubernetes autoscaling, while GKE enhances these features with smart scaling decisions based on multiple metrics at the same time and predictive scaling that anticipates resource needs before they become a problem. These advanced scaling features can provide more consistent performance while reducing over-provisioning for workloads with fluctuating resource needs.
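Both platforms run the upstream Kubernetes Horizontal Pod Autoscaler, whose core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio falls within a tolerance (10% by default). The sketch below implements that rule plus min/max clamping; it omits readiness handling, missing-metric logic, and scale-down stabilization windows, and `desired_replicas` is an illustrative name.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA scaling rule, clamped to the configured replica bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```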

GKE surfaces vertical pod autoscaling (VPA) recommendations that analyze historical resource usage and suggest more appropriate CPU and memory requests. Sizing container resource requests from actual usage patterns instead of developer estimates can greatly improve cluster efficiency. AKS also supports VPA as an optional add-on, but GKE exposes these recommendations more directly in the Google Cloud console, which gives it an edge for workloads that benefit from automated resource tuning.
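A usage-based request recommendation can be sketched as a high percentile of observed usage plus a safety margin. This is a simplification: the open-source VPA recommender works from decaying usage histograms, and the 90th percentile and 15% margin used here are illustrative assumptions rather than a description of either provider’s behavior.

```python
import math


def recommend_request(samples: list[float], percentile: float = 0.9,
                      safety_margin: float = 0.15) -> float:
    """Recommend a resource request: nearest-rank percentile of usage plus a margin."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    rank = max(math.ceil(percentile * len(ordered)), 1)  # nearest-rank method
    return ordered[rank - 1] * (1 + safety_margin)


# Hypothetical CPU usage samples in millicores; the single 400m spike is ignored by p90.
cpu_millicores = [120, 150, 135, 400, 180, 160, 140, 155, 170, 165]
print(round(recommend_request(cpu_millicores)))  # 207
```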

Maximum Number of Nodes

For large-scale enterprise deployments, the maximum number of nodes a platform supports is a key consideration. AKS supports up to 5,000 nodes per cluster on its Standard tier (1,000 on the Free tier), while GKE Standard clusters can scale to 15,000 nodes. This difference matters for very large deployments and for organizations that want to run many applications more efficiently by consolidating them into fewer, larger clusters.

AKS and GKE also differ slightly in maximum pod density: AKS supports up to 250 pods per node, and GKE Standard supports up to 256 pods per node when configured (the default is 110). For dense workloads made up of many small containers, this small difference can affect resource efficiency across the cluster. Both platforms handle most production workloads, but GKE’s higher node ceiling leaves more room for very large deployments that might otherwise need to be split across multiple AKS clusters.
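Node and pod limits translate directly into how many clusters a workload needs. The helper below is a hypothetical planning aid; the pod density and per-cluster node limits in the example are placeholders, so check each provider’s current quota documentation for your tier before relying on the numbers.

```python
def plan_capacity(total_pods: int, pods_per_node: int, max_nodes_per_cluster: int) -> tuple[int, int]:
    """Return (nodes, clusters) needed to host `total_pods` at the given pod density."""
    nodes = -(-total_pods // pods_per_node)          # integer ceiling division
    clusters = -(-nodes // max_nodes_per_cluster)
    return nodes, clusters


# Placeholder inputs: 110 pods per node and two hypothetical per-cluster node limits.
for limit in (5_000, 15_000):
    print(limit, plan_capacity(1_000_000, pods_per_node=110, max_nodes_per_cluster=limit))
```

Under these placeholder inputs, a million pods need 9,091 nodes: two clusters at a 5,000-node limit, one at 15,000.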

For business-critical applications, control-plane availability guarantees deserve a close look. GKE’s regional clusters carry a 99.95% SLA. AKS’s Standard tier matches that figure only for clusters deployed across availability zones and otherwise guarantees 99.9%, while the Free tier has no financially backed SLA. The 0.05-percentage-point gap between 99.9% and 99.95% corresponds to roughly 4.4 fewer hours of potential control-plane downtime per year, which can be significant for globally distributed applications that must be available at all times.
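An uptime SLA converts to a yearly downtime budget of (1 − SLA) × 8,760 hours. This minimal helper makes the comparison concrete; it covers only the guaranteed control-plane availability, not workload availability, and assumes a 365-day year.

```python
HOURS_PER_YEAR = 24 * 365


def max_downtime_hours(sla_percent: float) -> float:
    """Yearly downtime still permitted under an uptime SLA expressed in percent."""
    return (1 - sla_percent / 100) * HOURS_PER_YEAR


for sla in (99.9, 99.95):
    print(f"{sla}% SLA -> up to {max_downtime_hours(sla):.2f} h of downtime per year")
# 99.9% SLA -> up to 8.76 h of downtime per year
# 99.95% SLA -> up to 4.38 h of downtime per year
```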

Security Measures to Safeguard Your Work

Security features are an essential consideration when choosing a managed Kubernetes service, especially for companies in regulated industries. Both AKS and GKE offer extensive security controls, but they use different methods to implement and integrate with their respective cloud security ecosystems. These differences in security architecture reflect each provider’s overall security approach and can significantly affect your security operations and compliance stance.

Both platforms build Kubernetes authentication and authorization on their cloud provider’s identity system. AKS integrates with Azure Active Directory (now Microsoft Entra ID), giving organizations already on Microsoft’s identity platform seamless single sign-on. GKE integrates with Google Cloud IAM, which provides fine-grained permission controls but may require additional configuration for organizations that use external identity providers.

Managing Identities and Access

AKS integrates deeply with Microsoft Entra ID, letting businesses reuse their existing identity system for Kubernetes RBAC. The integration extends to Entra ID groups, so roles can be assigned according to company structure, which simplifies access management for larger teams. It also supports Conditional Access policies, giving security teams the ability to enforce multi-factor authentication or location-based restrictions on cluster access, features that are particularly useful for businesses with stringent governance requirements.

GKE’s access control is built on Google Cloud IAM, which takes a slightly different approach to identity. GKE can connect to external identity providers, for example through Workforce Identity Federation or Identity Service for GKE, but the most seamless experience comes from native Google accounts. GKE pairs Kubernetes RBAC with predefined IAM roles that follow Google Cloud’s permission model, which can simplify management for teams already familiar with GCP security concepts.

Both platforms support keyless, pod-level identity for calling cloud APIs: AKS through Microsoft Entra Workload ID and GKE through Workload Identity, which maps Kubernetes service accounts to Google Cloud IAM principals. Neither requires managing long-lived service account keys, but GKE’s implementation is more tightly built into the platform, which can give it an edge when containerized applications need fine-grained access to cloud services.
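On GKE, linking a Kubernetes service account to a Google service account takes two pieces of configuration: an IAM binding that grants `roles/iam.workloadIdentityUser` to a member of the form `serviceAccount:PROJECT_ID.svc.id.goog[NAMESPACE/KSA_NAME]`, and an `iam.gke.io/gcp-service-account` annotation on the Kubernetes service account. The sketch below only builds those values as Python data; the project, namespace, and account names are hypothetical, and applying them (for example with `gcloud` and `kubectl`) is left out.

```python
def workload_identity_member(project_id: str, namespace: str, ksa_name: str) -> str:
    """IAM member to grant roles/iam.workloadIdentityUser on the Google service account."""
    return f"serviceAccount:{project_id}.svc.id.goog[{namespace}/{ksa_name}]"


def linked_service_account(namespace: str, ksa_name: str, gsa_email: str) -> dict:
    """Kubernetes ServiceAccount manifest annotated with the Google service account to impersonate."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": ksa_name,
            "namespace": namespace,
            "annotations": {"iam.gke.io/gcp-service-account": gsa_email},
        },
    }


# Hypothetical names for illustration only.
print(workload_identity_member("example-project", "payments", "api"))
# serviceAccount:example-project.svc.id.goog[payments/api]
```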

Network Policies and Isolation

Network security implementations differ as well. AKS supports Azure Network Policy Manager, Calico, and Cilium (through Azure CNI Powered by Cilium), while GKE supports Calico and GKE Dataplane V2, which is built on Cilium. Cilium-based dataplanes enforce policies with eBPF programs in the node’s kernel rather than long iptables chains, which can improve performance at scale. For businesses with strict network segmentation requirements, GKE’s Dataplane V2 offers these benefits as a built-in option, while AKS users get comparable enforcement by choosing the Cilium dataplane.
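Whichever engine enforces them, Kubernetes network policies share the same semantics: a pod selected by no ingress policy accepts all traffic, and once any policy selects it, only traffic allowed by at least one selecting policy gets through. The simplified evaluator below models just that rule with `matchLabels`-style pod selectors in a single namespace; it ignores namespace selectors, IP blocks, ports, and egress, and its type and function names are hypothetical.

```python
from dataclasses import dataclass, field

Labels = dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """matchLabels semantics: every selector key/value must be present; {} selects everything."""
    return all(labels.get(key) == value for key, value in selector.items())


@dataclass
class IngressPolicy:
    pod_selector: Labels                                    # pods this policy isolates
    allow_from: list[Labels] = field(default_factory=list)  # peer selectors; [] allows nothing


def ingress_allowed(policies: list[IngressPolicy], src: Labels, dst: Labels) -> bool:
    selecting = [p for p in policies if matches(p.pod_selector, dst)]
    if not selecting:
        return True  # no policy selects the destination pod, so it is not isolated
    # Policies are additive: any selecting policy that allows the source is enough.
    return any(matches(peer, src) for p in selecting for peer in p.allow_from)


default_deny = IngressPolicy(pod_selector={})
api_from_frontend = IngressPolicy({"app": "api"}, [{"app": "frontend"}])
policies = [default_deny, api_from_frontend]
print(ingress_allowed(policies, {"app": "frontend"}, {"app": "api"}))  # True
print(ingress_allowed(policies, {"app": "batch"}, {"app": "api"}))     # False
```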

Both Azure Kubernetes Service (AKS) and Google Kubernetes Engine (GKE) offer private clusters, but the details of their implementation differ. In AKS, private clusters completely isolate the Kubernetes API server, which does not have a public IP address. Management access requires either a jump box or Azure Private Link. In GKE, private clusters also limit access to the API server, but they offer more flexible options for controlled public access through authorized networks. Both platforms effectively address the security concern of exposing the API server, but GKE offers more detailed controls for organizations that require limited public access in addition to strong security controls.
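Conceptually, an authorized-networks setting is a CIDR allowlist checked against a client’s source address before the request reaches the API server. The sketch below shows that check with Python’s standard `ipaddress` module; the ranges are documentation addresses standing in for a hypothetical office network and CI runner, and real enforcement happens in the provider’s infrastructure, not in user code.

```python
import ipaddress


def is_authorized(client_ip: str, authorized_cidrs: list[str]) -> bool:
    """True if the client address falls inside any allowlisted CIDR block."""
    addr = ipaddress.ip_address(client_ip)
    # Membership across IP versions (IPv6 address, IPv4 network) is simply False.
    return any(addr in ipaddress.ip_network(cidr) for cidr in authorized_cidrs)


# Hypothetical allowlist: an office egress range and a single CI runner.
AUTHORIZED = ["203.0.113.0/24", "198.51.100.7/32"]
print(is_authorized("203.0.113.45", AUTHORIZED))  # True
print(is_authorized("192.0.2.10", AUTHORIZED))    # False
```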

Security Scanning Tools

Both platforms include built-in security scanning, though they differ in approach and coverage. On GKE, Container Threat Detection (part of Security Command Center) monitors running containers for suspicious activity, and Artifact Analysis (formerly Container Analysis) scans images for known vulnerabilities when they are pushed to Artifact Registry, before they are deployed. Once the scanning API is enabled, this protection covers the container lifecycle with little additional configuration.

AKS integrates closely with Microsoft Defender for Containers, which combines runtime threat protection, vulnerability assessment, and compliance monitoring in one place. Defender for Containers is part of Microsoft Defender for Cloud (formerly Azure Security Center), which provides a centralized view of security posture across all Azure resources, not just Kubernetes workloads. For organizations already using Azure’s security tools, this integrated approach can simplify security operations and provide more consistent protection across hybrid environments.

Google Kubernetes Engine’s (GKE) Binary Authorization feature adds an extra layer of security by enforcing deployment policies based on image signatures and attestations, effectively creating a zero-trust model for container deployments. Azure Kubernetes Service (AKS) can achieve similar capabilities by integrating with Azure Policy and third-party tools, but GKE’s built-in implementation provides a more streamlined experience for enforcing supply chain security controls.
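The core idea behind attestation-based admission can be sketched as a policy check: admit an image only if it is pinned by digest and carries attestations from every required attestor. This is a hypothetical model for illustration, not the Binary Authorization API; the registry path, attestor names, and in-memory attestation store are placeholders.

```python
import re

# Hypothetical policy model: images must be referenced by an immutable digest
# and carry attestations from every required attestor.
DIGEST_REF = re.compile(r"^[^@\s]+@sha256:[0-9a-f]{64}$")


def admit(image: str, attestations: dict[str, set[str]], required_attestors: set[str]) -> bool:
    if not DIGEST_REF.match(image):
        return False  # mutable tags such as ":latest" cannot be tied to an attestation
    signed_by = attestations.get(image, set())
    return required_attestors <= signed_by  # subset check: every required attestor signed


IMAGE = "registry.example.com/team/app@sha256:" + "a" * 64
ATTESTATIONS = {IMAGE: {"built-by-ci", "vuln-scan-passed"}}
print(admit(IMAGE, ATTESTATIONS, {"built-by-ci", "vuln-scan-passed"}))               # True
print(admit("registry.example.com/team/app:latest", ATTESTATIONS, {"built-by-ci"}))  # False
```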

Regulatory Compliance

Both platforms are highly compliant, though the level of compliance varies slightly depending on the region and the maturity of the service. AKS benefits from Azure’s strong presence in regulated industries, with certifications including FedRAMP High, HIPAA, PCI DSS, and SOC 1/2/3. Microsoft’s established relationships with government and financial services organizations have resulted in compliance offerings specifically tailored to these highly regulated environments, potentially giving AKS an edge for organizations in these sectors.

GKE meets most of the same compliance certifications as AKS, including ISO/IEC 27017 for cloud security and ISO/IEC 27018 for protecting personally identifiable information, both of which Azure also holds. Because the compliance portfolios are so similar, the choice usually depends on specific regional requirements or industry-specific certifications relevant to your organization. Both platforms offer compliance monitoring tools that help maintain continuous compliance through automated policy enforcement and regular assessment.

Worldwide Accessibility and Local Availability

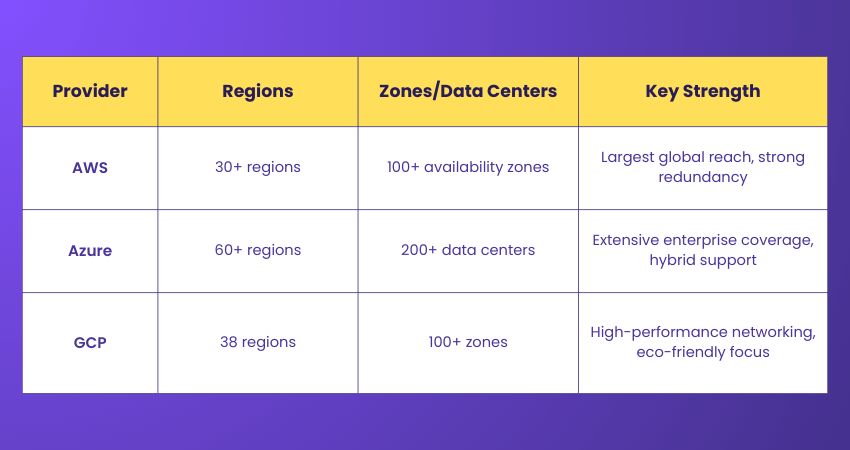

As companies grow internationally and face data residency obligations, the geographic distribution of workloads becomes increasingly crucial. AKS and GKE both provide widespread global coverage, but their regional deployment tactics and edge location capabilities differ, which can affect application performance and compliance. The choice between platforms frequently depends on particular local availability in markets that are important to your business operations.

At present, Azure operates in more geographic regions than Google Cloud, which could give AKS an edge for organizations that need to have a presence in specific territories where Google does not have data centers. This larger footprint is especially important for organizations that must meet strict data sovereignty requirements or that serve customers in regions where there are few cloud provider options. Microsoft’s early investment in global infrastructure continues to give AKS wider geographic coverage, but Google is quickly increasing its regional presence.

Comparison of Data Center Coverage

Compared to GKE’s 35+ regions, AKS currently operates in more than 60 regions globally. This difference is significant for organizations that need to deploy in specific territories, especially in the Middle East, Africa, and some parts of Asia where Azure has set up data centers ahead of Google Cloud. The gap in regional availability is most noticeable in regulated industries such as financial services and healthcare, where the location of the infrastructure is often dictated by data residency requirements.

Even though Google has fewer regions in total, its network architecture often offers performance benefits even from more remote data centers. Google’s private global backbone network, which transports traffic between regions, usually provides lower latency than public internet routes used by other providers. This network advantage can partially compensate for the difference in region count for applications where the global user experience is prioritized over strict data residency requirements.

Handling Multiple Regions

Both platforms offer solutions for the complexity that comes with managing Kubernetes deployments across multiple regions. GKE has a more developed approach to managing multiple clusters, using Fleet management to provide unified control of clusters across regions and even different environments (including on-premises via Anthos). This centralized method of management makes operations for applications distributed globally easier and supports complex strategies for deploying across multiple regions.

AKS offers multi-cluster management through Azure Arc and Azure Kubernetes Fleet Manager, but these are not yet as mature as GKE’s equivalents. For businesses that need complex multi-region architectures with centralized management, GKE currently offers more powerful tools and automation. However, AKS integrates closely with Azure Traffic Manager for global load balancing and Azure Front Door for multi-region application delivery, which can simplify building globally distributed applications.

Compatibility with DevOps and CI/CD

When it comes to adopting Kubernetes, the developer’s experience and how well it integrates with existing DevOps tools can greatly affect the productivity of a team. Both AKS and GKE offer strong integration points for continuous integration and continuous delivery pipelines. However, they differ in terms of native tools and support from third parties. The choice often boils down to the existing developer ecosystem and preferred workflow patterns.

Microsoft’s focus on developers means that AKS has a deep integration with Azure DevOps and GitHub (now owned by Microsoft), making it easier for teams already using these tools to go from code to production. Google’s focus on cloud-native development patterns has led to a tight integration between GKE and Google Cloud Build, Cloud Deploy, and their Artifact Registry. Both approaches support modern deployment workflows effectively, but with different tool preferences that may be more in line with your existing practices.

Native Pipeline Tools

AKS integrates closely with Azure DevOps Pipelines, which provides templates and tasks built for Kubernetes deployments. The integration extends to GitHub Actions through Microsoft-maintained actions that simplify AKS deployments. This close relationship between Microsoft’s developer tools and AKS gives teams already in the Microsoft ecosystem a seamless experience, with streamlined authentication, preconfigured deployment tasks, and integrated monitoring.

Google Cloud Build and Cloud Deploy integrate tightly with GKE, providing serverless CI/CD pipelines designed for containerized applications. Google’s focus on declarative configuration and GitOps workflows fits well with Kubernetes-native patterns, potentially offering a more cloud-native developer experience. For teams adopting infrastructure-as-code and GitOps principles, GKE’s native tooling offers consistency and alignment with Kubernetes best practices.

Capabilities for Integrating with Third Parties

In addition to their own native tools, both platforms are compatible with widely used third-party CI/CD tools such as Jenkins, CircleCI, and GitLab CI. Thanks to Microsoft’s solid relationships within the enterprise sector, AKS offers robust options for integration and documentation for standard enterprise CI/CD platforms. GKE, on the other hand, capitalizes on Google’s extensive experience in the cloud-native ecosystem, providing outstanding support for tools like ArgoCD, Flux, and other projects backed by CNCF.

The differences become more apparent in the authentication and credential management these integrations require. AKS uses Azure service principals or managed identities, while GKE uses Google service accounts with workload identity federation. If your organization already relies on specific CI/CD tools, review the authentication and authorization mechanisms each platform requires, because poorly designed integration points often become operational issues.

Tools for Monitoring and Observability

For maintaining reliable Kubernetes deployments and troubleshooting issues as they come up, effective monitoring and observability capabilities are a must. AKS and GKE each use a different approach for monitoring integration, with AKS using Azure Monitor and GKE built around Google Cloud Operations Suite (formerly Stackdriver). The differences in monitoring architecture reflect each provider’s wider observability strategy and can have a significant effect on operational workflows.

Both platforms offer the three main components of observability—metrics, logs, and traces—but they do so with different collection methods, retention policies, and visualization tools. The integration depth with the underlying platform often ends up being the deciding factor. Teams usually prefer monitoring solutions that provide unified visibility across all their cloud resources, rather than Kubernetes-specific tools that create operational silos.

Logging Solutions

AKS works seamlessly with Azure Monitor for container insights, automatically collecting logs from nodes, pods, and containers with retention policies that you can customize. The log data is sent to Azure Log Analytics, which allows complex queries through Kusto Query Language (KQL) and works with Azure’s larger monitoring ecosystem. This centralized logging approach makes it easier to correlate between Kubernetes events and logs from other Azure resources, but it also comes with costs for log ingestion and storage that can add up for applications that generate a lot of logs.

GKE captures similar data and stores it in Cloud Logging, which is closely connected to Cloud Monitoring. Google’s approach emphasizes automatic configuration with sensible defaults, requiring less initial setup than AKS but potentially offering less customization for specialized requirements. The pricing models also differ: Cloud Logging bills by the volume of logs ingested into its storage (the first 50 GiB per project each month are free, and 30 days of retention are included), while Azure Log Analytics bills for data ingestion plus retention beyond its included period. Cost comparisons therefore depend largely on your specific logging volumes and retention requirements.
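Because the two billing models split charges differently between ingestion and retention, a comparison is easiest with both components parameterized. The function below is a hypothetical estimator; the prices in the example are placeholders rather than current list prices, so substitute figures from each provider’s pricing page.

```python
def monthly_log_cost(ingested_gib: float, ingest_price_per_gib: float,
                     free_gib: float = 0.0,
                     retained_gib_beyond_included: float = 0.0,
                     retention_price_per_gib_month: float = 0.0) -> float:
    """Monthly log bill = billable ingestion + data kept past the included retention period."""
    billable_ingest = max(ingested_gib - free_gib, 0.0)
    return (billable_ingest * ingest_price_per_gib
            + retained_gib_beyond_included * retention_price_per_gib_month)


# Placeholder scenario: 500 GiB/month of container logs; the second case also keeps
# 1,000 GiB beyond the included retention window.
ingest_only = monthly_log_cost(500, ingest_price_per_gib=0.50, free_gib=50)
ingest_and_retention = monthly_log_cost(500, ingest_price_per_gib=2.00,
                                        retained_gib_beyond_included=1000,
                                        retention_price_per_gib_month=0.10)
print(f"${ingest_only:,.2f} vs ${ingest_and_retention:,.2f}")
```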

Gathering Data

Metrics collection also differs between the platforms, which affects both the developer experience and operational processes. On AKS, the Azure Monitor agent can scrape Prometheus-format metrics into Azure Monitor managed service for Prometheus, which stores them in an Azure Monitor workspace and supports PromQL queries. This fits well within Azure’s monitoring ecosystem, but teams with existing self-managed Prometheus setups, recording rules, or tools may need extra configuration to carry them over.

Google Cloud’s GKE provides more built-in support for Prometheus, either through Google Cloud Managed Service for Prometheus or through direct integration with Cloud Monitoring. This method offers better compatibility with the broader Kubernetes ecosystem and makes it easier for teams already familiar with PromQL to adopt it. For organizations that have standardized Prometheus monitoring, GKE usually provides a more seamless experience with fewer compatibility issues.
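Both managed Prometheus offerings accept PromQL through a Prometheus-compatible HTTP API, so existing queries can often be reused. The sketch below only builds an instant-query URL for the standard `/api/v1/query` path. The base URL is a placeholder (each provider documents its own query endpoint), the metric name assumes cAdvisor container metrics are being collected, and authentication with Google OAuth or Microsoft Entra tokens is deliberately left out.

```python
from typing import Dict, Optional
from urllib.parse import urlencode


def instant_query_url(base_url: str, promql: str, eval_time: Optional[float] = None) -> str:
    """Build a GET URL for the standard Prometheus HTTP API instant-query endpoint."""
    params: Dict[str, str] = {"query": promql}
    if eval_time is not None:
        params["time"] = str(eval_time)  # evaluation time as a Unix timestamp in seconds
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode(params)}"


# CPU usage per namespace over the last five minutes, from the cAdvisor container metric.
CPU_BY_NAMESPACE = "sum by (namespace) (rate(container_cpu_usage_seconds_total[5m]))"

# Hypothetical base URL; look up the real query endpoint for your workspace or project.
url = instant_query_url("https://prometheus.example.com", CPU_BY_NAMESPACE)
```

Keeping queries in plain PromQL like this is what makes dashboards and alerts portable between the two managed services and a self-hosted Prometheus.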

Dashboard Features

Visualization tools are the interface through which teams interact with observability data, so their usability and integration capabilities are critical to operational efficiency. AKS offers Azure portal dashboards and Azure Monitor workbooks, which provide highly customizable views that combine data from multiple Azure services. Tight integration with Azure role-based access control (RBAC) makes it easy to share dashboards across teams while keeping the necessary access restrictions in place.

GKE visualizations center on Cloud Monitoring dashboards and the Kubernetes monitoring views in the Google Cloud console. Google prioritizes prebuilt dashboards for common patterns, which need less setup up front but may offer less customization than Azure workbooks. Both platforms can integrate with popular third-party tools such as Grafana, although setup complexity varies with authentication mechanisms and data-source compatibility.

Support and Upgrade Paths

Kubernetes moves quickly: the upstream project ships three minor releases a year and supports each one for roughly 14 months. Version support policies and upgrade automation are therefore important criteria when choosing a managed Kubernetes service. AKS and GKE both offer automated upgrades, but they differ in version availability, degree of automation, and long-term support, all of which can affect operational stability.
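The upstream numbers translate into a simple planning rule. If a new minor version arrives every four months and each is supported for about 14, roughly three to four minor versions are in support at any time, and a cluster that never upgrades falls out of support a little over a year after its version shipped. The sketch below does that arithmetic. The release date is hypothetical, and managed providers publish their own support dates, which can differ from upstream.

```python
import calendar
from datetime import date

RELEASES_PER_YEAR = 3   # upstream minor releases per year
SUPPORT_MONTHS = 14     # approximate upstream patch-support window per minor release


def add_months(start: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to the end of the target month."""
    index = start.month - 1 + months
    year, month = start.year + index // 12, index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def concurrently_supported_minors() -> float:
    """Average number of minor versions inside the support window at the same time."""
    months_between_releases = 12 / RELEASES_PER_YEAR
    return SUPPORT_MONTHS / months_between_releases


# Hypothetical release date, used only to illustrate the arithmetic.
upstream_eol = add_months(date(2024, 8, 13), SUPPORT_MONTHS)
```

In practice this means planning for about three minor upgrades a year to stay near the latest version, or at least one upgrade within each support window to remain supported.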

Version management highlights a basic difference in philosophy. GKE focuses on keeping pace with upstream Kubernetes, adopting new versions quickly and automating upgrades. AKS is more cautious, testing for longer and giving customers more control over the upgrade process. These contrasting approaches produce distinct operational experiences that suit different change management policies.

Availability of Kubernetes Versions

GKE is usually quicker than AKS to introduce new Kubernetes versions, often making them available within weeks of the upstream release. Faster adoption gives users earlier access to new features but may expose them to less thoroughly tested versions. GKE mitigates this risk with release channels (Rapid, Regular, Stable, and Extended) that let customers choose their preferred balance between feature availability and stability.

AKS adopts a more cautious approach to introducing new versions, usually waiting 2-3 months after the upstream release before making new versions widely available. This longer testing period may offer more stability but delays access to new features. For organizations that value stability over the latest features, AKS’s cautious approach may be more in line with their risk tolerance, while teams that need immediate access to new Kubernetes capabilities may prefer GKE’s quicker release cycle.

Complexity of the Upgrade Process

The two platforms also handle cluster upgrades differently: GKE provides more automation, while AKS offers more granular control. GKE supports fully automated upgrades that respect maintenance windows and maintenance exclusions, so upgrades can be kept out of critical business hours. This automation reduces operational overhead, but it requires trusting Google to test and roll out upgrades safely.
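Conceptually, a maintenance window says when automatic upgrades may start, and an exclusion carves out periods when they must not. The sketch below models that rule for a daily recurring window. It is a simplified illustration using naive UTC datetimes, not GKE’s scheduler or API, which support richer recurrence rules and different exclusion scopes; the window times and freeze dates are hypothetical.

```python
from datetime import datetime, time, timedelta
from typing import List, Tuple


def in_daily_window(ts: datetime, start: time, duration: timedelta) -> bool:
    """True if ts falls inside a window that opens every day at `start` and lasts `duration`."""
    # Check the window that opened today and the one that opened yesterday,
    # so windows that cross midnight are handled.
    for days_back in (0, 1):
        opened = datetime.combine(ts.date() - timedelta(days=days_back), start)
        if opened <= ts < opened + duration:
            return True
    return False


def upgrade_may_start(ts: datetime, start: time, duration: timedelta,
                      exclusions: List[Tuple[datetime, datetime]]) -> bool:
    """An automatic upgrade may start inside the window, unless an exclusion covers ts."""
    if any(ex_start <= ts < ex_end for ex_start, ex_end in exclusions):
        return False
    return in_daily_window(ts, start, duration)


# Hypothetical policy: 22:00-02:00 UTC nightly, blocked during a product-launch week.
NIGHTLY = (time(22, 0), timedelta(hours=4))
LAUNCH_FREEZE = [(datetime(2025, 11, 24), datetime(2025, 12, 1))]
```

Exclusions take precedence over the window, which matches the intent described above: the window expresses preference, the exclusion expresses a hard business constraint.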

AKS leans more on hands-on intervention. Unless you opt into an auto-upgrade channel (such as patch, stable, or rapid) and a planned maintenance schedule, control plane and node pool upgrades must be started explicitly. Although this increases operational overhead, it gives more control over timing and validation, which may suit organizations with strict change management requirements. AKS performs surge upgrades, creating new nodes before draining and removing old ones to reduce disruption; GKE offers comparable surge upgrades as well as blue-green node pool upgrades.
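Surge settings trade upgrade speed against temporary extra capacity. The sketch below estimates both for a simple model in which each round adds a fixed number of new nodes before retiring the same number of old ones. It is an approximation, not either provider’s exact algorithm, and it ignores percentage-based surge values, PodDisruptionBudgets, and drain timeouts.

```python
import math


def surge_rounds(node_count: int, max_surge: int) -> int:
    """Rounds needed when each round adds `max_surge` new nodes, then drains and removes
    the same number of old ones (simplified: assumes no nodes may be unavailable)."""
    if node_count < 0 or max_surge < 1:
        raise ValueError("node_count must be >= 0 and max_surge >= 1")
    return math.ceil(node_count / max_surge)


def peak_node_count(node_count: int, max_surge: int) -> int:
    """Largest pool size during the upgrade; surge nodes need spare quota and IP addresses."""
    return node_count + min(max_surge, node_count)
```

For example, a 10-node pool with a surge of 3 needs four rounds and briefly runs 13 nodes, so the subscription or project needs quota and subnet addresses for the three extra nodes.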

Long-Term Support Policies

GKE offers extended support through its Extended release channel, which keeps selected minor versions supported for up to 24 months; the extended period can carry an additional charge. Critical security patches and bug fixes continue during this time without forcing an upgrade to a newer minor version, which provides stability for applications with stringent validation requirements or regulatory constraints.

AKS now has a comparable program. Long-Term Support (LTS), available with the AKS Premium tier, extends support for selected Kubernetes versions to two years. Organizations that need guaranteed long-term support for specific versions should compare the two programs’ eligible versions, pricing, and patching commitments, since a formal extended-support policy can reduce upgrade-related operational risk for highly regulated workloads.

Ecosystem Integration with Cloud Services

Managed Kubernetes services like AKS and GKE provide more than just the platform. They also integrate extensively with the broader ecosystem of cloud services. While both offer extensive integration with the services of their respective cloud providers, the approach and coverage areas differ. This can significantly impact the architecture of applications and the speed of development. For organizations that are already invested in specific cloud services, these integration capabilities often become the deciding factors.

Microsoft and Google have different integration philosophies. Microsoft favors tight coupling between AKS and Azure services through the Azure portal and shared identity via Microsoft Entra ID (formerly Azure Active Directory). Google leans toward a more Kubernetes-native approach, exemplified by Config Connector, which lets teams declare Google Cloud resources as Kubernetes objects; Azure offers a similar open-source project, Azure Service Operator. These differing approaches create distinct developer experiences that may suit different organizational preferences.

Storage Capabilities

Each platform ships CSI drivers for its own cloud storage services, with different performance characteristics and features. AKS integrates with Azure Disks for block storage and Azure Files for shared file access, and it supports Azure NetApp Files for high-performance workloads. Storage class provisioning ties into Azure’s wider storage ecosystem, which simplifies management for teams that already know Azure storage. GKE provides CSI drivers for Compute Engine Persistent Disk and Hyperdisk block storage, Filestore for shared file storage, and Cloud Storage FUSE for mounting buckets as volumes.
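The provider differences show up concretely in StorageClass definitions: the Kubernetes object is the same, and only the CSI provisioner name and its parameters change. The sketch below builds roughly equivalent SSD-backed classes for each platform as Python dicts. The class name `fast-ssd` is hypothetical, and both services already ship default storage classes, so a custom one is only needed when the defaults don’t fit.

```python
import json


def storage_class(name: str, provisioner: str, parameters: dict) -> dict:
    """Build a StorageClass manifest that delays provisioning until a Pod is scheduled."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "parameters": parameters,
        # Wait for the consuming Pod so the disk is created in the zone where it lands.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }


# AKS: Azure Disk CSI driver with a Premium SSD SKU.
aks_ssd = storage_class("fast-ssd", "disk.csi.azure.com", {"skuName": "Premium_LRS"})

# GKE: Compute Engine Persistent Disk CSI driver with an SSD persistent disk.
gke_ssd = storage_class("fast-ssd", "pd.csi.storage.gke.io", {"type": "pd-ssd"})

print(json.dumps(aks_ssd, indent=2))  # kubectl apply -f also accepts JSON manifests
```

Because workloads reference only the class name in their PersistentVolumeClaims, keeping the same name on both platforms lets the same application manifests run unchanged.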

Connecting to Databases

Database connectivity reflects each provider’s range of database services and networking architecture. AKS workloads can reach Azure’s managed databases privately through private endpoints and virtual network integration, reducing exposure to public networks. This applies across Azure’s database offerings, including Azure SQL Database, Azure Database for PostgreSQL and MySQL, and Cosmos DB, with consistent authentication through Microsoft Entra ID (formerly Azure Active Directory).

GKE offers similar connectivity to Google Cloud’s database services, with especially strong integration for Cloud SQL through the Cloud SQL Auth Proxy and for Spanner through direct API access. Authentication relies on Workload Identity Federation for GKE, which lets pods authenticate to Google Cloud services without managing long-lived credentials. For companies that use Google’s database services heavily, these native patterns can greatly simplify secure connectivity.
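On both platforms, the credential-free pattern starts with a Kubernetes ServiceAccount that is linked to a cloud identity through an annotation. The sketch below builds those ServiceAccounts as Python dicts; the names, namespace, service account email, and client ID are hypothetical. Neither manifest works on its own: the cluster must have the feature enabled, and you still need the matching IAM binding on Google Cloud or the federated identity credential on Azure.

```python
import json


def gke_service_account(name: str, namespace: str, gsa_email: str) -> dict:
    """ServiceAccount linked to a Google service account (Workload Identity Federation for GKE)."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {"iam.gke.io/gcp-service-account": gsa_email},
        },
    }


def aks_service_account(name: str, namespace: str, client_id: str) -> dict:
    """ServiceAccount linked to a managed identity (Microsoft Entra Workload ID on AKS)."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {"azure.workload.identity/client-id": client_id},
        },
    }


# On AKS, Pods using this ServiceAccount also need this label to receive the projected token.
AKS_POD_LABELS = {"azure.workload.identity/use": "true"}

# Hypothetical identifiers for illustration only.
gke_sa = gke_service_account("orders-api", "shop", "orders-db@my-project.iam.gserviceaccount.com")
aks_sa = aks_service_account("orders-api", "shop", "00000000-0000-0000-0000-000000000000")
print(json.dumps(gke_sa, indent=2))
```

Application code then uses the standard cloud SDK credential chain, so no database passwords or key files need to be mounted into the pod.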

Artificial Intelligence and Machine Learning Capabilities

Integration with AI and machine learning tools is a key differentiator, especially for data-intensive applications. GKE integrates closely with Vertex AI (the successor to Google’s AI Platform) and BigQuery, and offers containers and operators optimized for deploying models at scale. Google’s machine learning expertise shows in more advanced patterns for AI workloads on Kubernetes, including specialized tooling for model serving, distributed training, and workflow orchestration. AKS, for its part, integrates with Azure Machine Learning, which can use AKS clusters as compute targets for training and inference.

Choosing the Right Platform for Your Business

Choosing between AKS and GKE is rarely a simple feature comparison. It means matching each platform’s capabilities to your organization’s specific needs, current investments, and future strategy. Both platforms are mature for most common use cases, so the deciding criteria have shifted from specific technical limitations to ecosystem fit, team expertise, and cloud strategy.

More often than not, your existing cloud footprint will be the primary driver in your decision, with the benefits of unified billing, consistent security models, and integrated monitoring usually outweighing the minor differences in features between Kubernetes platforms. For organizations that are starting from scratch or implementing multi-cloud strategies, the decision becomes more complex, requiring a careful evaluation of how each platform aligns with your technical requirements and organizational constraints.

Organizations with a Microsoft Focus

For organizations that have already invested heavily in Microsoft technologies, AKS tends to offer the smoothest experience. Integration with Microsoft Entra ID (formerly Azure Active Directory), Azure DevOps, and the wider Microsoft ecosystem can provide operational efficiencies that outweigh specific feature advantages GKE may offer. For teams already familiar with Microsoft tools and interfaces, AKS offers a gentler learning curve and a more consistent management experience across cloud resources.

Organizations Focused on Google Cloud

Teams that are already using Google Cloud services or those that need advanced data processing and machine learning capabilities often find that GKE provides better integration and performance. Google’s long-standing relationship with Kubernetes means it often has a more mature implementation of advanced features and is typically quicker to adopt new Kubernetes capabilities. Organizations that prioritize a cloud-native approach and are closely aligned with open-source patterns may find that GKE’s philosophy is more in line with how they operate.

Strategies for Multiple Clouds

For businesses running genuine multi-cloud architectures, GKE has an edge through Anthos (now part of GKE Enterprise), which provides consistent Kubernetes management across Google Cloud, on-premises environments, and other cloud providers such as Azure. This unified management plane can simplify operations for teams running workloads across several environments, though it adds cost and complexity.

Azure Arc offers comparable capabilities for AKS, extending Azure’s management plane to on-premises and other cloud environments, albeit with less Kubernetes-specific functionality than Anthos. Organizations that are dedicated to Microsoft’s hybrid cloud strategy may find that Arc provides sufficient multi-cloud capabilities while preserving consistency with their existing Azure investments.

Considerations Based on Industry

Some industries naturally align with particular cloud providers because of compliance offerings, specialized services, or historical relationships. Healthcare organizations, for example, often choose Azure for Microsoft’s strong HIPAA compliance support and healthcare-specific data solutions. Some retailers favor Google Cloud to avoid depending on AWS, whose parent company Amazon is a direct competitor. Media and entertainment companies may find that Google’s content delivery capabilities better meet their needs. Such industry considerations often carry more weight than generic platform comparisons.

Common Questions

In our experience helping clients move to either AKS or GKE, some questions always come up when choosing a platform. The answers below are based on what we’ve learned from actual projects, not just what the platforms are supposed to be able to do. We hope these real-world insights will clear up some of the issues that tech leaders often run into when they’re looking at these platforms.

Which is better for machine learning workloads, AKS or GKE?

GKE generally offers stronger support for machine learning workloads, thanks to deeper integration with Google’s AI ecosystem, GPU sharing options such as time-sharing and multi-instance GPUs, and mature support for tools such as Kubeflow, which originated at Google. These capabilities help with distributed training, model serving, and efficient GPU utilization, and can significantly speed up ML operations. AKS supports similar workloads and integrates with Azure Machine Learning, but organizations focused heavily on machine learning typically find GKE’s tooling more mature for AI-centric applications.
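At the Kubernetes level, a GPU workload looks the same on both platforms: a container requests the `nvidia.com/gpu` extended resource, which the NVIDIA device plugin advertises on GPU nodes. GKE’s advantages come from what surrounds that request (GPU sharing, node provisioning, managed drivers), not from a different API. The sketch below assumes a GPU node pool with the device plugin running; the image and the optional GKE accelerator label value are illustrative.

```python
from typing import Dict, Optional


def gpu_pod(name: str, image: str, gpus: int,
            node_selector: Optional[Dict[str, str]] = None) -> dict:
    """Pod manifest that requests whole GPUs through the nvidia.com/gpu extended resource."""
    if gpus < 1:
        raise ValueError("request at least one GPU")
    spec: dict = {
        "containers": [{
            "name": name,
            "image": image,
            # With only a limit set, Kubernetes uses the same value as the request.
            "resources": {"limits": {"nvidia.com/gpu": gpus}},
        }],
        "restartPolicy": "Never",
    }
    if node_selector:
        spec["nodeSelector"] = node_selector
    return {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": name}, "spec": spec}


# Hypothetical image; on GKE you can also pin an accelerator type with a node label.
train = gpu_pod("train-job", "registry.example.com/trainer:latest", 1,
                {"cloud.google.com/gke-accelerator": "nvidia-l4"})
```

Keeping provider-specific placement in an optional node selector leaves the rest of the manifest portable between AKS and GKE GPU node pools.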

Is it possible to move my Kubernetes cluster from AKS to GKE without any downtime?

Although it is theoretically possible to migrate between managed Kubernetes services without any downtime, practical implementations usually involve some service disruption. The most reliable method is to run parallel clusters and gradually shift traffic using DNS or load balancing, but this requires an application architecture that can support this type of distribution. The biggest challenge is managing the state, especially for databases and persistent volumes that can’t be active in both environments at the same time. The most successful migrations are done in stages with planned maintenance windows, rather than trying to achieve true zero-downtime transitions.
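The parallel-cluster approach usually advances traffic in stages and rolls back if the new cluster misbehaves. The sketch below captures only that decision logic. The step percentages and error-rate threshold are hypothetical, applying the weights is left to whichever weighted DNS or global load balancer you use, and nothing here addresses stateful data, which still needs its own replication or cutover plan.

```python
from typing import Sequence, Tuple

# Hypothetical percentages of traffic sent to the new cluster at each stage.
DEFAULT_STEPS = (5, 25, 50, 100)


def next_new_cluster_share(current: int, error_rate: float, max_error_rate: float,
                           steps: Sequence[int] = DEFAULT_STEPS) -> int:
    """Return the next share (0-100) of traffic for the new cluster.

    Rolls back to 0 if the new cluster's error rate exceeds the threshold;
    otherwise advances to the next step, staying at 100 once the cutover is complete."""
    if error_rate > max_error_rate:
        return 0
    for step in steps:
        if step > current:
            return step
    return 100


def weights(new_share: int) -> Tuple[int, int]:
    """(old_cluster_weight, new_cluster_weight) for a weighted DNS record or load balancer."""
    return (100 - new_share, new_share)
```

Holding each step long enough to observe real traffic (and keeping DNS TTLs short) matters more than the exact percentages chosen.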

Which platform is better for Windows containers?

AKS is the clear winner when it comes to Windows containers. This is no surprise given Microsoft’s expertise in Windows workloads. AKS has more advanced Windows node pools, better Active Directory integration for Windows authentication, and more detailed documentation for Windows-specific deployment patterns. If your organization heavily relies on Windows containers, especially if you’re running .NET Framework (not .NET Core) applications, AKS is the more reliable choice with fewer operational issues.

While GKE does offer support for Windows containers, it comes with more restrictions and less integration with Google Cloud’s wider Windows capabilities. If your organization is planning to run primarily Windows workloads, you’ll encounter fewer problems and receive better support on AKS. However, this advantage becomes less pronounced in mixed environments where Linux containers are the majority.
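On both platforms, Windows workloads are steered to Windows node pools with the standard `kubernetes.io/os` node label. GKE additionally taints its Windows Server nodes with `node.kubernetes.io/os=windows:NoSchedule`, so Pods need a matching toleration there. The helper below is a sketch that adds both to a Pod spec held as a Python dict; the container name and image are hypothetical.

```python
def schedule_on_windows(pod_spec: dict) -> dict:
    """Return a copy of a Pod spec that targets Windows nodes on either platform."""
    spec = dict(pod_spec)
    # Well-known label that the kubelet sets on every node.
    spec["nodeSelector"] = {**spec.get("nodeSelector", {}), "kubernetes.io/os": "windows"}
    # GKE taints Windows Server nodes so Linux Pods avoid them; tolerating the taint
    # is harmless on clusters where it is absent.
    spec["tolerations"] = list(spec.get("tolerations", [])) + [{
        "key": "node.kubernetes.io/os",
        "operator": "Equal",
        "value": "windows",
        "effect": "NoSchedule",
    }]
    return spec


# Hypothetical .NET Framework container image.
web = schedule_on_windows({"containers": [{"name": "legacy-web",
                                           "image": "registry.example.com/legacy-web:ltsc2022"}]})
```

Returning a copy rather than mutating the input keeps the helper safe to apply to shared base specs in mixed Linux and Windows deployments.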

What distinguishes AKS and GKE in terms of cluster backup and disaster recovery?

GKE has the more complete built-in option in Backup for GKE, a managed service that backs up both Kubernetes resource configuration and persistent volume data, runs on scheduled backup plans, and can produce application-consistent backups through pre- and post-backup hooks. This makes disaster recovery planning simpler. AKS offers Azure Backup for AKS, which requires installing a backup extension and configuring a Backup vault, and many teams also rely on third-party tools such as Velero, which means more setup for complete protection. For businesses with stringent recovery point objectives, GKE’s built-in service currently delivers more capability with less operational overhead.
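Whichever tool takes the backups, the schedule bounds the achievable recovery point objective. The sketch below shows the basic arithmetic under a simplifying assumption: each backup captures data as of its start time and becomes usable only once it finishes. It ignores replication lag and restore time, which affect recovery time rather than recovery point.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta, backup_duration: timedelta) -> timedelta:
    """Upper bound on lost changes if a failure hits just before the next backup finishes.

    Changes made after one backup's start are protected only when the next backup
    completes, so the exposure is one interval plus one backup run."""
    return backup_interval + backup_duration


def meets_rpo(backup_interval: timedelta, backup_duration: timedelta, rpo: timedelta) -> bool:
    """True if the schedule keeps worst-case data loss within the target RPO."""
    return worst_case_data_loss(backup_interval, backup_duration) <= rpo
```

An hourly schedule whose backups take ten minutes, for example, does not meet a one-hour RPO under this model.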

How do the command-line tools for managing AKS and GKE compare?

Both platforms extend the standard kubectl experience with their own CLIs. The Azure CLI’s az aks command group (plus the optional aks-preview extension for preview features) covers AKS cluster management, while the Google Cloud CLI offers comparable functionality for GKE through gcloud container clusters. Both fetch credentials into your kubeconfig with a get-credentials command (az aks get-credentials and gcloud container clusters get-credentials). GKE then authenticates through the gke-gcloud-auth-plugin, while AKS clusters that use Microsoft Entra ID authentication require the separate kubelogin tool, which is why many teams find GKE’s flow somewhat more streamlined. Teams used to one provider’s CLI patterns may face a learning curve when switching, but everyday Kubernetes interactions through kubectl are the same.
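Because both get-credentials commands merge entries into the same kubeconfig, teams working across both providers often need to tell contexts apart in scripts. The helper below is a sketch that relies on the naming convention gcloud uses for GKE contexts (`gke_PROJECT_LOCATION_CLUSTER`), while AKS contexts default to the bare cluster name. Treat the convention as an assumption to verify against your own kubeconfig.

```python
from typing import NamedTuple, Optional


class GkeContext(NamedTuple):
    project: str
    location: str
    cluster: str


def parse_gke_context(name: str) -> Optional[GkeContext]:
    """Split a kubeconfig context name written by `gcloud container clusters get-credentials`.

    Project IDs, locations, and GKE cluster names cannot contain underscores,
    so splitting on "_" is unambiguous for contexts that follow the convention."""
    parts = name.split("_")
    if len(parts) != 4 or parts[0] != "gke":
        return None  # for example an AKS context, which defaults to the cluster name
    return GkeContext(*parts[1:])
```

A script can then branch on whether the current context parses as GKE before calling provider-specific tooling.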

When deciding between Azure Kubernetes Service and Google Kubernetes Engine, it all comes down to your organization’s individual needs, current cloud investments, and long-term plan. Both platforms offer mature Kubernetes implementations with robust security, reliability, and management features. By assessing your specific use cases in relation to the strengths and weaknesses highlighted in this comparison, you can make a decision that best suits your technical requirements and business goals.

If your organization needs expert advice on choosing a platform or migration strategies, SlickFinch offers expert consulting on Kubernetes implementations on both Azure and Google Cloud platforms (and also AWS). We can help you navigate the complexities of modern container orchestration with confidence.