Key Takeaways

Karpenter chooses instance types to fit pending pods, which can lower cloud costs by reducing over-provisioning.

EKS Cluster Autoscaler is a mature, widely used option that scales the node groups you already run on Amazon EKS.

Karpenter provides finer-grained resource allocation based on workload needs.

Cluster Autoscaler suits teams that want predictable scaling within predefined node groups.

Choosing the right tool depends on your specific cloud strategy and workload requirements.

Introduction to Karpenter and EKS Cluster Autoscaler

In Kubernetes deployments, autoscaling is crucial for optimizing resource usage and managing costs. On Amazon EKS, two popular tools for scaling cluster nodes are Karpenter and the Cluster Autoscaler. The Cluster Autoscaler works well for many use cases, but Karpenter was developed to meet needs it does not cover, such as choosing instance types at launch time instead of scaling predefined node groups. Here we compare their features and benefits so you can decide which is right for your needs.

What is Karpenter?

Overview of Karpenter

Karpenter is an open-source node autoscaler for Kubernetes, originally developed by AWS. It was built to address the limitations of node-group-based autoscaling by provisioning nodes directly, choosing instance types that fit the pods waiting to be scheduled.

Features of Karpenter

Karpenter stands out due to its advanced features that go beyond standard autoscaling:

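Optimal Resource Utilization: Karpenter aims to use resources efficiently by launching right-sized nodes and consolidating workloads off underused ones, reducing waste and lowering costs.

Customized Scaling: NodePools let you constrain instance types, capacity types (on-demand or Spot), architectures, and zones for different workloads, offering more control.

Ease of Use: Karpenter installs with a Helm chart and is configured through Kubernetes custom resources, so its scaling rules live alongside your other manifests.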

How Karpenter Works

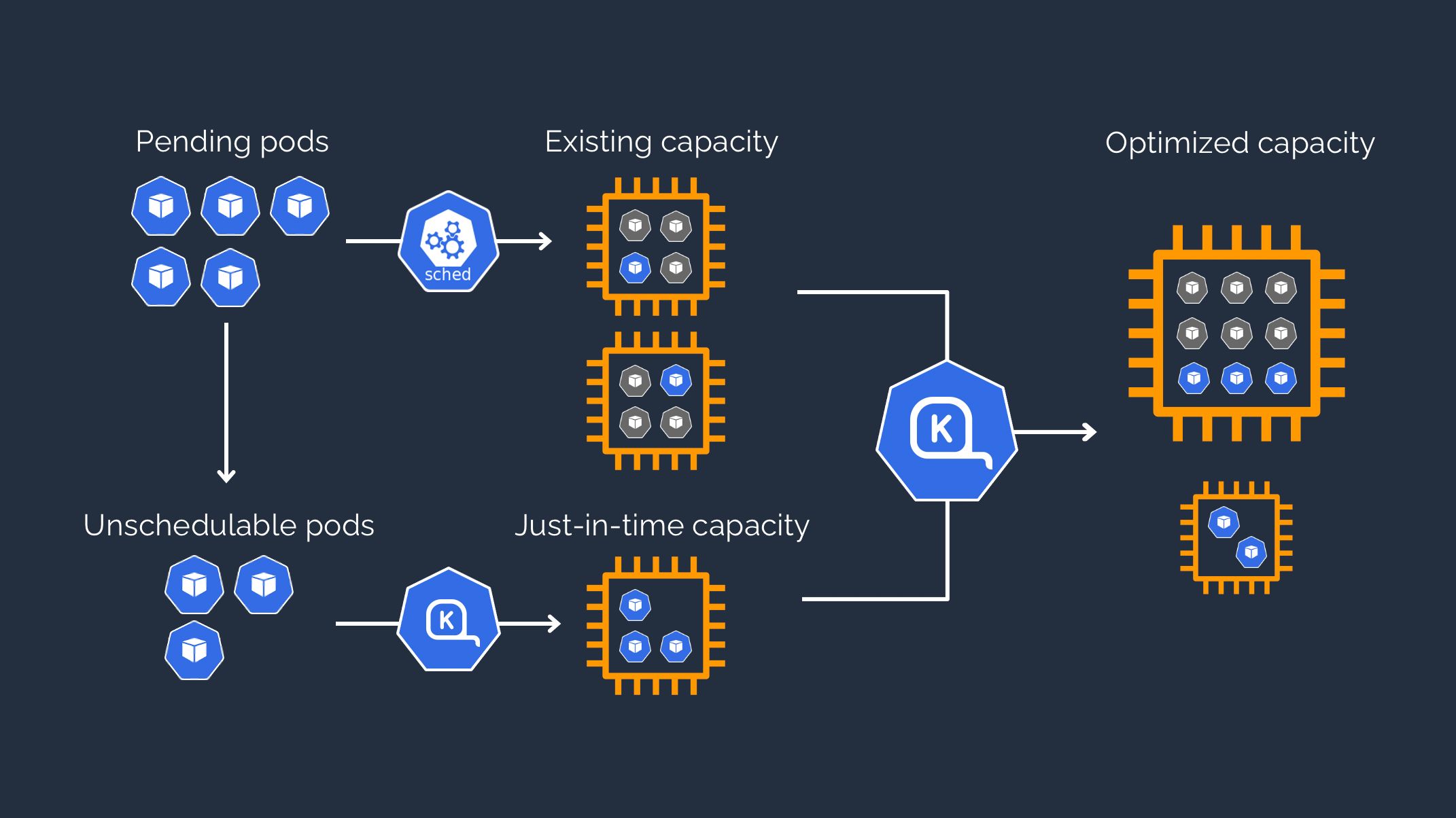

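Karpenter watches for pods that the Kubernetes scheduler has marked as unschedulable. It evaluates their resource requests and scheduling constraints (node selectors, affinities, tolerations, topology spread) and launches nodes that fit them. Because it selects instance types at launch time, it can provision nodes sized to the pending workload, avoiding over-provisioning. It can also consolidate workloads onto fewer or cheaper nodes when capacity is underused.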
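To make the idea concrete, here is a minimal Python sketch of the core decision. It is not Karpenter's actual algorithm: given the resource requests of pending pods, it picks the cheapest instance type that can hold them all on one node. The catalog, instance names, and prices are hypothetical. The real controller also weighs scheduling constraints, packs pods across multiple new nodes, and offers EC2 several candidate instance types rather than one.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    cpu: float         # vCPUs
    memory_gib: float
    hourly_usd: float  # hypothetical price, for illustration only


@dataclass(frozen=True)
class Pod:
    name: str
    cpu: float         # CPU request
    memory_gib: float  # memory request


# Hypothetical catalog: names, sizes and prices are made up for the example.
CATALOG = [
    InstanceType("small", 2, 4, 0.10),
    InstanceType("medium", 4, 16, 0.20),
    InstanceType("large", 8, 32, 0.40),
    InstanceType("cpu-large", 16, 32, 0.70),
]


def pick_instance(pending: List[Pod], catalog: List[InstanceType]) -> Optional[InstanceType]:
    """Return the cheapest instance type that can hold all pending pods on one node.

    Simplification: sums requests and ignores system overhead, DaemonSets,
    and splitting pods across several new nodes.
    """
    need_cpu = sum(p.cpu for p in pending)
    need_mem = sum(p.memory_gib for p in pending)
    fitting = [t for t in catalog if t.cpu >= need_cpu and t.memory_gib >= need_mem]
    return min(fitting, key=lambda t: t.hourly_usd, default=None)


pending = [Pod("api", 1.5, 3), Pod("worker", 2, 8)]
choice = pick_instance(pending, CATALOG)
print(choice.name if choice else "no single instance type fits")  # medium
```

With 3.5 vCPU and 11 GiB requested, the 4 vCPU / 16 GiB type is the cheapest that fits. A larger type would also work, but it would cost more and leave more capacity idle.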

What is EKS Cluster Autoscaler?

Overview of EKS Cluster Autoscaler

The EKS Cluster Autoscaler is the Kubernetes Cluster Autoscaler, an upstream Kubernetes project, running on Amazon EKS, where AWS documents it as one of the supported ways to scale nodes. It automatically adjusts the number of nodes in your node groups based on the current workload, ensuring that applications have the resources they need to run smoothly.

Dynamic Node Management: The Cluster Autoscaler adds or removes nodes based on demand.

Seamless Integration with EKS: It works directly with the EC2 Auto Scaling Groups behind EKS node groups, providing a reliable and stable autoscaling solution.

Reliability: Known for its stability and robustness, the Cluster Autoscaler is a trusted tool in many Kubernetes environments.

Features of EKS Cluster Autoscaler

The EKS Cluster Autoscaler has several key features that make it a popular choice:

Automatic Scaling: It adds nodes when pods cannot be scheduled for lack of capacity and removes nodes that stay underutilized.

Integration with EKS: It works with both EKS managed node groups and self-managed node groups, ensuring smooth operation.

Predefined Auto Scaling Groups: It scales node groups that you define in advance, each usually with a fixed instance type, providing a structured approach.

How EKS Cluster Autoscaler Works

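The Cluster Autoscaler periodically looks for pods that cannot be scheduled because no node has enough free capacity. It simulates whether adding a node from one of its node groups would let those pods run. If so, it increases that group's desired capacity in the underlying Amazon EC2 Auto Scaling Group. In the other direction, it removes nodes whose pods can be moved elsewhere and whose requested CPU and memory fall below a utilization threshold (50% by default).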
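The key contrast with Karpenter is that the node shape comes from the node group. The following Python sketch is a rough approximation, not the Cluster Autoscaler's real scheduling simulation. It estimates how many nodes of a fixed shape a group would add for a set of pending requests, capped by the group's maximum size. The group name and sizes are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    name: str
    node_cpu: float         # every node in the group has this shape
    node_memory_gib: float
    current_size: int
    max_size: int           # the Auto Scaling Group's maximum


def nodes_to_add(group: NodeGroup, pending_cpu: float, pending_mem_gib: float) -> int:
    """Rough estimate of how many fixed-shape nodes to add for the pending requests."""
    by_cpu = math.ceil(pending_cpu / group.node_cpu)
    by_mem = math.ceil(pending_mem_gib / group.node_memory_gib)
    wanted = max(by_cpu, by_mem)
    # The group cannot grow beyond its configured maximum size.
    return max(0, min(wanted, group.max_size - group.current_size))


general = NodeGroup("general-8cpu", node_cpu=8, node_memory_gib=32, current_size=3, max_size=5)
print(nodes_to_add(general, pending_cpu=3.5, pending_mem_gib=11))  # 1
```

Here 3.5 vCPU and 11 GiB of pending requests trigger one 8 vCPU / 32 GiB node. That leaves most of the new node unrequested until other pods land on it. The real autoscaler also chooses among several eligible node groups using its configured expander.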

Scalability Approach

When it comes to scalability, Karpenter and EKS Cluster Autoscaler take different approaches. Karpenter focuses on workload-based scaling: it provisions capacity based on the requirements of the pods that are waiting to run. This means it can create nodes with specific configurations to match those requirements, thereby optimizing resource usage.

On the other hand, EKS Cluster Autoscaler operates at the node-group level. When pods cannot be scheduled, it adds nodes of the shape a node group already defines, and it removes nodes that are underutilized. This approach is effective for general scaling. However, node shapes are fixed in advance, so it can leave more capacity unused than Karpenter when pod requests don’t match a group’s instance type.

Customization Options

Customization is another area where Karpenter shines. It lets you define NodePools, each with its own constraints on instance categories, sizes, capacity types (on-demand or Spot), architectures, and zones, plus limits on total capacity. For example, you can set up separate NodePools for compute-intensive and memory-intensive workloads, so that each gets suitable instance types without overspending.
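In current Karpenter releases (the `karpenter.sh/v1` API), the resource that plays this role is the NodePool. The Python sketch below builds two NodePool manifests as plain dictionaries. One is restricted to compute-optimized (`c`) instance families and the other to memory-optimized (`r`) families. It assumes an EC2NodeClass named `default` already exists. The pool names and CPU limits are hypothetical values.

```python
import json
from typing import List


def node_pool(name: str, instance_categories: List[str], cpu_limit: str) -> dict:
    """Build a Karpenter NodePool manifest, assuming the karpenter.sh/v1 API."""
    return {
        "apiVersion": "karpenter.sh/v1",
        "kind": "NodePool",
        "metadata": {"name": name},
        "spec": {
            "template": {
                "spec": {
                    # Assumes an EC2NodeClass named "default" already exists.
                    "nodeClassRef": {
                        "group": "karpenter.k8s.aws",
                        "kind": "EC2NodeClass",
                        "name": "default",
                    },
                    "requirements": [
                        # Restrict this pool to the given EC2 instance categories.
                        {"key": "karpenter.k8s.aws/instance-category",
                         "operator": "In", "values": instance_categories},
                        # Allow both on-demand and Spot capacity.
                        {"key": "karpenter.sh/capacity-type",
                         "operator": "In", "values": ["on-demand", "spot"]},
                    ],
                }
            },
            # Upper bound on total vCPUs this pool may provision.
            "limits": {"cpu": cpu_limit},
        },
    }


# Hypothetical pool names and limits.
compute = node_pool("compute-optimized", ["c"], cpu_limit="200")
memory = node_pool("memory-optimized", ["r"], cpu_limit="200")
print(json.dumps(compute, indent=2))
```

`kubectl apply -f` accepts JSON as well as YAML, so the printed output can be applied directly. Karpenter labels the nodes it launches with `karpenter.sh/nodepool`, so a workload can target one pool with a node selector on that label.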

In contrast, the EKS Cluster Autoscaler is configured mainly through command-line flags and the node groups you define. You can approximate the same split by creating separate node groups per workload type. However, each new instance type or combination usually means another node group to manage. This makes it less flexible for organizations with diverse workload requirements.

Node-Level vs. Workload-Level Operations

Node-Level Operations: EKS Cluster Autoscaler operates at the node-group level, adding or removing nodes whose shape is fixed by each group.

Workload-Level Operations: Karpenter operates at the workload level, choosing each new node’s instance type from the requirements of the pods waiting to run.

By focusing on workload-level operations, Karpenter can provide more efficient resource utilization. Because it provisions nodes based on the specific needs of pending workloads, rather than adding nodes of a predefined shape, it can help reduce costs and improve performance, contributing to Kubernetes cost optimization on AWS EKS.

Benefits of Using Karpenter

Karpenter offers several key benefits that make it an attractive choice for organizations looking to optimize their Kubernetes clusters.

Optimized Resource Utilization

One of the primary benefits of Karpenter is its ability to optimize resource utilization. By provisioning nodes based on the specific needs of the workloads, it helps keep the capacity you pay for in use. This can reduce waste and lower costs, making it a cost-effective solution for managing Kubernetes clusters.

Cost Savings

Besides optimizing resource utilization, Karpenter can also produce meaningful cost savings. It right-sizes nodes for the workloads and consolidates pods onto fewer or cheaper nodes when demand drops, which reduces unnecessary spending on cloud resources. This makes it a strong choice for organizations looking to manage their cloud costs effectively.

Custom Metrics and Scaling

Karpenter does not scale on custom metrics itself. It reacts to pods that cannot be scheduled, using their resource requests and scheduling constraints. To scale on application or custom metrics, pair it with a pod-level autoscaler such as the Horizontal Pod Autoscaler (HPA) or KEDA. The pod autoscaler adds replicas when your metrics call for them, and Karpenter provisions nodes for any replicas that don’t fit on existing capacity. The Cluster Autoscaler relies on the same division of labor.
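Metric-driven scaling of pods is typically the job of the Horizontal Pod Autoscaler. The Kubernetes documentation describes its core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below reproduces only that formula. Min/max replica bounds, stabilization windows, and multiple metrics are left out. When the extra replicas don’t fit on existing nodes, they stay Pending, and a node autoscaler acts on that signal.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, tolerance: float = 0.1) -> int:
    """desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric).

    Mirrors the documented HPA formula, including the default tolerance band;
    min/max replica bounds and stabilization windows are omitted.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    return math.ceil(current_replicas * ratio)


# 4 replicas averaging 150 requests/s each against a target of 100 per replica.
print(hpa_desired_replicas(4, current_metric=150, target_metric=100))  # 6
```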

Benefits of Using EKS Cluster Autoscaler

While Karpenter offers many advanced features, EKS Cluster Autoscaler also has its own set of benefits that make it a popular choice for many organizations.

It is a reliable and stable solution that integrates seamlessly with Amazon EKS, providing a robust and easy-to-use autoscaling tool.

Dynamic Node Management

The EKS Cluster Autoscaler excels in dynamic node management. It adds nodes when pods are pending for lack of capacity and removes underutilized ones, so the cluster’s size follows demand. Keep in mind that new nodes still need time to launch and join the cluster, so scaling is responsive rather than instantaneous.

Moreover, the Cluster Autoscaler works with Amazon EC2 Auto Scaling Groups to manage node groups, providing a structured and reliable approach to scaling. This integration with AWS services ensures that the autoscaling process is seamless and efficient.

Seamless Integration with EKS

Another significant benefit of the EKS Cluster Autoscaler is how well it fits Amazon EKS node groups. Because it scales through EC2 Auto Scaling Groups, it works with the AWS features those groups already use, such as launch templates and multiple Availability Zones. The result is a cohesive and reliable scaling solution built on familiar AWS building blocks.

Reliability and Stability

Reliability and stability are critical factors when it comes to autoscaling solutions. The EKS Cluster Autoscaler is known for its robustness and reliability, making it a trusted tool for many Kubernetes environments. It provides consistent performance and adds capacity during peak times, up to the maximum size configured for each node group.

Overall, the EKS Cluster Autoscaler is a dependable solution that offers dynamic node management, seamless integration with EKS, and reliable performance. These benefits make it an excellent choice for organizations looking for a stable and effective autoscaling tool.

Choosing the Right Tool for Your Needs

Deciding between Karpenter and EKS Cluster Autoscaler depends on your specific cloud strategy and workload requirements. Both tools offer unique benefits, and the right choice comes down to your needs and priorities.

Below, we look at the factors to consider when choosing between these two autoscaling solutions, along with some use case scenarios to help you make an informed decision.

Factors to Consider

When choosing between Karpenter and EKS Cluster Autoscaler, consider the following factors:

Workload Requirements: Consider the specific needs of your workloads. If you have diverse workloads with varying resource requirements, Karpenter’s advanced customization options may be beneficial.

Cost Management: If cost optimization is a priority, Karpenter’s ability to provision right-sized nodes based on workload needs can help reduce cloud costs.

Integration with AWS Services: If seamless integration with Amazon EKS and other AWS services is essential, the EKS Cluster Autoscaler may be the better choice.

Reliability and Stability: For organizations that prioritize reliability and stability, the EKS Cluster Autoscaler is a trusted and robust solution.

Use Case Scenarios

To help illustrate the differences between Karpenter and EKS Cluster Autoscaler, let’s look at some use case scenarios:

Scenario 1: An organization with diverse workloads that require tailored scaling policies may benefit from Karpenter’s advanced customization options.

Scenario 2: A company looking to optimize cloud costs by avoiding over-provisioning and ensuring efficient resource utilization may find Karpenter to be the ideal solution.

Scenario 3: An organization that prioritizes seamless integration with Amazon EKS and other AWS services may prefer the EKS Cluster Autoscaler for its reliable performance and ease of use.

Scenario 4: A business that values stability and robustness in its autoscaling solution may choose the EKS Cluster Autoscaler for its consistent and reliable performance.

Choosing between Karpenter and EKS Cluster Autoscaler can be challenging, but understanding the unique benefits of each tool can help make the decision easier. Let’s explore some practical considerations and best practices for implementing these autoscaling solutions.

Transitioning Between Tools

Transitioning from one autoscaling tool to another can be complex, but with careful planning, it can be done smoothly. If you’re considering moving from the EKS Cluster Autoscaler to Karpenter, or vice versa, follow these steps for a seamless transition.

Evaluate Your Current Setup: Understand how your current autoscaling solution is configured and identify any dependencies or customizations.

Plan the Transition: Create a detailed plan that outlines the steps needed to transition, including any potential downtime or impact on your applications.

Test in a Staging Environment: Before making changes in production, test the new autoscaling solution in a staging environment to identify any issues.

Monitor the Transition: Keep a close eye on the transition process, monitoring resource utilization and application performance to ensure everything runs smoothly.
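For a move from the Cluster Autoscaler to Karpenter, one sensible order, similar to the one in Karpenter’s migration documentation, is: get Karpenter running first, then stop the Cluster Autoscaler, then shrink the existing node group. That way only one autoscaler reacts to pending pods at any time. The Python helper below only returns that sequence of `kubectl` and `aws` CLI commands for review; it does not run them. It assumes the following:

- The Cluster Autoscaler is deployed as `deployment/cluster-autoscaler`.
- Both components live in `kube-system`.
- Karpenter’s pods carry the label `app.kubernetes.io/name=karpenter`.

The cluster name, node group name, and final node group size are placeholders.

```python
from typing import List


def cutover_commands(cluster: str, nodegroup: str, final_size: int = 2,
                     namespace: str = "kube-system") -> List[str]:
    """Return, in order, commands for a hypothetical Cluster Autoscaler to Karpenter cutover.

    Assumes Karpenter, a NodePool and an EC2NodeClass are already installed,
    and that Cluster Autoscaler runs as deployment/cluster-autoscaler in `namespace`.
    The commands are returned for review only; nothing is executed here.
    """
    return [
        # 1. Confirm the Karpenter controller pods are running before handing over.
        f"kubectl get pods -n {namespace} -l app.kubernetes.io/name=karpenter",
        # 2. Stop Cluster Autoscaler so only one autoscaler reacts to pending pods.
        f"kubectl scale deployment/cluster-autoscaler -n {namespace} --replicas=0",
        # 3. Pin the old node group to a small fixed size (e.g. enough to host
        #    the Karpenter controller); Karpenter provisions everything else.
        f"aws eks update-nodegroup-config --cluster-name {cluster} "
        f"--nodegroup-name {nodegroup} "
        f"--scaling-config minSize={final_size},maxSize={final_size},desiredSize={final_size}",
    ]


for step in cutover_commands("demo-cluster", "demo-nodegroup"):
    print(step)
```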

Implementation and Best Practices

Implementing Karpenter or EKS Cluster Autoscaler requires careful planning and execution. Here are some best practices to ensure a successful implementation:

Setting Up Karpenter

Setting up Karpenter involves several key steps:

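Install Karpenter: Create the IAM roles Karpenter needs (one for the controller, one for the nodes it launches), then use Helm or another installation method to deploy it in your cluster.

Configure NodePools and EC2NodeClasses: Define NodePools that match the specific needs of your workloads, and an EC2NodeClass with the AMI, subnets, and security groups for new nodes. This allows Karpenter to provision nodes with the right configurations.

Pair with Pod Autoscalers: Karpenter scales nodes in response to pending pods, not custom metrics. If you need scaling driven by application metrics, configure the Horizontal Pod Autoscaler or KEDA for your workloads and let Karpenter supply the nodes.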

Monitor and Optimize: Continuously monitor the performance of Karpenter and make adjustments as needed to optimize resource utilization and cost savings.
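Alongside NodePools, Karpenter on AWS needs an EC2NodeClass. It tells Karpenter which AMI, IAM role, subnets, and security groups to use for new nodes, and a NodePool points to it by name through `nodeClassRef`. The sketch below builds one as a Python dictionary. It assumes the `karpenter.k8s.aws/v1` API, Amazon Linux 2023 AMIs selected by alias, and subnets and security groups that you have tagged with `karpenter.sh/discovery: <cluster name>`. The cluster and role names in the example are hypothetical.

```python
import json


def ec2_node_class(cluster: str, node_role: str, name: str = "default") -> dict:
    """Build a Karpenter EC2NodeClass manifest, assuming the karpenter.k8s.aws/v1 API."""
    # Subnets and security groups are found by this tag, which you must add to them yourself.
    discovery = [{"tags": {"karpenter.sh/discovery": cluster}}]
    return {
        "apiVersion": "karpenter.k8s.aws/v1",
        "kind": "EC2NodeClass",
        "metadata": {"name": name},
        "spec": {
            "role": node_role,  # IAM role name attached to launched nodes
            "amiSelectorTerms": [{"alias": "al2023@latest"}],  # latest Amazon Linux 2023 EKS AMI
            "subnetSelectorTerms": discovery,
            "securityGroupSelectorTerms": discovery,
        },
    }


# Hypothetical cluster and role names.
print(json.dumps(ec2_node_class("demo-cluster", "KarpenterNodeRole-demo-cluster"), indent=2))
```

Selecting `@latest` keeps the example short. Pinning a specific AMI version is the more cautious choice for production, because a new AMI release then won’t roll nodes unexpectedly.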

Setting Up EKS Cluster Autoscaler

Setting up the EKS Cluster Autoscaler involves the following steps:

Install the Autoscaler: Deploy the EKS Cluster Autoscaler in your Kubernetes cluster using Helm or other installation methods.

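Configure Auto Scaling Groups: Create your node groups (EKS managed node groups create their Auto Scaling Groups for you) and set each group’s minimum and maximum size. This provides a structured approach to scaling.

Tune Autoscaler Settings: Adjust the autoscaler’s flags to match your applications’ needs, for example which expander it uses to choose between node groups and its scale-down utilization threshold. Avoid attaching separate EC2 Auto Scaling scaling policies to the same groups, since they would compete with the autoscaler over desired capacity.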

Monitor and Adjust: Continuously monitor the performance of the EKS Cluster Autoscaler and make adjustments as needed to ensure reliable and efficient scaling.
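Most of the Cluster Autoscaler’s behavior is set through command-line flags on its container. As a sketch, the Python function below assembles a typical argument list for AWS that discovers node groups through Auto Scaling Group tags. The flags shown exist in the Cluster Autoscaler, but which ones you need depends on your cluster, and the cluster name is a placeholder. Tag-based discovery assumes your groups carry the `k8s.io/cluster-autoscaler/enabled` and `k8s.io/cluster-autoscaler/<cluster name>` tags.

```python
from typing import List


def cluster_autoscaler_args(cluster: str, expander: str = "least-waste") -> List[str]:
    """Assemble container args for Cluster Autoscaler on AWS with ASG tag auto-discovery."""
    return [
        "./cluster-autoscaler",
        "--cloud-provider=aws",
        # Manage every Auto Scaling Group that carries both of these tag keys.
        "--node-group-auto-discovery=asg:tag="
        f"k8s.io/cluster-autoscaler/enabled,k8s.io/cluster-autoscaler/{cluster}",
        # How to pick among node groups that could all fit the pending pods;
        # least-waste prefers the group that leaves the least idle CPU/memory.
        f"--expander={expander}",
        # Keep similar groups (for example one per Availability Zone) at similar sizes.
        "--balance-similar-node-groups",
        # Allow scale-down of nodes that run non-DaemonSet kube-system pods.
        "--skip-nodes-with-system-pods=false",
        # Nodes below this requested-utilization share become scale-down candidates
        # (0.5 is the default; shown explicitly for visibility).
        "--scale-down-utilization-threshold=0.5",
    ]


print("\n".join(cluster_autoscaler_args("demo-cluster")))
```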

Monitoring and Maintaining Your Scaling Solution

Whether you’re using Karpenter or EKS Cluster Autoscaler, regular monitoring and maintenance are essential for ensuring optimal performance. Here are some best practices:

Regular Monitoring: Continuously monitor resource utilization, application performance, and scaling activities to identify any issues or areas for improvement.

Performance Tuning: Make adjustments to your scaling policies and configurations as needed to optimize resource utilization and cost savings.

Regular Updates: Keep your autoscaling solution up to date with the latest releases and patches to ensure security and performance.

Proactive Maintenance: Perform regular maintenance tasks, such as cleaning up unused resources and optimizing configurations, to ensure smooth operation.
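One concrete monitoring check is how much of each node’s allocatable capacity is actually requested by pods; `kubectl describe node` reports both figures. The Python sketch below flags nodes where both CPU and memory requests are below a threshold. It mirrors, as we understand it, the first condition the Cluster Autoscaler applies before considering a node for removal (controlled by `--scale-down-utilization-threshold`, 0.5 by default). The autoscaler also checks whether the node’s pods can move elsewhere, which this sketch does not. The node names and figures are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class NodeUsage:
    name: str
    allocatable_cpu: float
    allocatable_mem_gib: float
    requested_cpu: float      # sum of CPU requests of pods on the node
    requested_mem_gib: float  # sum of memory requests of pods on the node


def underutilized(nodes: List[NodeUsage], threshold: float = 0.5) -> List[str]:
    """Return names of nodes whose CPU and memory request shares are both below threshold."""
    flagged = []
    for node in nodes:
        cpu_share = node.requested_cpu / node.allocatable_cpu
        mem_share = node.requested_mem_gib / node.allocatable_mem_gib
        # The higher of the two shares decides (assumed to match Cluster Autoscaler's check).
        if max(cpu_share, mem_share) < threshold:
            flagged.append(node.name)
    return flagged


nodes = [
    NodeUsage("node-a", 3.9, 15.0, 1.0, 4.0),  # about 26% CPU, 27% memory requested
    NodeUsage("node-b", 3.9, 15.0, 1.0, 9.0),  # low CPU, but 60% memory requested
]
print(underutilized(nodes))  # ['node-a']
```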

Final Recommendations

Choosing the right autoscaling tool for your Kubernetes cluster depends on your specific needs and priorities. Both Karpenter and EKS Cluster Autoscaler offer unique benefits, and the right choice will depend on factors such as workload requirements, cost management, and integration with AWS services.

By carefully evaluating your needs and considering the unique benefits of each tool, you can choose the best autoscaling solution for your cloud strategy. For more insights, check out this guide on Kubernetes cost optimization on AWS EKS.

Frequently Asked Questions (FAQ)

What are the primary use cases for Karpenter?

Karpenter is ideal for organizations with diverse workloads that require tailored scaling policies. Its advanced customization options allow for efficient resource utilization and cost savings, making it a great choice for managing complex Kubernetes environments.

How does EKS Cluster Autoscaler handle scaling during peak times?

When pods can’t be scheduled for lack of capacity, the EKS Cluster Autoscaler increases the desired size of a suitable node group, up to that group’s maximum. New nodes take time to launch and join the cluster. For predictable peaks, it helps to set maximum sizes with enough room and, if needed, raise minimum sizes ahead of time.